TITLE: Two-Stream Convolutional Networks for Action Recognition in Videos

AUTHOR: Simonyan, Karen and Zisserman, Andrew

FROM: NIPS2014

CONTRIBUTIONS

- A two-stream ConvNet combines spatial and temporal networks.

- A ConvNet trained on multi-frame dense optical flow is able to achieve a good performance in spite of small training dataset

- Multi-task training procedure benefits performance on different datasets.

METHOD

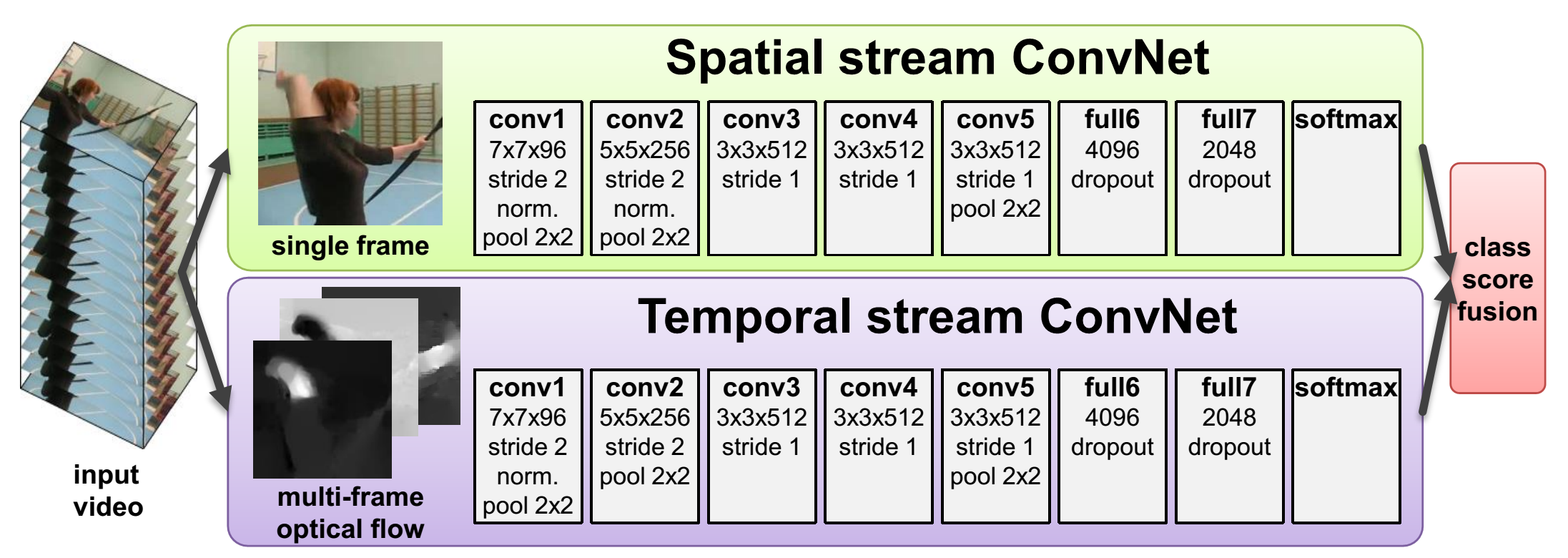

Two-stream architecture convolutional network:

- Spatial stream ConvNet: take a still frame as input and perform action recognition in this single frame.

- Temporal stream ConvNet: take a 2L-channel optical flow/trajectory stacking corresponding to the still frame as input and perform action recognition in this multi-channel input.

- The two outputs of the streams are concated as a feature to train a SVM classifier to fuse them.

SOME DETAILS

- Mean flow subtraction is utilized to eliminate displacements caused by camera movement.

- At test stage, 25 frames (time points) are extracted and their corresponding 2L-channel stackings are sent to the network. In addition, 5 patches and their flips are extracted in space domain.

ADVANTAGES

- Simulate bio-structure of human visual cortex.

- Competitive performance with the state of the art representations in spite of small size of training dataset.

- CNN with convolution filters could generalize hand-crafted features.

DISADVANTAGES

- Can not localize action in neither spatial nor temporal domain.