TITLE: Weakly Supervised Cascaded Convolutional Networks

AUTHOR: Ali Diba, Vivek Sharma, Ali Pazandeh, Hamed Pirsiavash, Luc Van Gool

ASSOCIATION: KU Leuven, Sharif Tech., UMBC, ETH Zürich

FROM: arXiv:1611.08258

CONTRIBUTIONS

A new cascaded network architecture is proposed to train a convolutional neural network for object detection without expensive human annotations.

METHOD

This work trains a CNN to detect objects using image-level annotations, which only indicate which object categories are present in an image. At the training stage, the inputs to the network are 1) the original image, 2) the image-level labels and 3) object proposals. At the inference stage, the image-level labels are excluded. The object proposals can be generated by any method, such as Selective Search or EdgeBoxes. Two different cascaded network structures are proposed.

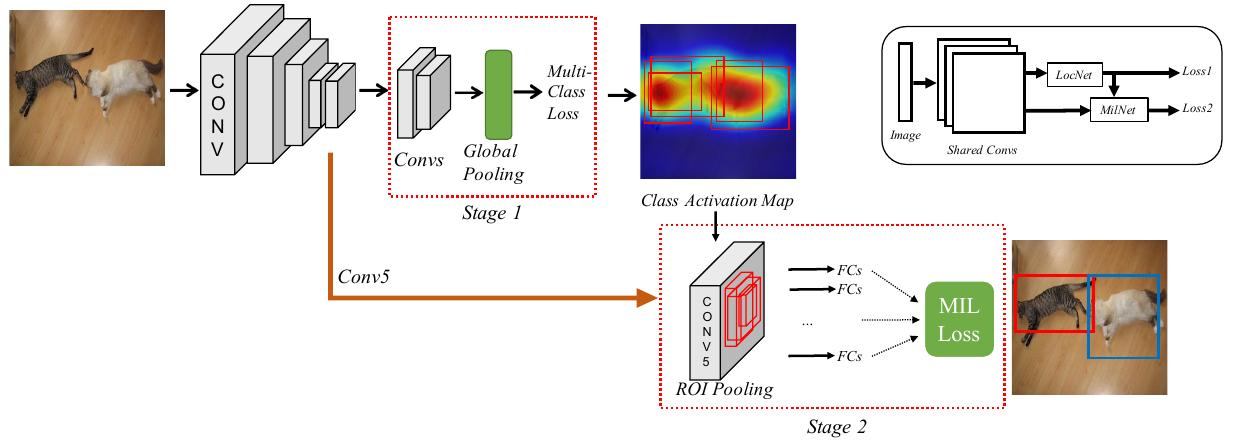

Two-stage Cascade

The two-stage cascade network structure is illustrated in the following figure.

The first stage is a location network, which is a fully convolutional CNN with global average pooling or global max pooling. To learn multiple classes for a single image, an independent loss function is used for each class. The class activation maps are used to select candidate boxes.
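The first stage can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the function names are hypothetical, the "independent loss" is assumed to be a per-class sigmoid cross-entropy, and boxes are ranked by their mean class-activation value, which may differ from the selection rule used in the paper.

```python
import math

def global_avg_pool(class_maps):
    """class_maps[c][y][x] -> one image-level score per class (mean over pixels)."""
    return [sum(sum(row) for row in m) / (len(m) * len(m[0])) for m in class_maps]

def global_max_pool(class_maps):
    """Alternative pooling: the strongest activation of each class map."""
    return [max(max(row) for row in m) for m in class_maps]

def independent_class_loss(scores, labels):
    """Sum of per-class sigmoid cross-entropy terms (assumed loss form).

    labels[c] is 0 or 1. The expression below is the numerically stable
    form of -[y*log(sigmoid(s)) + (1-y)*log(1-sigmoid(s))].
    """
    loss = 0.0
    for s, y in zip(scores, labels):
        loss += max(s, 0.0) - s * y + math.log1p(math.exp(-abs(s)))
    return loss

def box_score(class_map, box):
    """Mean activation inside box = (x0, y0, x1, y1), end coordinates exclusive."""
    x0, y0, x1, y1 = box
    vals = [class_map[y][x] for y in range(y0, y1) for x in range(x0, x1)]
    return sum(vals) / len(vals)

def select_boxes(class_map, boxes, top_k):
    """Keep the top_k proposals with the highest mean activation (assumed rule)."""
    return sorted(boxes, key=lambda b: box_score(class_map, b), reverse=True)[:top_k]
```

Because every class has its own sigmoid term, several classes can be active for the same image, which a single softmax over classes would discourage.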

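The second stage is a multiple instance learning (MIL) network. Given a bag of instances

$$x = \{x_j \mid j = 1, 2, \dots, n\}$$

and a label set

$$Y = \{y_i \mid i = 1, 2, \dots, C\}$$

where each $x_j$ is one of the candidate boxes, $n$ is the number of candidate boxes, $C$ is the number of categories and $y_i \in \{0, 1\}$, and writing $f_{cj}$ for the score of box $x_j$ for class $c$, the probabilities and loss can be defined as

$$\mathrm{Score}_c = \max(f_{c1}, \dots, f_{cn}), \qquad P_c = \frac{\exp(\mathrm{Score}_c)}{\sum_{k=1}^{C} \exp(\mathrm{Score}_k)}, \qquad L_{MIL} = -\sum_{c=1}^{C} y_c \log P_c$$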

In my understanding, only the most confident box in each category is penalized if it is wrong. Besides, the equations in the paper contain some mistakes.
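The "only the most confident box per category" behaviour can be made concrete with a small sketch. It assumes the bag-level score of a class is the maximum over its box scores, followed by a softmax across classes and a log loss against the image-level labels. The name `mil_loss` and the input layout are hypothetical, chosen for illustration.

```python
import math

def mil_loss(box_scores, labels):
    """box_scores[c][j]: score of candidate box j for class c; labels[c] in {0, 1}.

    Returns (loss, per-class probabilities, index of the winning box per class).
    """
    # Bag-level score per class: only the highest-scoring box contributes.
    bag = [max(row) for row in box_scores]
    # Log-softmax across classes, shifted by the max for numerical stability.
    m = max(bag)
    log_z = math.log(sum(math.exp(s - m) for s in bag))
    log_probs = [(s - m) - log_z for s in bag]
    loss = -sum(y * lp for y, lp in zip(labels, log_probs))
    probs = [math.exp(lp) for lp in log_probs]
    # Gradients flow only through these boxes, one per class.
    winners = [row.index(max(row)) for row in box_scores]
    return loss, probs, winners
```

Since `max` passes gradient only to its argmax, changing the score of any non-winning box leaves the loss unchanged, which matches the reading above.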

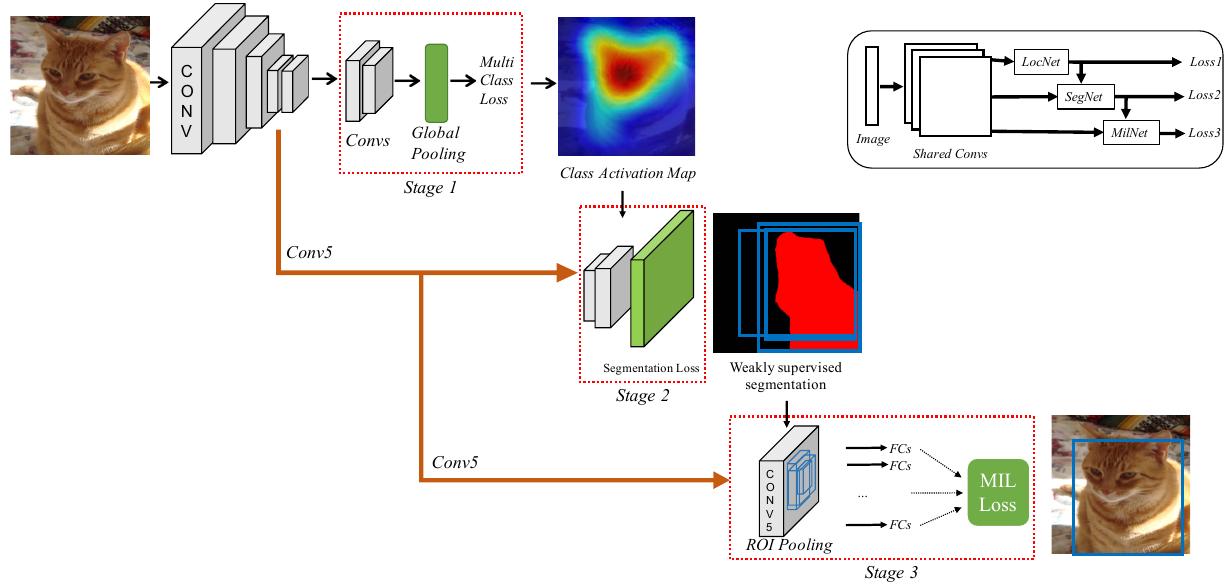

Three-stage Cascade

The three-stage cascade network structure adds a weak segmentation network between the two stages in the two-stage cascade network. It is illustrated in the following figure.

The weak segmentation network uses the results of the first stage as its supervision signal. $s_{ic}$ is defined as the CNN score for pixel $i$ and class $c$ in image $I$. The score is normalized using softmax

$$S_{ic} = \frac{\exp(s_{ic})}{\sum_{k} \exp(s_{ik})}$$

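Considering $y_I$ as the label set for image $I$, the loss function for the weakly supervised segmentation network is given by

$$L_{Seg} = -\frac{1}{|I|} \sum_{i=1}^{|I|} \log S_{i t_i}, \qquad t_i = \arg\max_{c \in y_I} G_{ic}$$

where $S_{i t_i}$ is the softmax-normalized score of pixel $i$ for class $t_i$, $|I|$ is the number of pixels in $I$ and $G$ is the supervision map for the segmentation from the first stage.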
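A minimal sketch of such a weakly supervised segmentation loss follows. It assumes that each pixel's target is the class from the image's label set with the highest value in the first-stage supervision map, and that the loss is the mean negative log of the softmax-normalized score for that target. The function names and the list-of-lists layout are hypothetical.

```python
import math

def pixel_log_softmax(scores):
    """Log of the softmax over classes for one pixel, computed stably."""
    m = max(scores)
    log_z = m + math.log(sum(math.exp(s - m) for s in scores))
    return [s - log_z for s in scores]

def weak_seg_loss(pixel_scores, supervision, label_set):
    """pixel_scores[i][c]: CNN score for pixel i and class c.
    supervision[i][c]: first-stage supervision map value for pixel i, class c.
    label_set: indices of the classes present in the image.
    """
    total = 0.0
    for scores, g in zip(pixel_scores, supervision):
        # Pseudo-label: best class among those known to be in the image.
        target = max(label_set, key=lambda c: g[c])
        total -= pixel_log_softmax(scores)[target]
    return total / len(pixel_scores)
```

Restricting the pseudo-label to `label_set` means a class absent from the image-level labels is never used as a target, even where the supervision map responds strongly to it.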

SOME IDEAS

This work requires little annotation: the only annotation is the image-level label. However, this kind of training still needs complete image-level annotation. For example, if we want to detect 20 categories, we need a 20-d vector to annotate each image. What if we only know the status of 10 of the 20 categories in an image?