TITLE: MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications

AUTHOR: Andrew G. Howard, Menglong Zhu, Bo Chen, Dmitry Kalenichenko, Weijun Wang, Tobias Weyand, Marco Andreetto, Hartwig Adam

ASSOCIATION: Google

FROM: arXiv:1704.04861

CONTRIBUTIONS

- MobileNets, a class of efficient models for mobile and embedded vision applications, are proposed. They are based on a streamlined architecture that uses depthwise separable convolutions to build lightweight deep neural networks.

- Two simple global hyper-parameters that efficiently trade off between latency and accuracy are introduced.

MobileNet Architecture

The core layer of MobileNet is the depthwise separable convolution. The network structure is another factor that improves performance. Finally, the width and resolution of the network can be tuned to trade off latency against accuracy.

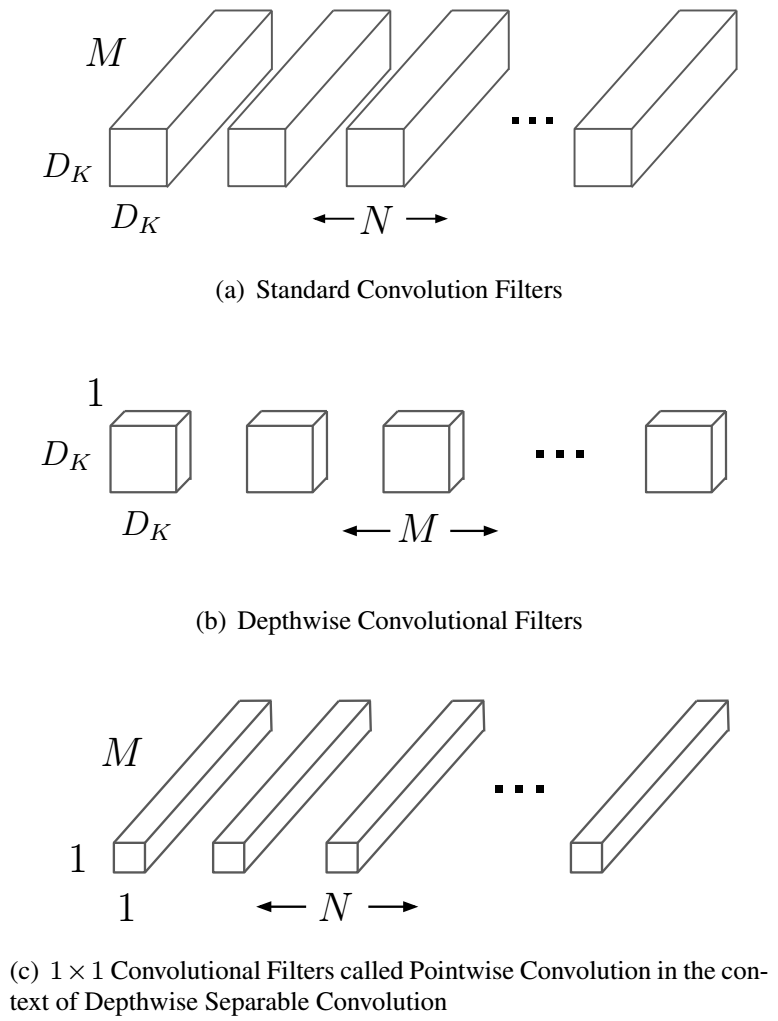

Depthwise Separable Convolution

Depthwise separable convolutions are a form of factorized convolution: they factorize a standard convolution into a depthwise convolution and a $1 \times 1$ convolution called a pointwise convolution. In MobileNet, the depthwise convolution applies a single filter to each input channel. The pointwise convolution then applies a $1 \times 1$ convolution to combine the outputs of the depthwise convolution. The following figure illustrates the difference between standard convolution and depthwise separable convolution.
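The two steps can be sketched in plain Python. This is a minimal illustration, not the paper's implementation: it assumes stride 1 and no padding, omits the batch normalization and ReLU that follow each layer in MobileNet, and the function names and list-of-lists layout are hypothetical choices made for readability rather than speed.

```python
def depthwise_conv(x, dw_filters):
    """Apply one k x k filter to each input channel (stride 1, no padding).

    x:          list of M channels, each an H x W list of lists
    dw_filters: list of M filters, each a k x k list of lists
    """
    out = []
    for channel, kernel in zip(x, dw_filters):
        k = len(kernel)
        h_out = len(channel) - k + 1
        w_out = len(channel[0]) - k + 1
        out.append([
            [
                sum(channel[i + di][j + dj] * kernel[di][dj]
                    for di in range(k) for dj in range(k))
                for j in range(w_out)
            ]
            for i in range(h_out)
        ])
    return out


def pointwise_conv(x, pw_weights):
    """1 x 1 convolution: mix M channels into N output channels.

    pw_weights: N x M list of lists (one row of M weights per output channel)
    """
    h, w = len(x[0]), len(x[0][0])
    return [
        [
            [sum(wt * x[m][i][j] for m, wt in enumerate(row)) for j in range(w)]
            for i in range(h)
        ]
        for row in pw_weights
    ]


def depthwise_separable_conv(x, dw_filters, pw_weights):
    """Depthwise filtering per channel, then a 1 x 1 combination across channels."""
    return pointwise_conv(depthwise_conv(x, dw_filters), pw_weights)
```

Note that the depthwise step never mixes channels; all cross-channel interaction happens in the pointwise step.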

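For a $D_K \times D_K$ kernel, $M$ input channels, $N$ output channels, and a $D_F \times D_F$ feature map, the standard convolution has a computation cost of

$$D_K \cdot D_K \cdot M \cdot N \cdot D_F \cdot D_F$$

Depthwise separable convolution costs

$$D_K \cdot D_K \cdot M \cdot D_F \cdot D_F + M \cdot N \cdot D_F \cdot D_F$$

which is the sum of the depthwise and pointwise costs, a reduction by a factor of $\frac{1}{N} + \frac{1}{D_K^2}$ relative to the standard convolution.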

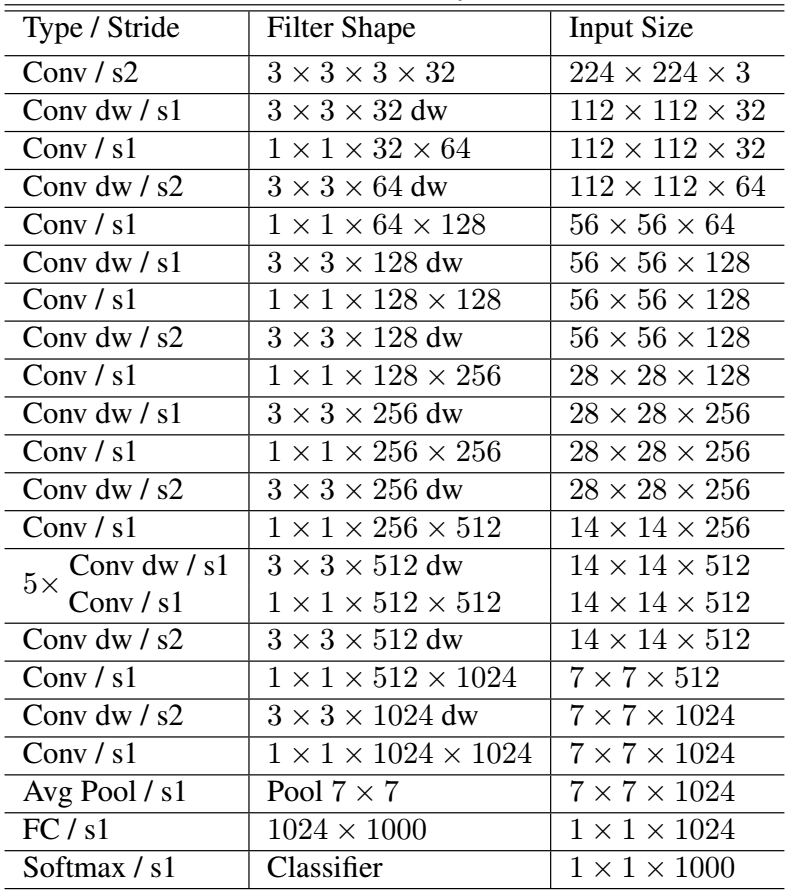
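To make the cost comparison concrete, the sketch below counts multiply-adds for both layer types using the cost formulas from the MobileNets paper. The function and parameter names are hypothetical; `d_k` is the kernel size, `m` and `n` are the input and output channel counts, and `d_f` is the spatial size of the feature map.

```python
def standard_conv_cost(d_k, m, n, d_f):
    """Multiply-adds of a standard convolution: D_K * D_K * M * N * D_F * D_F."""
    return d_k * d_k * m * n * d_f * d_f


def depthwise_separable_cost(d_k, m, n, d_f):
    """Multiply-adds of a depthwise convolution plus a 1 x 1 pointwise convolution."""
    depthwise = d_k * d_k * m * d_f * d_f   # one k x k filter per input channel
    pointwise = m * n * d_f * d_f           # 1 x 1 mixing across channels
    return depthwise + pointwise


def cost_ratio(d_k, m, n, d_f):
    """Separable cost divided by standard cost; equals 1/N + 1/D_K^2."""
    return depthwise_separable_cost(d_k, m, n, d_f) / standard_conv_cost(d_k, m, n, d_f)
```

For example, `cost_ratio(3, 512, 512, 14)` is roughly 0.113, so a $3 \times 3$ depthwise separable layer of that shape needs about 8 to 9 times fewer multiply-adds than the standard convolution.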

MobileNet Structure

The following table shows the structure of MobileNet

Width and Resolution Multiplier

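The width multiplier $\alpha \in (0, 1]$ thins the network uniformly: it scales the number of input and output channels of every layer by $\alpha$, which reduces computation and the number of parameters roughly quadratically, by about $\alpha^2$. The resolution multiplier $\rho \in (0, 1]$ scales the resolution of the input image, and with it the internal representation of every layer, which reduces computation by about $\rho^2$.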

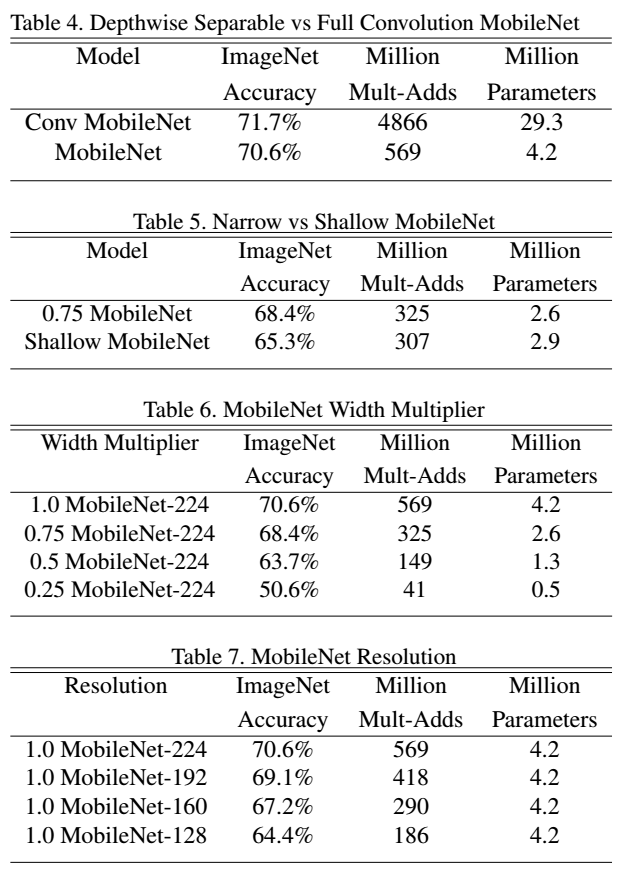
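The effect of the two multipliers on a single depthwise separable layer can be sketched as follows. The function name is hypothetical, and rounding the scaled channel counts and feature map size to integers is an assumption of this sketch; in the paper the resolution multiplier is set implicitly by choosing the input resolution.

```python
def scaled_layer_cost(d_k, m, n, d_f, alpha=1.0, rho=1.0):
    """Multiply-adds of one depthwise separable layer under both multipliers.

    alpha scales the channel counts m and n; rho scales the feature map size d_f.
    Rounding to the nearest integer is an assumption made for this sketch.
    """
    m_scaled = round(alpha * m)
    n_scaled = round(alpha * n)
    d_f_scaled = round(rho * d_f)
    depthwise = d_k * d_k * m_scaled * d_f_scaled * d_f_scaled
    pointwise = m_scaled * n_scaled * d_f_scaled * d_f_scaled
    return depthwise + pointwise
```

With `alpha=0.5` the cost of a $3 \times 3$ layer with 512 input and output channels drops to about a quarter of the baseline, because the dominant pointwise term scales with $\alpha^2$; with `rho=0.5` it drops to exactly a quarter, because both terms scale with $\rho^2$.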

Comparison