TITLE: DSOD: Learning Deeply Supervised Object Detectors from Scratch

AUTHOR: Zhiqiang Shen, Zhuang Liu, Jianguo Li, Yu-Gang Jiang, Yurong Chen, Xiangyang Xue

ASSOCIATION: Fudan University, Tsinghua University, Intel Labs China

FROM: arXiv:1708.01241

CONTRIBUTIONS

- DSOD is presented, a framework that can train object detection networks from scratch with state-of-the-art performance.

- A set of principles for designing efficient object detection networks from scratch is introduced and validated through step-by-step ablation studies.

- DSOD can achieve state-of-the-art performance on three standard benchmarks (PASCAL VOC 2007, 2012 and MS COCO datasets) with real-time processing speed and more compact models.

METHOD

The critical limitations of adopting pre-trained networks in object detection include:

- Limited structure design space. The pre-trained network models mostly come from ImageNet-based classification tasks and are usually very heavy, containing a huge number of parameters. Existing object detectors adopt the pre-trained networks directly, so there is little flexibility to control or adjust the network structure. The computing resources required are also dictated by the heavy network structures.

- Learning bias. Both the loss functions and the category distributions differ between classification and detection tasks, which leads to different searching/optimization spaces. Learning may therefore be biased towards a local minimum that is not the best for the detection task.

- Domain mismatch. Fine-tuning is known to mitigate the gap caused by different target category distributions. However, the gap remains a severe problem when the source domain (ImageNet) has a huge mismatch with the target domain, such as depth images or medical images.

DSOD Architecture

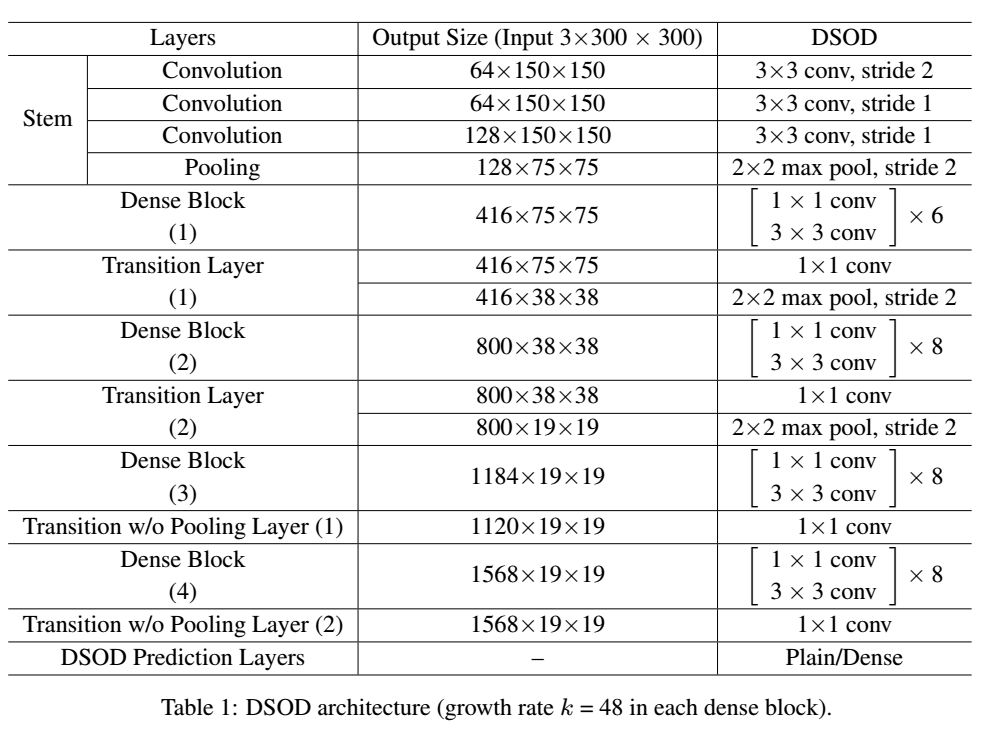

The following table shows the architecture of DSOD.

The proposed DSOD method is a multi-scale proposal-free detection framework similar to SSD. The design principles are as follows:

- Proposal-free. The authors observe that only proposal-free methods such as SSD and YOLO can converge successfully without pre-trained models. This may be because the RoI pooling layer in proposal-based methods hinders the gradients from being smoothly back-propagated from the region level to the convolutional feature maps.

- Deep Supervision. The central idea is to provide an integrated objective function as direct supervision to the earlier hidden layers, rather than only at the output layer. These “companion” or “auxiliary” objective functions at multiple hidden layers can mitigate the “vanishing” gradients problem.

- Stem Block. Motivated by Inception-v3 and v4, the authors define the stem block as a stack of three $3 \times 3$ convolution layers followed by a $2 \times 2$ max pooling layer. This simple stem structure can reduce the information loss from raw input images compared with the original design in DenseNet.

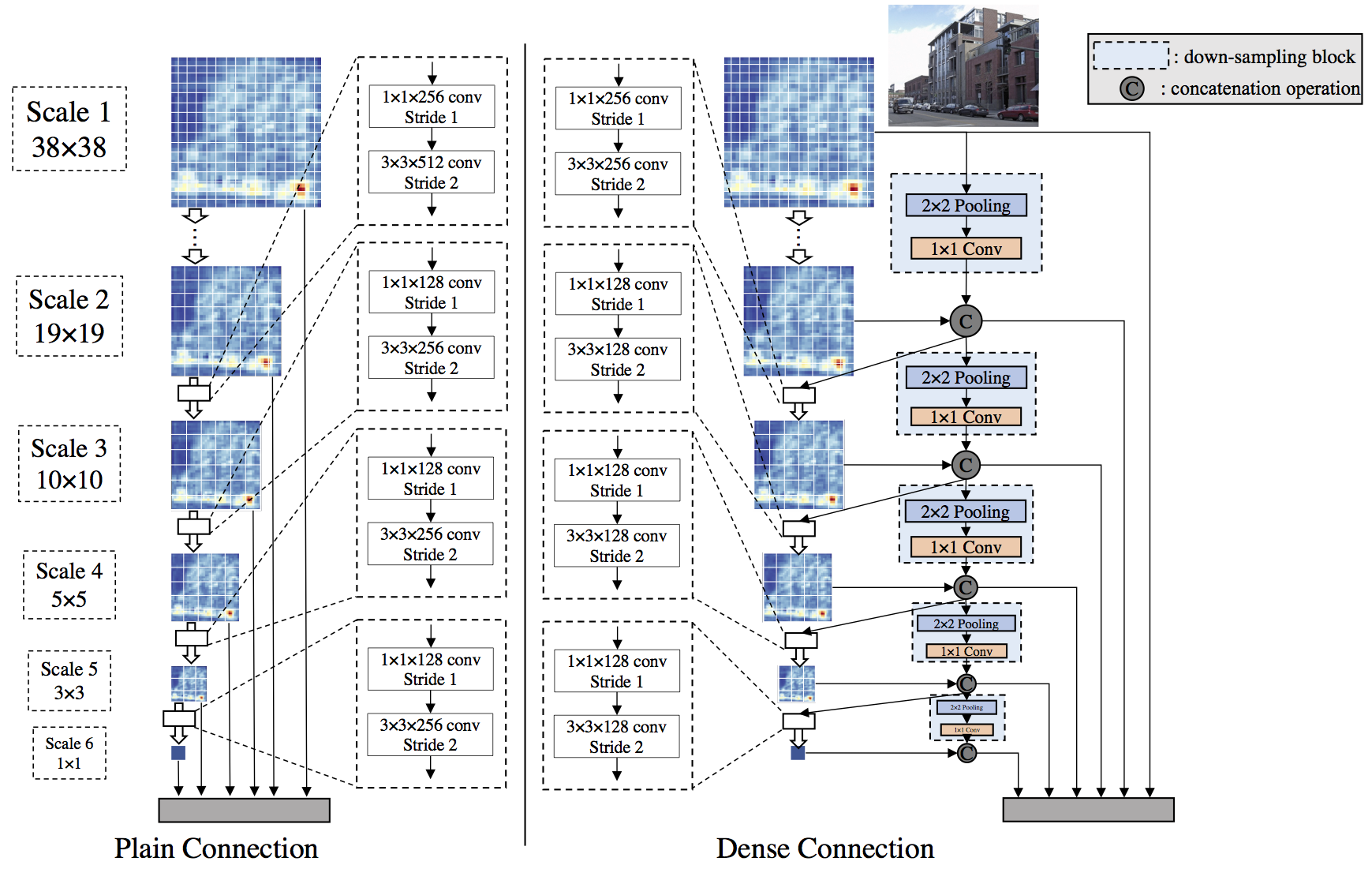

- Dense Prediction Structure. The following figure illustrates the comparison of the plain structure (as in SSD) and the proposed dense structure in the front-end sub-network. The dense structure for prediction fuses multi-scale information for each scale. Each scale outputs the same number of channels for the prediction feature maps. In DSOD, in each scale (except scale 1), half of the feature maps are learned from the previous scale with a series of conv-layers, while the remaining half of the feature maps are directly down-sampled from the contiguous high-resolution feature maps.
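Two of the principles above can be illustrated with a small, dependency-free sketch: the spatial arithmetic of the stem block, and the half-learned / half-down-sampled split of the dense prediction structure. It is not the authors' implementation. The strides `(2, 1, 1)`, the padding of 1, the use of average pooling as a stand-in for the learned conv-layers, and all function names are assumptions made for illustration only.

```python
def conv_out(size, kernel, stride, pad):
    """Spatial output size of a conv or pooling layer (floor mode)."""
    return (size + 2 * pad - kernel) // stride + 1


def stem_output_size(size, strides=(2, 1, 1)):
    """Three 3x3 convs (padding 1) followed by a 2x2, stride-2 max pool.

    The strides and padding are assumptions; the notes only fix kernel sizes.
    """
    for s in strides:
        size = conv_out(size, kernel=3, stride=s, pad=1)
    return conv_out(size, kernel=2, stride=2, pad=0)


def pool_2x2(fmap, reduce):
    """Down-sample one 2-D map (list of rows) over 2x2 windows, stride 2."""
    h, w = len(fmap), len(fmap[0])
    return [
        [reduce([fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1]])
         for j in range(0, w - 1, 2)]
        for i in range(0, h - 1, 2)
    ]


def mean(values):
    return sum(values) / len(values)


def next_scale(prev_maps, out_channels):
    """Build one prediction scale from the previous, higher-resolution scale."""
    half = out_channels // 2
    n = len(prev_maps)
    # Hypothetical stand-in for the half that is learned by conv-layers.
    learned = [pool_2x2(prev_maps[c % n], mean) for c in range(half)]
    # The other half is directly down-sampled (max pooling here).
    reused = [pool_2x2(prev_maps[c % n], max) for c in range(out_channels - half)]
    return learned + reused


# Toy input: 4 channels of 4x4 values, where value = channel + row + column.
scale1 = [[[float(c + i + j) for j in range(4)] for i in range(4)] for c in range(4)]
scale2 = next_scale(scale1, out_channels=4)
print(stem_output_size(300))
print(len(scale2), len(scale2[0]), len(scale2[0][0]))
```

Under these assumptions a 300-pixel input side leaves the stem at 75 pixels, and every scale keeps the same channel count while its resolution halves.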

Ablation Study

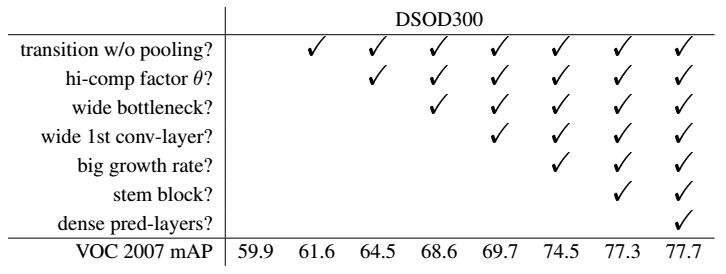

The following table shows the effectiveness of various designs on VOC 2007 test set.

- transition w/o pooling: a transition layer without pooling, which increases the number of dense blocks without reducing the final feature map resolution.

- hi-comp factor: the compression factor in the transition layers, which controls how much the number of feature maps is reduced.

- wide bottleneck: more channels in the bottleneck layers.

- wide 1st conv-layer: more channels are kept in the first conv-layer.

- big growth rate: the growth rate is the DenseNet parameter that sets how many channels each layer adds to the dense block; a bigger value widens the block.
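The channel bookkeeping behind the growth rate and the compression factor can be sketched as follows. The formulas follow the DenseNet definitions (each layer appends `growth_rate` feature maps; a transition layer keeps the floor of `compression` times its input channels). The function names and the concrete numbers in the usage lines are illustrative assumptions, not values taken from the ablation table.

```python
import math


def dense_block_channels(in_channels, num_layers, growth_rate):
    """Each layer in a dense block appends `growth_rate` new feature maps."""
    return in_channels + num_layers * growth_rate


def transition_channels(in_channels, compression):
    """A transition layer keeps floor(compression * in_channels) feature maps."""
    return math.floor(compression * in_channels)


# Illustrative numbers only: 128 input channels, 6 layers.
small = dense_block_channels(128, num_layers=6, growth_rate=16)
big = dense_block_channels(128, num_layers=6, growth_rate=48)
print(small, big)

# A compression factor of 1 keeps every feature map; 0.5 keeps half.
print(transition_channels(big, 1), transition_channels(big, 0.5))
```

A larger growth rate widens every dense block, and a compression factor closer to 1 lets more of those channels survive each transition layer, which is why both settings raise the parameter count.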

Some Ideas

If Faster R-CNN is trained with non-approximate joint training, it may also be possible to train that detector from scratch.