TITLE: Be Your Own Prada: Fashion Synthesis with Structural Coherence

AUTHOR: Shizhan Zhu, Sanja Fidler, Raquel Urtasun, Dahua Lin, Chen Change Loy

ASSOCIATION: The Chinese University of Hong Kong, University of Toronto, Vector Institute, Uber Advanced Technologies Group

FROM: ICCV2017

CONTRIBUTION

A method is developed that renders new outfits, described in a sentence, onto existing photos of people, so that it can

- retain the body shape and pose of the wearer,

- produce regions and the associated textures that conform to the language description,

- enforce coherent visibility of body parts.

METHOD

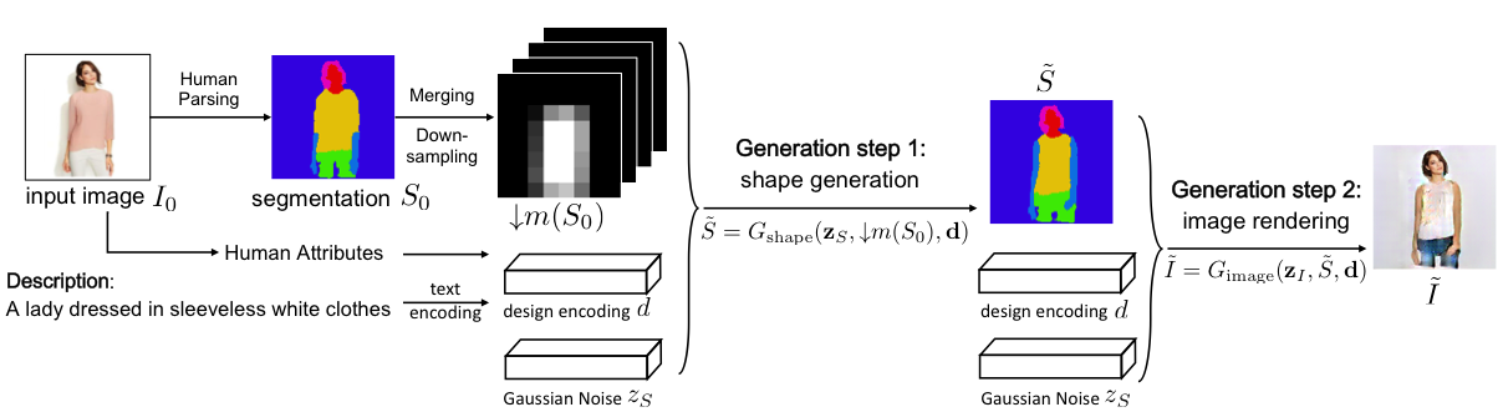

Given an input photograph of a person and a sentence describing a new desired outfit, the model first generates a segmentation map $\tilde{S}$ using the generator of the first GAN. The new image is then rendered by a second GAN, guided by the segmentation map generated in the previous step. At test time, the final rendered image is obtained with a single forward pass through the two GAN generators. The overall workflow is shown in the figure above.
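The test-time data flow can be sketched as a simple composition of two functions. This is a minimal illustration, not the authors' code: `synthesize`, `toy_g_shape`, `toy_g_image` and `PALETTE` are hypothetical names, the two generators are replaced by trivial stand-ins, and the design code and noise vectors are assumed to be given (encoding the sentence into a design code is not shown). The only point it captures is the ordering: the second generator consumes the first generator's segmentation map, not the original one.

```python
from typing import Callable, List, Sequence, Tuple

LabelMap = List[List[int]]                   # H x W grid of class ids
Image = List[List[Tuple[int, int, int]]]     # H x W grid of RGB pixels

def synthesize(
    constraint: LabelMap,
    design_code: Sequence[float],
    z_s: Sequence[float],
    z_i: Sequence[float],
    g_shape: Callable[[LabelMap, Sequence[float], Sequence[float]], LabelMap],
    g_image: Callable[[LabelMap, Sequence[float], Sequence[float]], Image],
) -> Image:
    """Hypothetical test-time pass: one forward call per generator, in order."""
    # Stage 1: propose the new segmentation map S~ from the body constraint.
    s_tilde = g_shape(constraint, design_code, z_s)
    # Stage 2: render the final image I~, guided by S~ (not by the original map).
    return g_image(s_tilde, design_code, z_i)

def toy_g_shape(constraint: LabelMap, d: Sequence[float], z: Sequence[float]) -> LabelMap:
    # Hypothetical stand-in for a trained generator: returns a copy of its input.
    return [row[:] for row in constraint]

PALETTE = {0: (0, 0, 0), 1: (255, 0, 0), 2: (0, 255, 0)}

def toy_g_image(s: LabelMap, d: Sequence[float], z: Sequence[float]) -> Image:
    # Hypothetical stand-in: paints every label with a fixed colour.
    return [[PALETTE[label] for label in row] for row in s]

img = synthesize([[0, 1], [2, 1]], [0.5], [0.1], [0.2], toy_g_shape, toy_g_image)
print(img)  # [[(0, 0, 0), (255, 0, 0)], [(0, 255, 0), (255, 0, 0)]]
```

Passing the generators in as arguments keeps the sketch agnostic to how they are implemented; in the real model they would be trained networks.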

The first generator $G_{shape}$ aims to generate the desired semantic segmentation map by conditioning on the spatial constraint $\downarrow m(S_0)$, the design coding $\textbf{d}$, and the Gaussian noise $\textbf{z}_S$. Here $S_0$ is the segmentation map of the original image, with height $m$, width $n$, and $L$ channels, where $L$ is the number of labels. The operation $\downarrow m(S_0)$ downsamples and merges $S_0$ so that it is agnostic to the clothing worn in the original image and only captures information about the user's body. Thus $G_{shape}$ can generate a segmentation map $\tilde{S}$ with sleeves from a segmentation map $S_0$ without sleeves.
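The effect of the merge-and-downsample operation can be illustrated on a toy label map. This is a sketch under stated assumptions, not the paper's definition: the fine label ids, the merge table `MERGE` (keeping background, hair and face, and collapsing all clothing and limb labels into one hypothetical `BODY` class), the factor `k`, and majority-vote downsampling are all illustrative choices. What it demonstrates is the property the text relies on: a sleeveless and a sleeved person with the same silhouette yield the same constraint.

```python
from collections import Counter
from typing import Dict, List

LabelMap = List[List[int]]

# Hypothetical fine-grained labels for S_0 (not the paper's exact label set).
BACKGROUND, HAIR, FACE, UPPER, PANTS, ARMS, LEGS, SHOES = range(8)

# Hypothetical merge m(.): keep background, hair and face; map every
# clothing or limb label to a single body class so clothing is hidden.
BODY = 3
MERGE: Dict[int, int] = {
    BACKGROUND: 0, HAIR: 1, FACE: 2,
    UPPER: BODY, PANTS: BODY, ARMS: BODY, LEGS: BODY, SHOES: BODY,
}

def merge_labels(s0: LabelMap) -> LabelMap:
    return [[MERGE[v] for v in row] for row in s0]

def downsample(s: LabelMap, k: int) -> LabelMap:
    """Majority vote over non-overlapping k x k blocks; ties go to the smaller id.
    Trailing rows/columns that do not fill a whole block are dropped."""
    h, w = len(s), len(s[0])
    out = []
    for i in range(0, h - h % k, k):
        row = []
        for j in range(0, w - w % k, k):
            votes = Counter(s[i + a][j + b] for a in range(k) for b in range(k))
            row.append(min(votes, key=lambda lab: (-votes[lab], lab)))
        out.append(row)
    return out

def spatial_constraint(s0: LabelMap, k: int = 2) -> LabelMap:
    """Sketch of the merge-then-downsample constraint on S_0."""
    return downsample(merge_labels(s0), k)

# Same silhouette: bare arms in one map, sleeves (upper clothes) in the other.
sleeveless = [
    [BACKGROUND, BACKGROUND, HAIR, HAIR],
    [BACKGROUND, BACKGROUND, HAIR, HAIR],
    [ARMS, UPPER, UPPER, ARMS],
    [ARMS, UPPER, UPPER, ARMS],
]
sleeved = [
    [BACKGROUND, BACKGROUND, HAIR, HAIR],
    [BACKGROUND, BACKGROUND, HAIR, HAIR],
    [UPPER, UPPER, UPPER, UPPER],
    [UPPER, UPPER, UPPER, UPPER],
]
print(spatial_constraint(sleeveless))  # [[0, 1], [3, 3]]
print(spatial_constraint(sleeved))     # [[0, 1], [3, 3]]
```

Because both inputs collapse to the same constraint, a generator conditioned only on it cannot copy the original sleeves and must take them from the design coding instead.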

The second generator $G_{image}$ renders the final image $\tilde{I}$ based on the generated segmentation map $\tilde{S}$, design coding $\textbf{d}$, and the Gaussian noise $\textbf{z}_I$.
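One common way to feed a label map and global vectors to a convolutional generator is to one-hot encode the map into $L$ channels and tile the concatenation of $\textbf{d}$ and $\textbf{z}_I$ at every pixel. The sketch below shows only that generic conditioning pattern; it is an assumption for illustration, not a description of how $G_{image}$ actually combines its inputs, and `one_hot_map` and `build_image_generator_input` are hypothetical names.

```python
from typing import List, Sequence

Tensor3 = List[List[List[float]]]  # H x W x C, as nested lists

def one_hot_map(s: List[List[int]], num_labels: int) -> Tensor3:
    """Turn an H x W label map into H x W vectors with num_labels channels."""
    for row in s:
        for v in row:
            if not 0 <= v < num_labels:
                raise ValueError(f"label {v} outside [0, {num_labels})")
    return [[[1.0 if c == v else 0.0 for c in range(num_labels)] for v in row] for row in s]

def build_image_generator_input(
    s_tilde: List[List[int]],
    num_labels: int,
    d: Sequence[float],
    z_i: Sequence[float],
) -> Tensor3:
    """Hypothetical input assembly: per pixel, concat one-hot(S~) with [d; z_I]."""
    code = list(d) + list(z_i)  # one global vector, shared by every pixel
    return [[pix + code for pix in row] for row in one_hot_map(s_tilde, num_labels)]

x = build_image_generator_input([[0, 2]], num_labels=3, d=[0.5, -0.5], z_i=[0.1])
print(x)  # [[[1.0, 0.0, 0.0, 0.5, -0.5, 0.1], [0.0, 0.0, 1.0, 0.5, -0.5, 0.1]]]
```

Each pixel ends up with $L + |\textbf{d}| + |\textbf{z}_I|$ channels, so the spatial layout comes entirely from $\tilde{S}$ while the outfit description and noise are the same everywhere.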