TITLE: Object Detection from Video Tubelets with Convolutional Neural Networks

AUTHORS: Kai Kang, Wanli Ouyang, Hongsheng Li, Xiaogang Wang

AFFILIATION: The Chinese University of Hong Kong

FROM: arXiv:1604.04053

CONTRIBUTIONS

- A complete multi-stage framework is proposed for object detection in videos.

- A special temporal convolutional neural network is proposed to incorporate temporal information into object detection from video.

METHOD

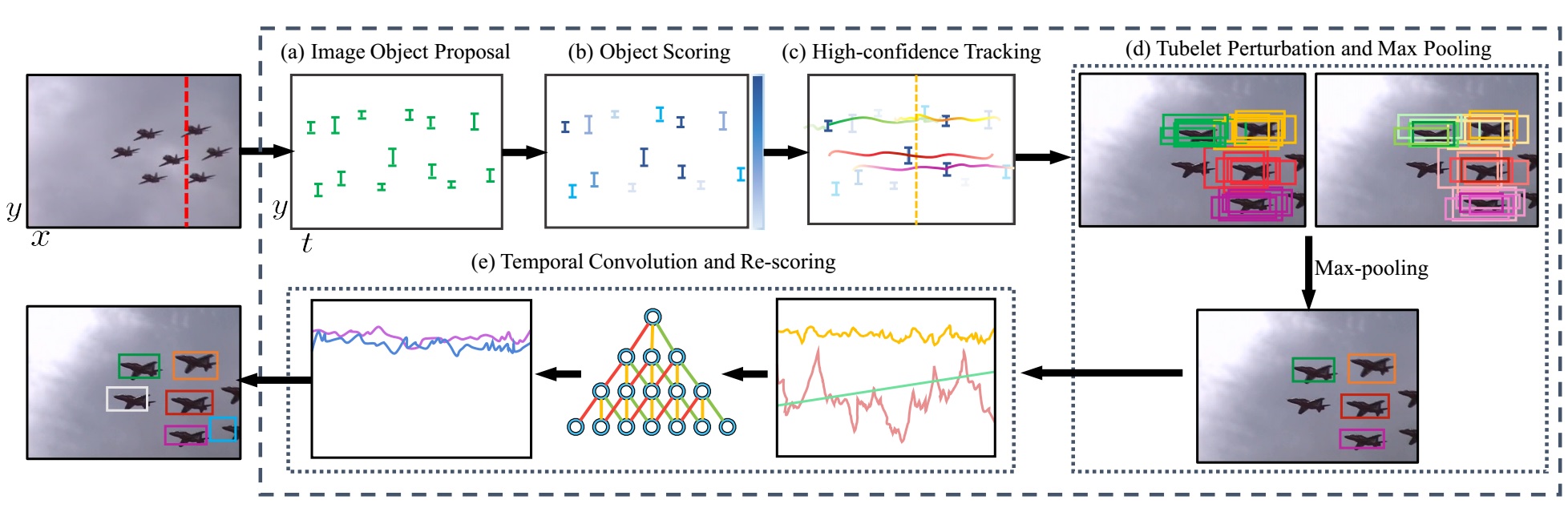

The main steps of the method are shown in the figure above.

- Image object proposal. Regions are generated in each frame by Selective Search and classified by an AlexNet over 200 categories, similar to R-CNN. Regions with scores below a threshold are removed, and the rest are kept as proposals.

- Object proposal scoring. The proposals are scored by a 30-category classifier derived from GoogLeNet, and the proposals with higher scores are kept.

- High-confidence proposal tracking. The proposals with higher scores are tracked, and overlapping proposals are suppressed using IoU. The resulting tracks are the tubelet proposals.

- Tubelet box perturbation and max-pooling. As the tracking result may drift, multiple regions are generated around each tubelet box. All of these regions are scored by the CNN from step 2, and the highest-scoring region replaces the original box in the tubelet.

- Temporal convolution and re-scoring. A Temporal Convolutional Network (TCN) is proposed that takes 1-D sequential features, including detection scores, tracking scores, and anchor offsets, and generates temporally dense predictions for every tubelet box. Tubelets with high detection scores are regarded as detection results. However, the TCN is not explained in much detail in this work.
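
Steps 3 and 4 above both rest on simple box operations, which can be sketched as follows. This is only a minimal illustration, not the authors' implementation: the function names, the `(x1, y1, x2, y2)` box format, the IoU threshold of 0.5, the jitter range, and the `score_fn` callback (standing in for the step-2 CNN) are all assumptions made for the example.

```python
import random

def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def suppress_overlaps(boxes, scores, iou_thresh=0.5):
    """Greedy suppression: visit boxes from highest to lowest score and keep
    a box only if it does not overlap an already kept box too much.
    Returns the indices of the kept boxes. The threshold is an assumption."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_thresh for j in keep):
            keep.append(i)
    return keep

def perturb(box, n=8, jitter=0.1, rng=None):
    """Generate n randomly shifted copies of a box (hypothetical scheme).
    Each coordinate moves by at most `jitter` times the box width or height.
    The original box is always included as the first candidate."""
    rng = rng or random.Random(0)
    w, h = box[2] - box[0], box[3] - box[1]
    candidates = [box]
    for _ in range(n):
        candidates.append((
            box[0] + rng.uniform(-jitter, jitter) * w,
            box[1] + rng.uniform(-jitter, jitter) * h,
            box[2] + rng.uniform(-jitter, jitter) * w,
            box[3] + rng.uniform(-jitter, jitter) * h,
        ))
    return candidates

def max_pool_box(box, score_fn, n=8, jitter=0.1, rng=None):
    """Replace a tubelet box with its highest-scoring perturbed candidate.
    `score_fn` stands in for the detection CNN and maps a box to a score."""
    return max(perturb(box, n=n, jitter=jitter, rng=rng), key=score_fn)
```

For instance, with a toy `score_fn` such as `lambda b: iou(b, target)` for some reference box `target`, `max_pool_box` never returns a box that scores lower than the original, because the original is always among the candidates.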

ADVANTAGES

- The TCN helps reduce the negative effect of large variations in detection scores along the same track.
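
To give a rough intuition for why temporal context dampens score fluctuations, the toy sketch below runs a fixed averaging kernel over the per-frame scores of one tubelet. This is a stand-in only: the TCN in the paper is a trained network over several input features, whereas the kernel, the edge padding, and the function name here are assumptions chosen for illustration.

```python
def temporal_conv1d(scores, kernel):
    """Slide an odd-length kernel over a score sequence along time.
    Edges are padded by repeating the first and last score so the output
    has the same length as the input. The kernel is applied without
    flipping, which makes no difference for a symmetric kernel."""
    if len(kernel) % 2 == 0:
        raise ValueError("kernel length must be odd")
    half = len(kernel) // 2
    padded = [scores[0]] * half + list(scores) + [scores[-1]] * half
    return [
        sum(k * padded[t + i] for i, k in enumerate(kernel))
        for t in range(len(scores))
    ]

# Per-frame detection scores of one tubelet with a sudden drop in frame 1.
raw = [0.9, 0.2, 0.9, 0.8, 0.9]
smoothed = temporal_conv1d(raw, [1 / 3, 1 / 3, 1 / 3])
```

The spread between the highest and lowest score is smaller in `smoothed` than in `raw`, so a single badly scored frame no longer dominates the track. A learned network can go beyond plain averaging, but the mechanism of mixing information from neighboring frames is the same.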

DISADVANTAGES

- Too many stages.

- Too many CNN operations.