Miscellaneous [20161129]

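That Spring Festival, on a sudden impulse, I actually worked up the courage to start visiting the homes of my childhood playmates, one after another.

Some were already married; holding a child, one of them told me about the takings at the beef stall he ran at the night market. Some had become fishermen; one kept unconsciously backing away as he talked to me, and then asked: “I’m not stinking you out, am I?” Some had opened garment factories and become bosses; over dinner one kept pressing me to drink Moutai aged for however many years, then, reeking of liquor, grabbed hold of me and bellowed: “We’re brothers, aren’t we? If we’re brothers, don’t look down on me for being crude, and I won’t look down on you for being poor. Let’s drink...”

Only then did I understand that the suggestion I made to Wenzhan in that letter, “we childhood playmates really should get together,” was a naive one. Everyone is already living a different life, and those different lives mean that many of us can no longer share a common state in this time and place. Unless we wait until we have all grown old, and old age once again wipes away everything else and becomes the mark that matters most for each of us; perhaps then the reunion can really happen.

-- Cai Chongda, 《皮囊》

It feels as if everyone else has grown up and only I am still living in the old days, assuming my friends are the same as when we were kids. Reading this passage, I suddenly remembered an awkward episode. A primary-school playmate, A, and I went on to different middle schools. At a get-together in our second year of middle school, I cracked a joke with A that we used to make all the time in primary school, but the old reaction never came. Looking back now, each of us had already become different that long ago.

Now, only those peers who have stayed in touch all the way from childhood and have had similar educational experiences remain close. As the one-child generation, perhaps each of us is a little withdrawn, while at the same time longing for a bunch of brothers and sisters. We are like the six in Friends (《老友记》): pillars for one another among people our own age, getting together to have fun, vent, and share the amusing bits of our lives. Yet gradually, just as when the show ended, once everyone has started a family it seems the time has come to drift apart a little. Nobody wants to go out for skewers on the spur of the moment any more, perhaps because they don’t want to intrude on someone else’s life, perhaps because they feel they shouldn’t just walk out on the family like that. Maybe this is why everyone feels that we have changed.

Before I knew it I had bought ten books, on all sorts of subjects. I’m looking forward to finishing them all soon.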

Reading Note: Fully Convolutional Instance-aware Semantic Segmentation

TITLE: Fully Convolutional Instance-aware Semantic Segmentation

AUTHOR: Yi Li, Haozhi Qi, Jifeng Dai, Xiangyang Ji, Yichen Wei

ASSOCIATION: Microsoft Research Asia, Tsinghua University

FROM: arXiv:1611.07709

CONTRIBUTIONS

An end-to-end fully convolutional approach for instance-aware semantic segmentation is proposed. The underlying convolutional representation and the score maps are fully shared for the mask prediction and classification sub-tasks, via a novel joint formulation with no extra parameters. The network structure is highly integrated and efficient. The per-ROI computation is simple, fast, and does not involve any warping or resizing operations.

METHOD

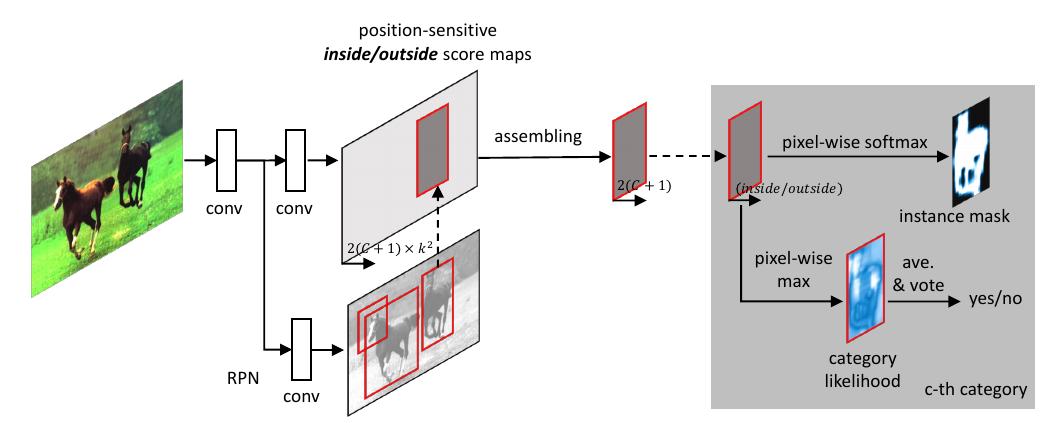

The proposed method is highly related with a previous work or R-FCN. The following figure gives an illustration:

Unlike R-FCN, this work predicts two sets of score maps, the ROI inside map and the ROI outside map. The two score maps jointly account for the mask prediction and classification sub-tasks. For mask prediction, a softmax operation produces the per-pixel foreground probability. For mask classification, a max operation produces the per-pixel likelihood of “belonging to the object category”.
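The way one pair of score maps serves both sub-tasks can be sketched in a few lines of NumPy. This is only an illustration, assuming the inside and outside maps have already been assembled for one ROI and one category; the function name `fcis_roi_outputs` and the array shapes are hypothetical and not taken from the paper's code.

```python
import numpy as np

def fcis_roi_outputs(inside, outside):
    """inside, outside: (H, W) score maps for one ROI and one category (assumed layout)."""
    # Mask prediction: per-pixel softmax over the (inside, outside) pair.
    m = np.maximum(inside, outside)            # subtract the max for numerical stability
    e_in = np.exp(inside - m)
    e_out = np.exp(outside - m)
    fg_prob = e_in / (e_in + e_out)            # per-pixel foreground probability
    # Classification: per-pixel max over the same pair of scores.
    cls_likelihood = np.maximum(inside, outside)
    return fg_prob, cls_likelihood
```

A pixel with a high inside score and a low outside score is foreground; a pixel where both scores are low counts as evidence against the category, which is why the same two maps can be reused for both outputs.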

For an input image, the 300 ROIs with the highest scores are generated by the RPN. They pass through the bbox regression branch and give rise to another 300 ROIs. For each ROI, its classification scores and foreground masks (as probabilities) are predicted for all categories. NMS with an IoU threshold is used to filter out highly overlapping ROIs. Each remaining ROI is assigned the category with the highest classification score, and its foreground mask is obtained by mask voting: for the ROI under consideration, the ROIs (from the 600) whose IoU with it is higher than 0.5 are found, and their foreground masks for that category are averaged on a per-pixel basis, weighted by their classification scores. The averaged mask is binarized as the output.
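The mask voting step can be sketched as follows. This is a simplified illustration, not the authors' implementation: it assumes every ROI mask has already been pasted onto a common image-sized canvas, that the kept ROI is itself among the candidates (so at least one voter exists), and that the final binarization threshold is 0.5, which the text above does not specify.

```python
import numpy as np

def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)

def mask_voting(kept_box, boxes, scores, masks, iou_thresh=0.5, bin_thresh=0.5):
    """masks: (N, H, W) foreground probabilities on a shared canvas (assumption)."""
    masks = np.asarray(masks, dtype=float)
    # ROIs that overlap the kept ROI strongly enough get a vote.
    voters = [i for i, b in enumerate(boxes) if box_iou(kept_box, b) > iou_thresh]
    weights = np.array([scores[i] for i in voters], dtype=float)
    # Per-pixel average of the voters' masks, weighted by classification score.
    averaged = np.tensordot(weights, masks[voters], axes=1) / weights.sum()
    return averaged >= bin_thresh              # bin_thresh is a hypothetical value
```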

ADVANTAGES

- End-to-end training and testing keep the system simple.

- It builds on the idea of R-FCN, whose efficiency has already been demonstrated.

Miscellaneous [20161126]

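In this world there is no ending like the ones in novels or fairy tales: “And they lived happily ever after.”

There is no pure happiness in this world. Happiness always comes mixed with worry and anxiety.

Nor is there any forever in this world. Our lives were full of hardship, and only in our old age did we have a place to settle down. But with age and illness pressing in on us, we had reached the end of life’s road.

-- Yang Jiang, 《我们仨》

This is part of the last passage of the book. It is hard to say the book is full of happiness: because two of the three have passed away, I always feel a certain sadness. Yet I also have to say that every time I read about the delightful interactions among the three, the corners of my mouth turn up slightly. When I deeply love a story, I wish it would keep going; it does not need a happy ending, I just want it to continue. So perhaps I am someone who chases forever, someone afraid of growing old and afraid of dying.

Our lives were originally so light; it is this flesh and the murk of all our desires that drag them down. A-Tai, I remember. “The body is there to be used, not to be waited on.”

-- Cai Chongda, 《皮囊》

I don’t know where “joy” really comes from. Perhaps, just as “existence” in philosophy is divided into “material existence” and “spiritual existence”, “joy” also comes as “material joy” and “spiritual joy”: a big meal and a painting I am pleased with can both make me happy. But isn’t there a saying that happiness comes from dopamine? If that is true, then “spiritual joy” ultimately comes down to “material joy” as well. So I think spiritual attainment can never be separated from the body. Even though so many eminent Buddhist monks have grasped the dharma and realized the truth through meditation, I still feel that what they attained is not the real truth, because religion elevates the spirit too much; correspondingly, science focuses too much on observation. Both are endless approximations of the truth, and only if the two could truly be unified might that be the truth.

A colleague’s invitation: those of us who showed up for work on time on the first day after the Spring Festival would have a celebratory meal together. In that noisy restaurant everyone told their stories of going home for the festival: the ticket bought after two days of queuing, the strangeness and unfamiliarity once back, the disappointment and distance of not being able to talk with their parents... Then someone proposed a toast to the faraway hometowns we all share.

I raised my glass, thinking to myself: do whatever it takes to make yourselves happy, you homeless wandering ghosts.

-- Cai Chongda, 《皮囊》

If that is what makes a wandering ghost, then I guess I count as one, and this world is full of them.

My enthusiasm for reading has suddenly shot up lately, so while the motivation lasts I bought nearly three hundred yuan worth of leisure books. I especially like reading other people’s stories. The same goes for films: I am especially fond of stories about a person growing up, and even if the story is plain and uneventful, I come away feeling I have gained another kind of experience. Thoughts come up as I read, so I am jotting them down here.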

Reading Note: Densely Connected Convolutional Networks

TITLE: Densely Connected Convolutional Networks

AUTHOR: Gao Huang, Zhuang Liu, Kilian Q. Weinberger

ASSOCIATION: Cornell University, Tsinghua University

FROM: arXiv:1608.06993

CONTRIBUTIONS

Dense Convolutional Network (DenseNet) is proposed, which embraces the observation that networks can be substantially deeper, more accurate and efficient to train if they contain shorter connections between layers close to the input and those close to the output.

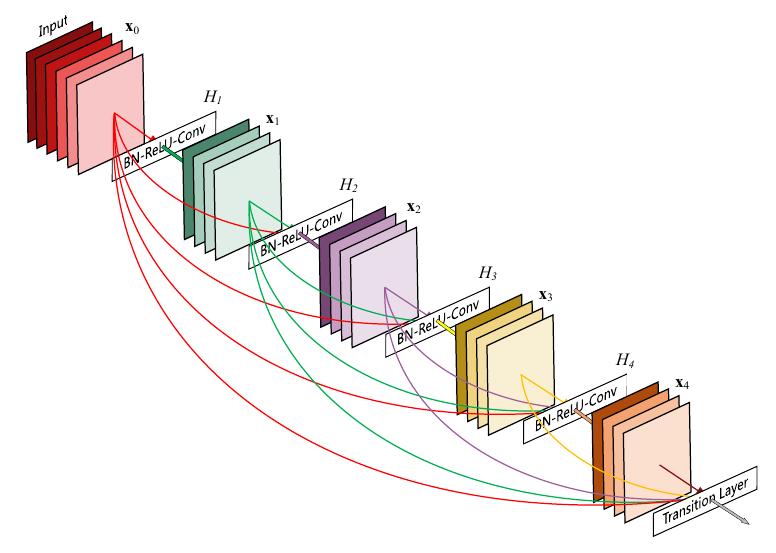

METHOD

DenseNet is a network architecture where each layer is directly connected to every other layer in a feed-forward fashion (within each dense block). For each layer, the feature maps of all preceding layers are treated as separate inputs whereas its own feature maps are passed on as inputs to all subsequent layers. The idea can be illustrated as the following figure:
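The connectivity inside one dense block can be sketched with NumPy. This is a toy illustration under stated assumptions: `make_layer` is a hypothetical stand-in (a random 1x1 convolution followed by ReLU) for the real layers, and the channel counts are arbitrary. Only the concatenation pattern is the point.

```python
import numpy as np

def dense_block(x, layers):
    """x: (C, H, W) input; layers: callables mapping (C_in, H, W) -> (k, H, W)."""
    features = [x]
    for layer in layers:
        # Each layer sees the feature maps of ALL preceding layers.
        inp = np.concatenate(features, axis=0)
        features.append(layer(inp))
    # The block output carries every feature map produced so far.
    return np.concatenate(features, axis=0)

def make_layer(c_in, growth, rng):
    """Hypothetical layer: random 1x1 convolution followed by ReLU."""
    w = rng.standard_normal((growth, c_in))
    return lambda t: np.maximum(np.einsum('oc,chw->ohw', w, t), 0.0)

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8, 8))
growth = 3
# Layer i receives 4 + i * growth input channels because of the concatenation.
layers = [make_layer(4 + i * growth, growth, rng) for i in range(3)]
out = dense_block(x, layers)
print(out.shape)
```

Because every layer adds only `growth` new channels, the input width of later layers grows linearly with depth, which is also where the memory cost of the many concatenations comes from.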

SOME IDEAS

In Understanding intermediate layers using linear classifier probes by Alain and Bengio, the authors claim that the raw input is helpful at the beginning of network training. So maybe the dense connections play a similar role in this work.

Implementing DenseNet in Caffe requires a lot of memory because of the large number of split layers.

Reading Note: LCNN: Lookup-based Convolutional Neural Network

TITLE: LCNN: Lookup-based Convolutional Neural Network

AUTHOR: Hessam Bagherinezhad, Mohammad Rastegari, Ali Farhadi

ASSOCIATION: University of Washington, Allen Institute for AI

FROM: arXiv:1611.06473

CONTRIBUTIONS

LCNN, a lookup-based convolutional neural network, is introduced. It encodes convolutions by a few lookups to a dictionary that is trained to cover the space of weights in CNNs.

METHOD

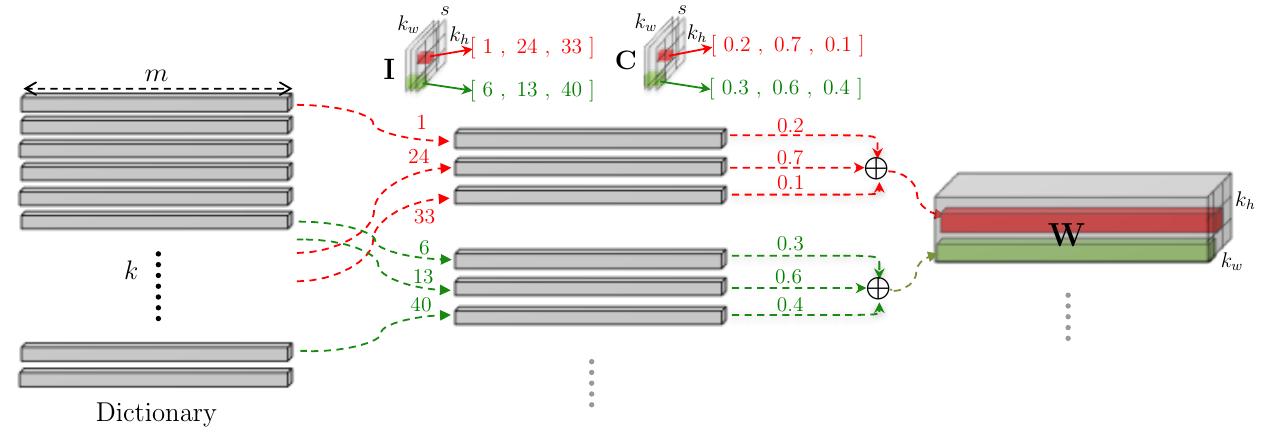

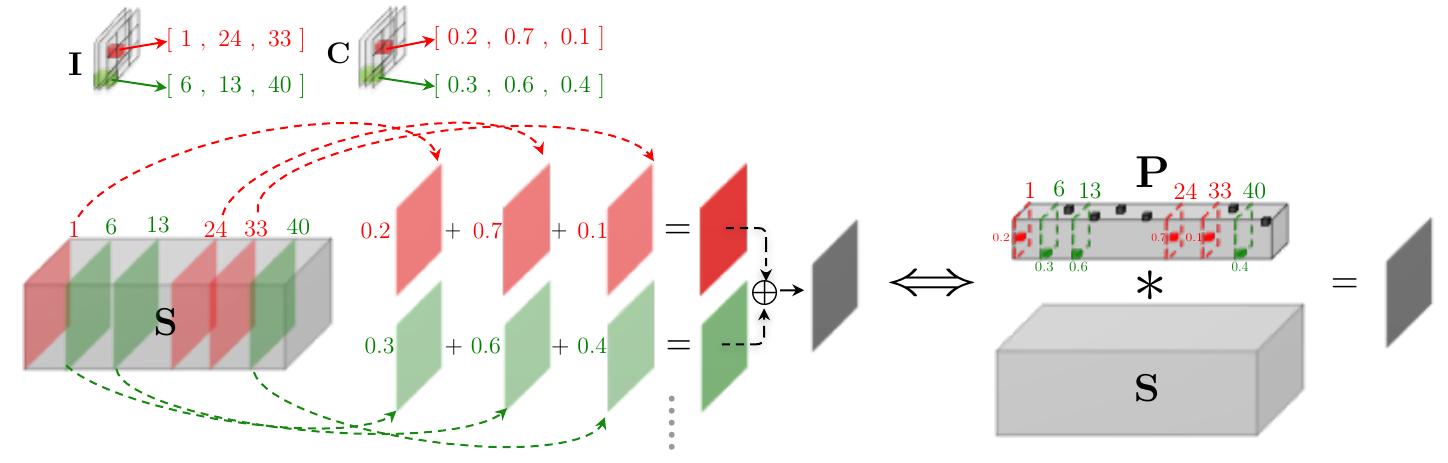

The main idea of the work is decoding the weights of the convolutional layer using a dictionary $D$ and two tensors, $I$ and $C$, like the following figure illustrated.

where $k$ is the size of the dictionary $D$, $m$ is the size of input channel. The weight tensor can be constructed by the linear combination of $S$ words in dictionary $D$ as follows:

where $S$ is the size of number of components in the linear combinations. Then the convolution can be computed fast using a shared dictionary. we can convolve the input with all of the dictionary vectors, and then compute the output according to $I$ and $C$. Since the dictionary $D$ is shared among all weight filters in a layer, we can precompute the convolution between the input tensor $\textbf{X}$ and all the dictionary vectors. Given $\textbf{S}$ which is defined as:

the convolution operation can be computed as

where $\textbf{P}$ can be expressed by $I$ and $C$:

The idea can be illustrated in the following figure:

thus the the dictionary and the lookup parameters can be trained jointly.
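The equivalence between the direct convolution and the lookup-based one can be checked numerically. The sketch below is a toy illustration for a single filter, assuming the shapes $D$: `(k, m)`, $I$ and $C$: `(s, kh, kw)`, and input $\textbf{X}$: `(m, h, w)`. The helper names and the naive loop-based convolution are my own, not the paper's code.

```python
import numpy as np

def build_weight(D, I, C):
    """W[:, r, c] = sum_t C[t, r, c] * D[I[t, r, c], :], shape (m, kh, kw)."""
    s, kh, kw = I.shape
    W = np.zeros((D.shape[1], kh, kw))
    for t in range(s):
        for r in range(kh):
            for c in range(kw):
                W[:, r, c] += C[t, r, c] * D[I[t, r, c]]
    return W

def build_P(k, I, C):
    """Sparse lookup tensor P, shape (k, kh, kw): P[I[t,r,c], r, c] += C[t,r,c]."""
    s, kh, kw = I.shape
    P = np.zeros((k, kh, kw))
    for t in range(s):
        for r in range(kh):
            for c in range(kw):
                P[I[t, r, c], r, c] += C[t, r, c]
    return P

def conv_valid(X, W):
    """Naive 'valid' cross-correlation of X (ch, h, w) with one filter W (ch, kh, kw)."""
    _, kh, kw = W.shape
    oh, ow = X.shape[1] - kh + 1, X.shape[2] - kw + 1
    out = np.zeros((oh, ow))
    for r in range(kh):
        for c in range(kw):
            out += np.einsum('chw,c->hw', X[:, r:r + oh, c:c + ow], W[:, r, c])
    return out

rng = np.random.default_rng(0)
k, m, s = 6, 4, 2                               # dictionary size, input channels, components
D = rng.standard_normal((k, m))
I = rng.integers(0, k, size=(s, 3, 3))          # which dictionary words to look up
C = rng.standard_normal((s, 3, 3))              # their coefficients
X = rng.standard_normal((m, 8, 8))

direct = conv_valid(X, build_weight(D, I, C))   # X * W with the reconstructed filter
S = np.einsum('km,mhw->khw', D, X)              # X convolved with every dictionary vector (1x1)
lookup = conv_valid(S, build_P(k, I, C))        # S * P
print(np.allclose(direct, lookup))
```

The dictionary responses `S` are computed once per layer and shared by all filters; each filter then only needs its own sparse `P`, which is where the speed-up is expected to come from.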

ADVANTAGES

- It speeds up inference.

- Few-shot learning. The shared dictionary in LCNN allows a neural network to learn from very few training examples on novel categories.

- LCNN needs fewer iterations to train.

DISADVANTAGES

- Accuracy is hurt because the weights are only approximated.

Miscellaneous [20161122]

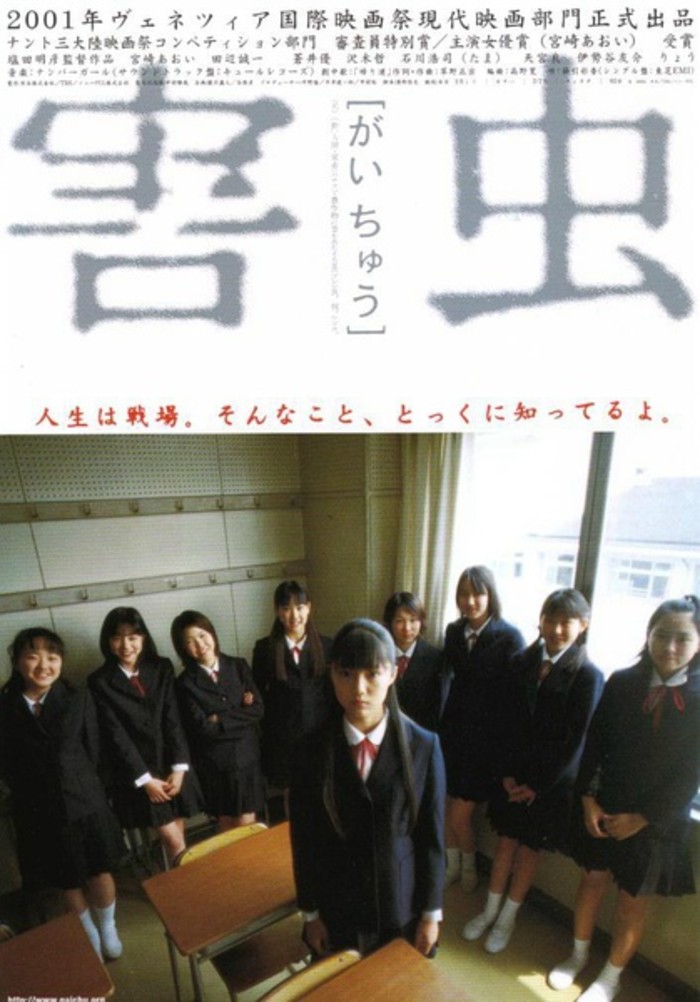

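I watched a particularly bleak Japanese film, Harmful Insect (《害虫》), directed by Akihiko Shiota and starring Aoi Miyazaki. Miyazaki, 16 at the time, won Best Actress at the 2001 Festival of the Three Continents in Nantes, France, for this film.

The protagonist is Sachiko, a girl on the margins. At 13 she is in a romantic relationship with Ogata, her homeroom teacher from primary school, while her mother pays her no attention at all. To escape the finger-pointing of her classmates, Sachiko skips school day after day and kills time wandering the streets, where she befriends a homeless man. Later she goes back to school, and although life seems to be improving, she is nearly raped by her mother’s lover. All this drives her out of school again, and she sets off for the power plant where Ogata works to find her former lover. But she waits a long time in the little teahouse where they agreed to meet, and Ogata does not appear. Disheartened, Sachiko leaves with a young man who has come over to chat her up. In the car park she sees Ogata hurrying toward her, but she gets into the stranger’s car anyway.

It is hard to imagine that Aoi Miyazaki already had such impressive acting skills at sixteen. I would like to think that Aoi, who started out as a child actor, had a very happy childhood herself, and yet here she is in a film this suffocating. There is very little dialogue in the whole film, and at times it almost feels like a silent movie, yet through her precise performance Aoi perfectly conveys the inner world of a marginalized girl: despair, repression, rebellion, a longing to be loved...

Some of the contrasts in the film offer a glimmer of hope and, at the same time, are unbearably painful.

Sachiko and the homeless man kick a can down the main road, and her laughter is full of the purity and carefreeness a young girl ought to have. Yet all this happens on a deserted road: the other students are at school, while Sachiko is with a homeless man. The cheerful laughter and the silence of the empty road set each other off, the joy and the desolation.

After returning to school, Sachiko plays the piano accompaniment for her class chorus at the school festival and even wins the affection of a boy. When they gently kiss, we believe she is starting to lower her guard and is ready to return to the life a middle-school girl should have; her eyes begin to soften. But when rumors of the attempted rape spread through the school and the boy confronts her, Sachiko is surprisingly calm: she simply takes a classroom chair and knocks a row of desks and chairs out of line, and her eyes are now filled with endless coldness.

Sachiko’s brightest smile comes when she hands Molotov cocktails to the mentally disturbed homeless man. It is hard to say whether this is a psychologically twisted smile, but it is enough to melt anyone’s heart. In an instant the smile is swallowed by terror, and she keeps backing away until she is out of frame.

The film is so oppressive that I felt awful for a whole day afterwards. I kept wondering what happened to Sachiko later, what Japanese society was like at the time, and why it would leave a middle-school girl in such a hopeless situation. I even want to find a book on Japanese history to look into these questions. Or find another Aoi Miyazaki film, one with plenty of her smiles, to lift my mood.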

Reading Note: Fused DNN: A deep neural network fusion approach to fast and robust pedestrian detection

TITLE: Fused DNN: A deep neural network fusion approach to fast and robust pedestrian detection

AUTHOR: Xianzhi Du, Mostafa El-Khamy, Jungwon Lee, Larry S. Davis

ASSOCIATION: University of Maryland, Samsung Electronics

FROM: arXiv:1610.03466

CONTRIBUTIONS

A deep neural network fusion architecture is proposed to address the pedestrian detection problem, called Fused Deep Neural Network (F-DNN).

METHOD

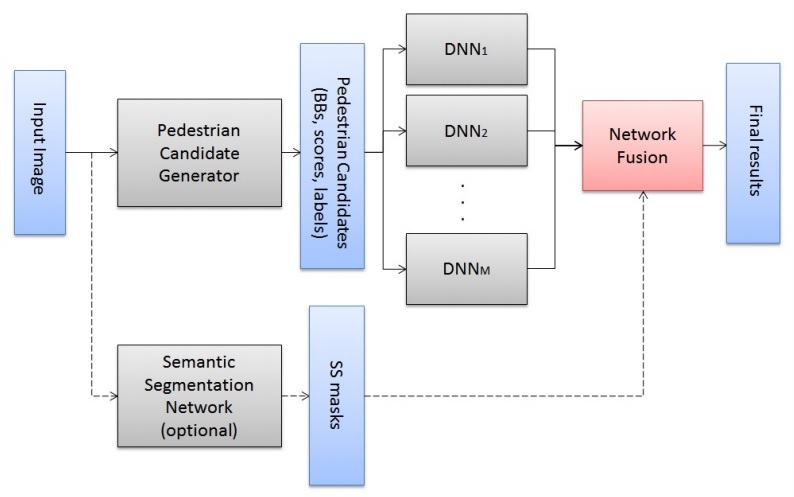

The proposed network architecture consists of a pedestrian candidate generator, a classification network, and a pixel-wise semantic segmentation network. The pipeline of the proposed network fusion architecture is shown in the following figure:

Pedestrian Candidate Generator is implemented by SSD. It provides a large pool of pedestrian candidates varying in scales and aspect ratios. Pedestrian candidates generated should cover almost all the ground truth pedestrians, even though many false positives are introduced at the same time.

Classification Network consists of multiple binary classification deep neural networks which are trained on the pedestrian candidates from Pedestrian Candidate Generator.

Soft-rejection based DNN Fusion works as follows. Consider one pedestrian candidate and one classifier. If the classifier has high confidence in the candidate, its original score from the candidate generator is boosted by multiplying it by a confidence scaling factor greater than one; otherwise, the score is decreased by a scaling factor less than one. To fuse all $M$ classifiers, the candidate’s original confidence score is multiplied by the product of the confidence scaling factors from all classifiers in the classification network:

$$S_{FDNN} = S_{SSD} \times \prod_{m=1}^{M} a_m$$

where

$$a_m = \max\left(p_m \times \frac{1}{a_c},\ b_c\right)$$

Here $p_m$ is the classification probability from the $m$-th classifier, and $a_c$ and $b_c$ are chosen as 0.7 and 0.1 by cross validation.
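The soft-rejection rule is easy to sketch in code. The version below assumes that each classifier's scaling factor takes the form `max(p / a_c, b_c)`, so a probability above `a_c` boosts the score and `b_c` acts as a floor that keeps any single classifier from zeroing a candidate. The function name and argument layout are hypothetical.

```python
def soft_rejection_fusion(ssd_score, classifier_probs, a_c=0.7, b_c=0.1):
    """Scale the candidate generator's score by one factor per classifier.

    ssd_score: confidence from the candidate generator.
    classifier_probs: pedestrian probabilities from the M classifiers.
    """
    fused = ssd_score
    for p in classifier_probs:
        # Factor > 1 when p > a_c, < 1 otherwise, and never below b_c.
        fused *= max(p / a_c, b_c)
    return fused

# One confident classifier (boost) and one that strongly disagrees (floored at b_c).
print(soft_rejection_fusion(0.5, [0.91, 0.035]))
```

Unlike a hard rejection, a candidate that one classifier dislikes is only down-weighted, so another confident classifier can still rescue it.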

Pixel-wise Semantic Segmentation Network is trained to produce a binary map. The degree to which each candidate’s bounding box (BB) overlaps with the pedestrian category in the semantic segmentation (SS) activation mask gives a measure of the confidence of the SS network in the candidate generator’s results. If the pedestrian pixels occupy at least 20% of the candidate BB area, its score is kept unaltered; otherwise, soft-rejection fusion (SNF) is applied to scale the original confidence score.
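The keep-or-scale decision for the segmentation branch can be sketched as below. Only the 20% threshold comes from the text above; the scaling rule applied below that threshold is not given here, so it is passed in as a hypothetical callable `scale_fn`. Boxes are assumed to be integer pixel coordinates `(x1, y1, x2, y2)` and the mask a 0/1 array.

```python
import numpy as np

def ss_keep_or_scale(score, box, ss_mask, scale_fn, min_fraction=0.2):
    """Keep the score if enough of the box is pedestrian pixels, else scale it."""
    x1, y1, x2, y2 = box
    region = ss_mask[y1:y2, x1:x2]
    # Fraction of the candidate box covered by pedestrian pixels.
    fraction = float(region.mean()) if region.size else 0.0
    if fraction >= min_fraction:
        return score                       # segmentation agrees: score unchanged
    return score * scale_fn(fraction)      # hypothetical soft-rejection factor
```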

SOME IDEAS

The idea of the work is simple. It reads as a very trick-heavy implementation of pedestrian detection. Though the authors claim that it is efficient, it is hard to say how efficient it really is given the very complex CNN classifiers it uses.

Miscellaneous [20161120]

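This weekend’s theme was Kasumi Arimura and Aoi Miyazaki. I watched Arimura in Flying Colors (《垫底辣妹》), I Am a Hero (《请叫我英雄》) and Strobe Edge (《闪烁的爱情》), and Miyazaki in If Cats Disappeared from the World (《如果猫从世界上消失了》), The Great Passage (《编舟记》), Yellow Elephant (《黄色大象》), My SO Has Got Depression (《丈夫得了抑郁症》) and The Shonen Merikensack (《少年手指虎》). I also rewatched NANA.

Kasumi Arimura is one of Japan’s new generation of actresses, born in 1993. I first heard of her on a film review show, where the host was full of praise for her. I did not go out of my way to follow her, though, so it was not until this weekend that I watched Flying Colors. In this film Arimura starts out as a gyaru and ends as the girl next door. At first she made no particular impression on me, but then I realized she is an actress with very sweet looks, very much to my taste. I then looked up her other films; perhaps because she is so young, they are mostly schoolgirl roles, and I hope she gets a wider range in the future.

Aoi Miyazaki I know much better. I think I first came across her in high school. Chronologically I should have seen NANA first, yet I always feel I first knew her from Virgin Snow (《初雪》), in which she starred with Lee Joon-gi. She is not the kind of actress who stuns you at first sight; she looks better the more you watch her, her smile is very infectious, and she has a strong mori-girl air. Having her as the face of earth music&ecology could not be more fitting. Later I watched the taiga drama Atsuhime (《笃姬》). I don’t know whether it is because she keeps playing characters with similar personalities, but in any case she is an actress with a built-in aura of cuteness. She always seems to be in a permanent girlhood, so it is hard to picture that in 2007, the year Virgin Snow was released, she married Sosuke Takaoka. Up to their divorce in 2011, the roles she played still felt like girlish ones. Perhaps the mature Aoi Miyazaki is something like her character in My SO Has Got Depression, released the same year as the divorce: grown-up, but still with that girlish cuteness. All of this is hard to say, though. She has not signed up to any social media platform, so almost nothing of her private life is visible, and what she is really like can only be glimpsed through the comments of people who have worked with her:

- 《平成电影的日本女优》: Aoi Miyazaki has a pure, delicate beauty and an innocent charm, can carry complex inner drama, and is a gifted actress of great range.

- Ethan Laundry: She is also a beautiful, elegant woman.

- Yu Aoi: She always looks so happy. She laughs a lot in real life too, and her laughter is contagious.

- Mika Nakashima: As an actor, Aoi Miyazaki is very much my senior. I think she is amazing; I learned a lot of acting technique from her and got to see the strength of an artist quite different from myself.

- Lee Joon-gi: Honestly, Aoi Miyazaki is the kind of actress who gets so deep into a role that I wondered whether she really liked me. When I heard the news of her marriage I was stunned, and genuinely a little down.

- Shinobu Otake: Aoi is a lovely girl who also knows her own mind, and as an actress she works extremely hard. I have seen all of it.

- Masato Sakai: Every time Aoi and I shoot together, we each hand in our own “answer”. As an actor, Aoi really is the best partner I could have. She is the kind of actress who puts you completely at ease. This time in particular I was playing someone with depression, so I had to make myself uneasy. I don’t know how to put it into words, but with Aoi I could “become uneasy” with great peace of mind. Just like three years ago when we shot Atsuhime, I once again had Aoi carrying me and holding me up. I am sure what everyone most wants to see is the moment Haruko, played by Aoi, gets over her obstacle. The story is actually nothing special; people who watch the film will feel that the actress Aoi Miyazaki is really an ordinary person just like us, and that is the most interesting part of the film. Not many actresses can pull that off.

- Toshiyuki Nishida: (During the shoot) it felt like looking forward to going to school because the girl you like is there.

- Atsushi Ito: Just like Isaku, who keeps longing for Butterfly (played by Aoi Miyazaki), Atsushi kept thinking about Aoi too.

Japanese films have a distinctive and rather peculiar quality. For me it goes like this: the pace is so slow and it looks a bit boring, but once I start I can’t stop, and before I know it a two-hour film is over. Afterwards there is a slight feeling of “I just spent two hours watching such a small story,” but the healing effect is strong. Of the films I watched this time, the most memorable were I Am a Hero, The Great Passage and My SO Has Got Depression, all of which are more or less about “one person staying true to themselves.” Also, having seen Aoi Miyazaki and Ryuhei Matsuda in NANA and then the two of them in The Great Passage, I got a subtle feeling: from NANA in 2005 to The Great Passage in 2013, it is as if reckless youths had grown into the backbone of society. A career, I suppose, should be like that of the editors compiling the Daitokai (《大渡海》): a whole life devoted to this one thing.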

Little Things [20161118]

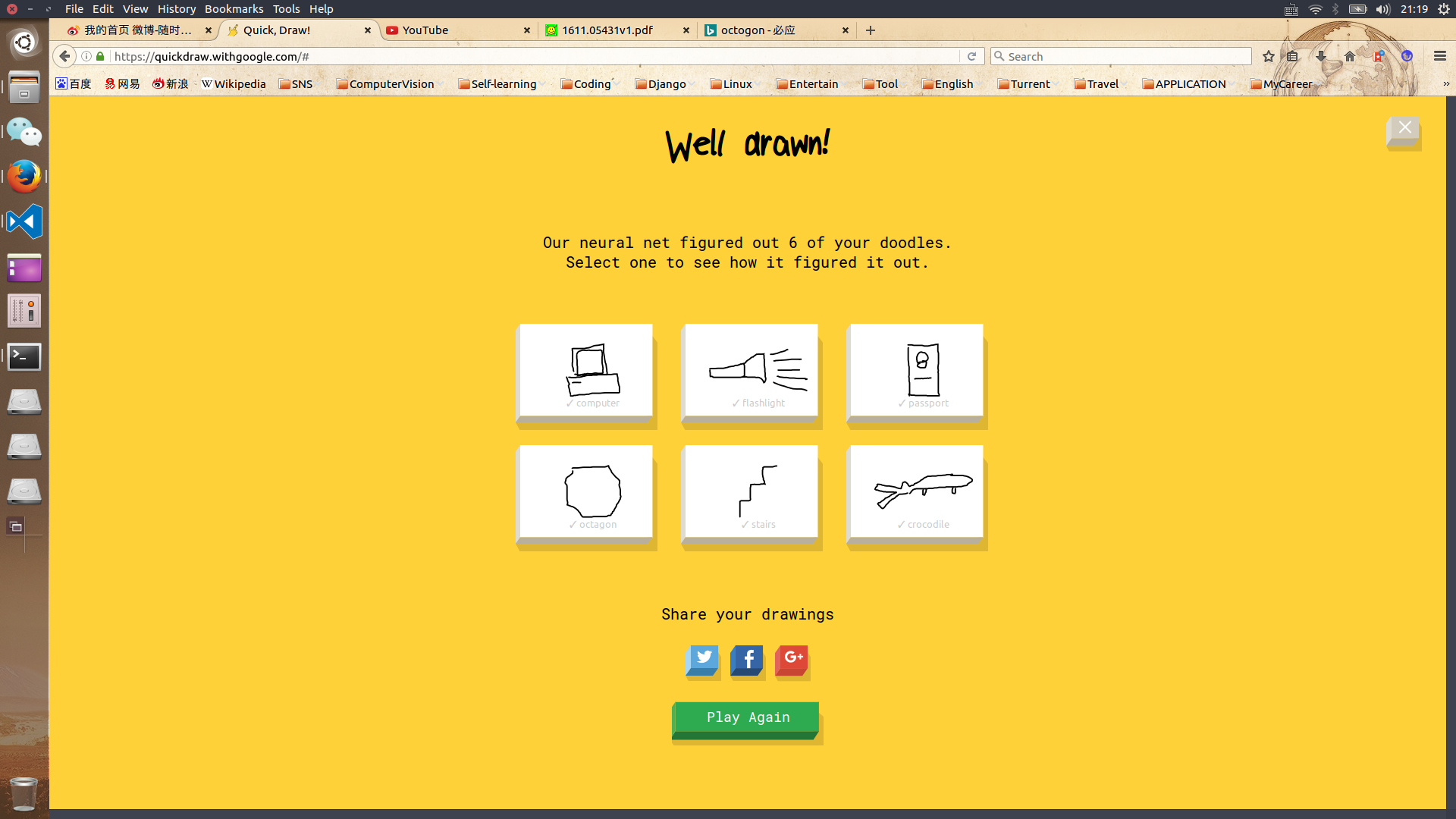

I’ve just tried a very interesting and fun website called Quick, Draw!, which is developed by Google Creative Lab and Data Arts Team. This is a game built with machine learning. The user draws, and a neural network tries to guess what the user is drawing. It is really fun.