TITLE: Tiny SSD: A Tiny Single-shot Detection Deep Convolutional Neural Network for Real-time Embedded Object Detection

AUTHOR: Alexander Wong, Mohammad Javad Shafiee, Francis Li, Brendan Chwyl

ASSOCIATION: University of Waterloo, DarwinAI

FROM: arXiv:1802.06488

CONTRIBUTION

- A single-shot detection deep convolutional neural network, Tiny SSD, is designed specifically for real-time embedded object detection.

- A non-uniform Fire module is proposed based on SqueezeNet.

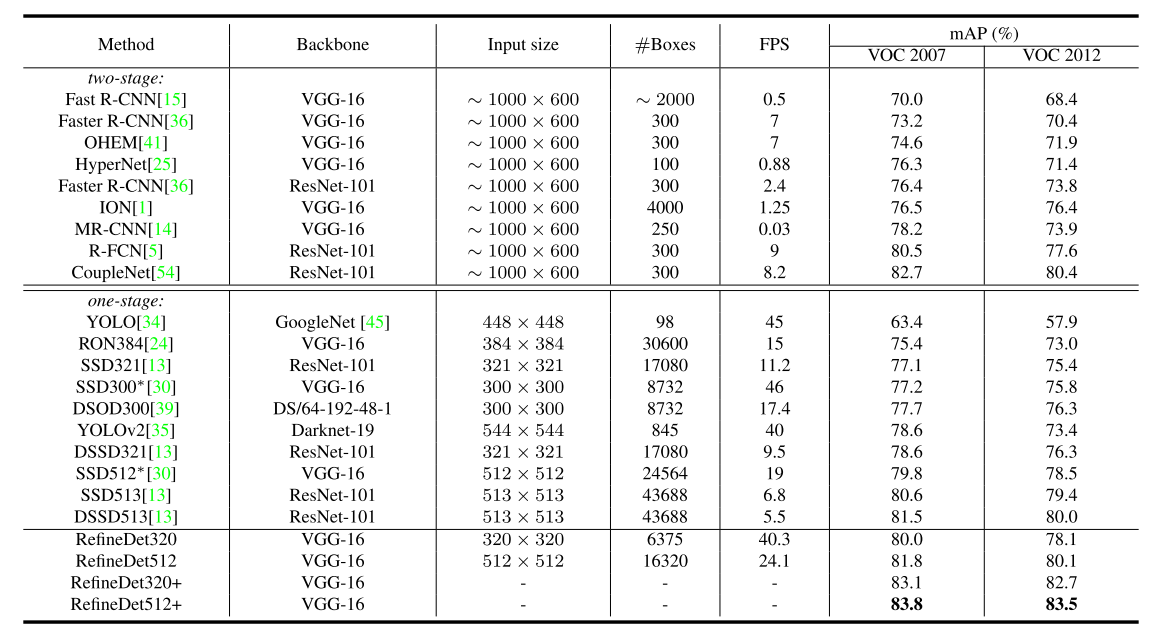

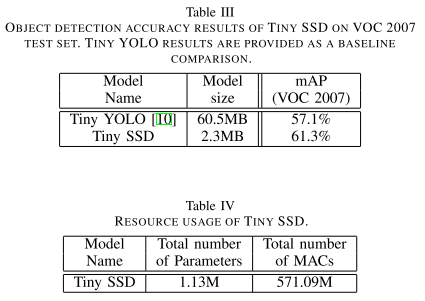

- The network achieves 61.3% mAP on the VOC2007 dataset with a model size of 2.3MB.

METHOD

DESIGN STRATEGIES

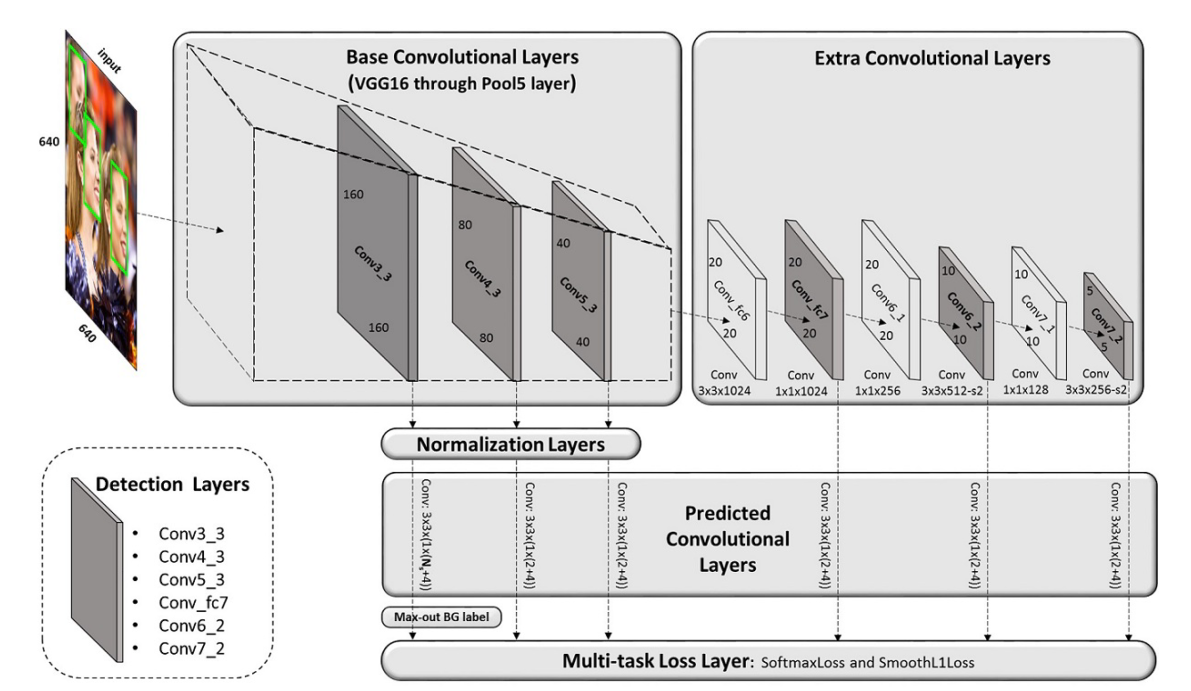

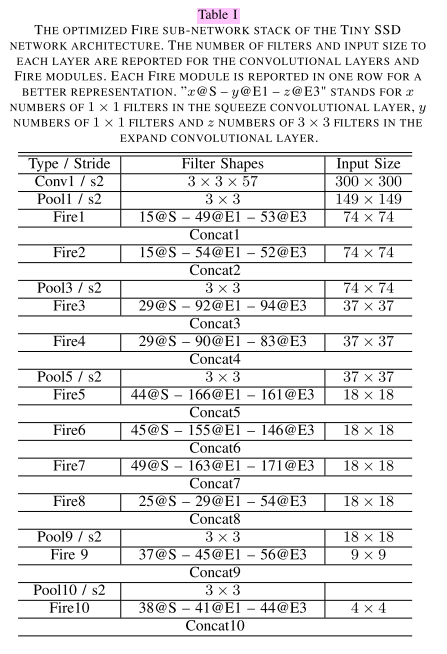

The Tiny SSD network for real-time embedded object detection is composed of two main sub-network stacks:

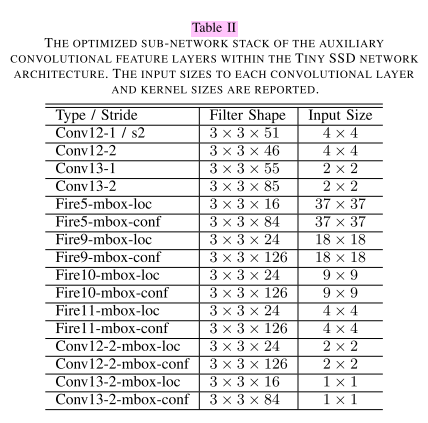

- A non-uniform Fire sub-network stack.

- A non-uniform sub-network stack of highly optimized SSD-based auxiliary convolutional feature layers.

The first sub-network stack feeds into the second. Both sub-networks need careful design to run on an embedded device. The first works as the backbone, so it directly affects object detection performance. The second has to balance detection performance against model size and inference speed.

Three key design strategies are:

- Reduce the number of $3 \times 3$ filters as much as possible.

- Reduce the number of input channels to $3 \times 3$ filters where possible.

- Perform downsampling at a later stage in the network.
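The first two strategies can be made concrete by counting parameters. The sketch below assumes the standard SqueezeNet Fire layout (a $1 \times 1$ squeeze layer followed by parallel $1 \times 1$ and $3 \times 3$ expand layers). The helper names `conv_params` and `fire_params` and the channel counts are illustrative assumptions, not the configuration used in Tiny SSD.

```python
def conv_params(in_ch, out_ch, k, bias=True):
    """Number of learnable parameters in a k x k convolution."""
    return in_ch * out_ch * k * k + (out_ch if bias else 0)


def fire_params(in_ch, squeeze, expand1x1, expand3x3):
    """Parameters in a SqueezeNet-style Fire module.

    The squeeze layer cuts the number of channels seen by the 3x3 filters
    (strategy 2), and part of the expand layer uses 1x1 filters in place
    of 3x3 filters (strategy 1).
    """
    return (
        conv_params(in_ch, squeeze, 1)          # 1x1 squeeze
        + conv_params(squeeze, expand1x1, 1)    # 1x1 expand
        + conv_params(squeeze, expand3x3, 3)    # 3x3 expand
    )


if __name__ == "__main__":
    # Illustrative channel counts only (hypothetical, not from the paper).
    plain = conv_params(128, 128, 3)            # plain 3x3 conv, 128 -> 128
    fire = fire_params(128, 16, 64, 64)         # Fire module, 128 -> 64 + 64
    print(f"plain 3x3 conv: {plain} parameters")
    print(f"fire module:    {fire} parameters")
```

With these illustrative numbers, both layers map 128 input channels to 128 output channels, but the Fire module needs roughly a twelfth of the parameters. A non-uniform stack would call `fire_params` with different squeeze and expand sizes for each module rather than following one fixed ratio.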

NETWORK STRUCTURE

PERFORMANCE

SOME THOUGHTS

The paper stores the model in half-precision floating point, which halves the model size relative to single precision. In my own experience, several approaches can help when deploying a deep learning model to embedded devices, including:

- Architecture design, as this work illustrates.

- Model compression, such as decomposition, filter pruning, and connection pruning.

- BLAS library optimization.

- Algorithm optimization. Using SSD as an example, the Prior-Box layer only needs to be computed once as long as the input image size does not change, because its output depends on the feature-map shapes rather than on the image content.
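The last point can be sketched as a cached prior-box generator. This is a minimal illustration, not the Prior-Box layer from any particular SSD implementation: the function name `prior_boxes`, the square feature map, and the single scale per feature map are simplifying assumptions.

```python
from functools import lru_cache
from itertools import product
from math import sqrt


@lru_cache(maxsize=None)
def prior_boxes(fmap_size, scale, aspect_ratios):
    """Return priors as (cx, cy, w, h) in normalized image coordinates.

    The result depends only on the feature-map size, the scale, and the
    aspect ratios, so it is cached and reused for every image of the same
    input size. `aspect_ratios` must be a tuple so the arguments are hashable.
    """
    boxes = []
    for row, col in product(range(fmap_size), repeat=2):
        cx = (col + 0.5) / fmap_size   # box center, x
        cy = (row + 0.5) / fmap_size   # box center, y
        for ratio in aspect_ratios:
            boxes.append((cx, cy, scale * sqrt(ratio), scale / sqrt(ratio)))
    return tuple(boxes)


if __name__ == "__main__":
    first = prior_boxes(4, 0.2, (1.0, 2.0, 0.5))
    second = prior_boxes(4, 0.2, (1.0, 2.0, 0.5))   # served from the cache
    print(len(first), first is second)
```

The second call returns the very same tuple object without recomputing anything, which is the saving described above. On an embedded target the priors could equally be computed offline and shipped with the model.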