The traffic jam in Beijing :(

TITLE: Deformable Part-based Fully Convolutional Network for Object Detection

AUTHOR: Taylor Mordan, Nicolas Thome, Matthieu Cord, Gilles Henaff

FROM: arXiv:1707.06175

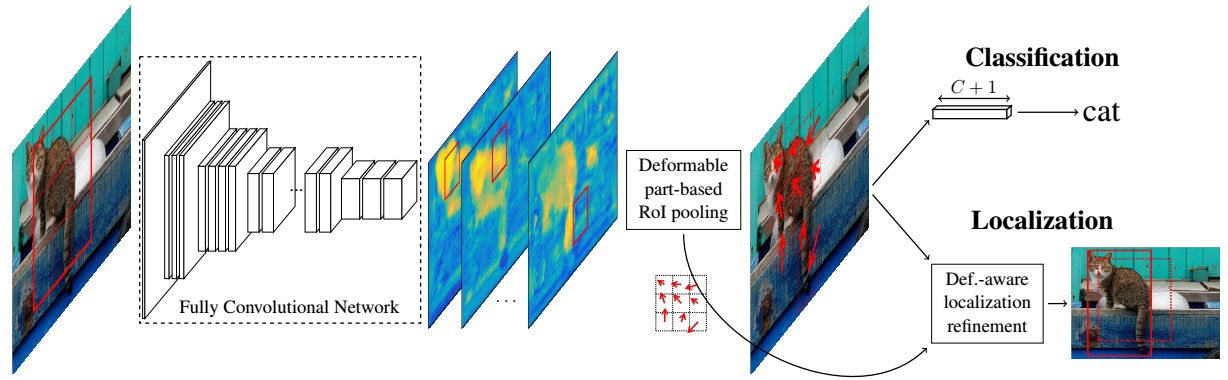

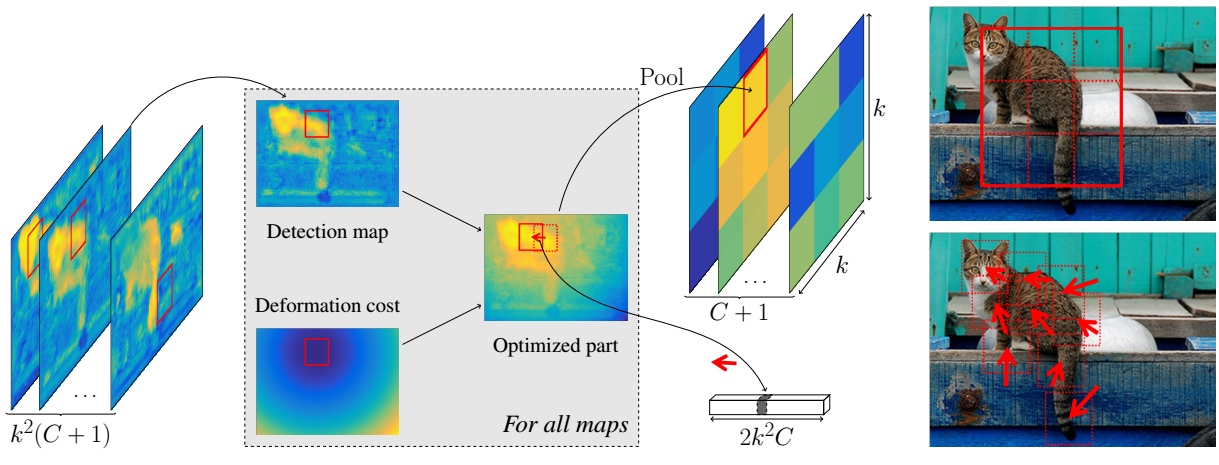

R-FCN is the work closest to DP-FCN. Both build on Faster R-CNN, in which an RPN generates object proposals and a dedicated pooling layer extracts features for classification and localization. The architecture of DP-FCN is illustrated in the following figure. A deformable part-based RoI pooling layer follows an FCN backbone, and two branches then predict the category and the location respectively. As in R-FCN, the output of the backbone FCN has $ k^2(C+1) $ channels, corresponding to $ k \times k $ parts for each of the $ C $ categories plus background.

For each input channel, a transformation similar to the distance transform used in DPM is applied to spread high responses to nearby locations, taking the deformation costs into account.
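As a rough illustration of "spreading high responses with a deformation penalty", here is a brute-force sketch of a DPM-style distance transform on a single response map. The quadratic cost with weights `wx`, `wy` and the search radius `r` are hypothetical choices for illustration; DPM computes this transform in linear time, and DP-FCN's exact cost parameterization may differ.

```python
import numpy as np

def distance_transform(resp, wx=0.1, wy=0.1, r=2):
    """Brute-force DPM-style transform (wx, wy, r are hypothetical parameters).

    out[y, x] = max over |dy|, |dx| <= r of
                resp[y + dy, x + dx] - (wx * dx**2 + wy * dy**2)
    Also returns the displacement (dy, dx) that achieved the max at each location.
    """
    h, w = resp.shape
    out = np.full((h, w), -np.inf)
    disp = np.zeros((h, w, 2), dtype=int)
    for y in range(h):
        for x in range(w):
            for dy in range(-r, r + 1):
                for dx in range(-r, r + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        # neighbouring response minus the cost of moving there
                        score = resp[yy, xx] - (wx * dx * dx + wy * dy * dy)
                        if score > out[y, x]:
                            out[y, x] = score
                            disp[y, x] = (dy, dx)
    return out, disp
```

With a single peak of 1.0 at the centre of a 5×5 map, the cell to its right receives 0.9 (the peak minus a one-step horizontal cost), so a strong response "leaks" into its neighbourhood at a price.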

In my understanding, the region proposal from the RPN plays the role of the root filter in DPM. Each proposal is evenly divided into $ k \times k $ sub-regions, which are then allowed to shift, with deformation costs taken into account. The displacements computed during the forward pass are stored and used to backpropagate gradients at the same locations.
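The part displacement described above can be sketched for a single class as follows. This is a simplified reading, not the paper's layer: average pooling inside each bin, a quadratic penalty with weight `w_def`, a shift radius `r`, integer RoI coordinates and a RoI size divisible by `k` are all assumptions, and the function name is hypothetical.

```python
import numpy as np

def deformable_part_pool(part_maps, roi, k, r=1, w_def=0.1):
    """Simplified deformable part-based RoI pooling for one class (a sketch).

    part_maps: (k, k, H, W); part_maps[i, j] is the score map of part (i, j).
    roi:       (y0, x0, y1, x1) integer box on the feature map, end-exclusive.
    Each bin may shift by up to r cells; the shift maximising
    mean score - w_def * (dy**2 + dx**2) is kept.
    """
    _, _, H, W = part_maps.shape
    y0, x0, y1, x1 = roi
    bh, bw = (y1 - y0) // k, (x1 - x0) // k  # bin size (assumes divisibility by k)
    pooled = np.full((k, k), -np.inf)
    disp = np.zeros((k, k, 2), dtype=int)
    for i in range(k):
        for j in range(k):
            for dy in range(-r, r + 1):
                for dx in range(-r, r + 1):
                    ys, xs = y0 + i * bh + dy, x0 + j * bw + dx
                    if ys < 0 or xs < 0 or ys + bh > H or xs + bw > W:
                        continue  # skip shifts that leave the feature map
                    score = part_maps[i, j, ys:ys + bh, xs:xs + bw].mean()
                    score -= w_def * (dy * dy + dx * dx)
                    if score > pooled[i, j]:
                        pooled[i, j] = score
                        disp[i, j] = (dy, dx)  # kept so gradients can flow back to the same cells
    return pooled, disp
```

The returned `disp` is what would be stored in the forward pass so the backward pass routes gradients to the shifted bins.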

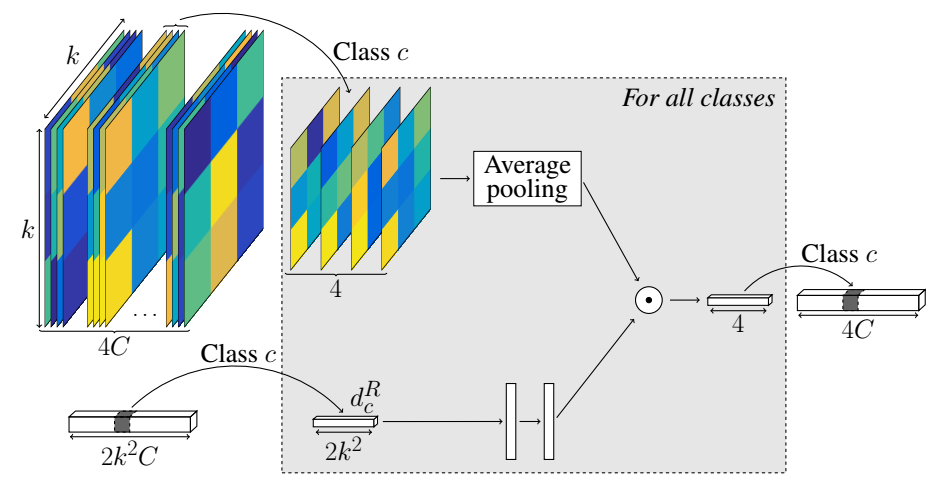

As is common practice, predictions are made by two sibling branches, one for classification and one for relocalization of the region proposals. The classification branch simply consists of an average pooling layer followed by a softmax layer.
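A minimal sketch of that classification branch, assuming the pooled part scores of one RoI arrive as a `(C+1, k, k)` array (the layout and the function name are assumptions):

```python
import numpy as np

def classify(pooled_scores):
    """pooled_scores: (C+1, k, k) part scores for one RoI, background included."""
    logits = pooled_scores.mean(axis=(1, 2))  # average pooling over the k*k parts
    logits = logits - logits.max()            # shift for numerical stability
    e = np.exp(logits)
    return e / e.sum()                        # softmax over the C+1 classes
```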

For localization, every part predicts 4 values. In addition, the part displacements are fed into two fully connected layers, and the result is multiplied element-wise with these values to yield the final localization output for the class.
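One way to picture the displacement-aware localization for a single class is the sketch below. The weight shapes, the ReLU between the two fully connected layers, and the final averaging over parts are all assumptions made to get a runnable example; the function name is hypothetical.

```python
import numpy as np

def localize(part_boxes, disp, W1, b1, W2, b2):
    """Hypothetical sketch of displacement-aware localization for one class.

    part_boxes: (k*k, 4) localization values predicted by each part.
    disp:       (k*k, 2) part displacements from the pooling step.
    W1: (2*k*k, hidden), W2: (hidden, 4*k*k): two fully connected layers (shapes assumed).
    """
    h = np.maximum(0.0, disp.reshape(-1) @ W1 + b1)  # FC 1 + ReLU (ReLU is an assumption)
    gate = (h @ W2 + b2).reshape(part_boxes.shape)   # FC 2: one factor per part value
    combined = part_boxes * gate                     # element-wise multiplication
    return combined.mean(axis=0)                     # average over parts (assumption) -> 4 values
```

With zero weights and a bias of ones, the gate is 1 everywhere and the output reduces to the plain average of the part predictions, which makes the role of the displacement path easy to isolate.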

Stay focused, go after your dreams and keep moving toward your goals.

LL Cool J

TITLE: ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices

AUTHOR: Xiangyu Zhang, Xinyu Zhou, Mengxiao Lin, Jian Sun

ASSOCIATION: Megvii Inc (Face++)

FROM: arXiv:1707.01083

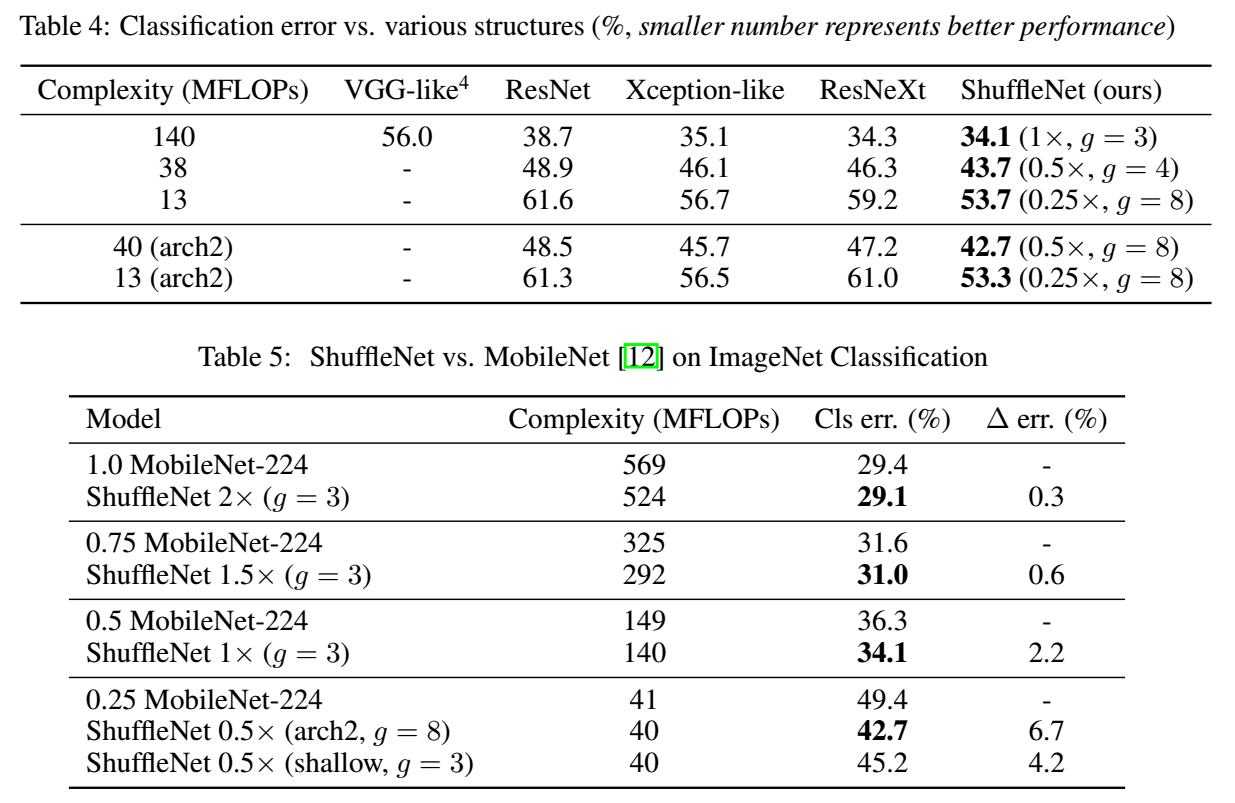

In MobileNet and other works, efficient depthwise separable convolutions or group convolutions strike an excellent trade-off between representation capability and computational cost. However, neither design fully accounts for the $ 1 \times 1 $ convolutions (also called pointwise convolutions in MobileNet), which require considerable computation.

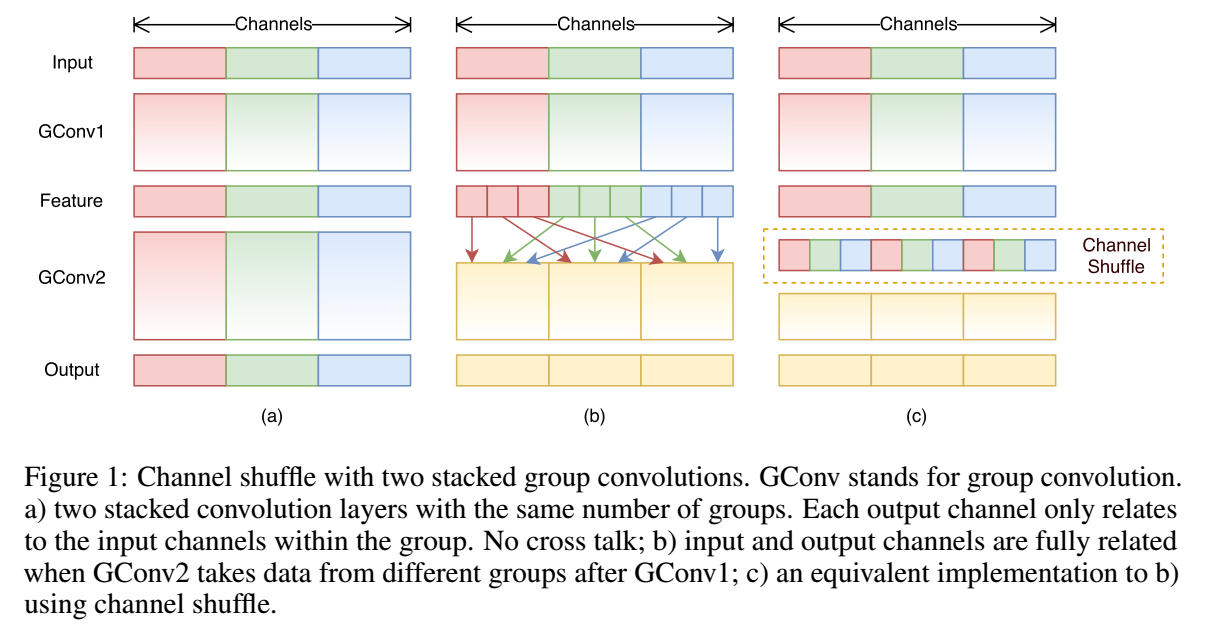

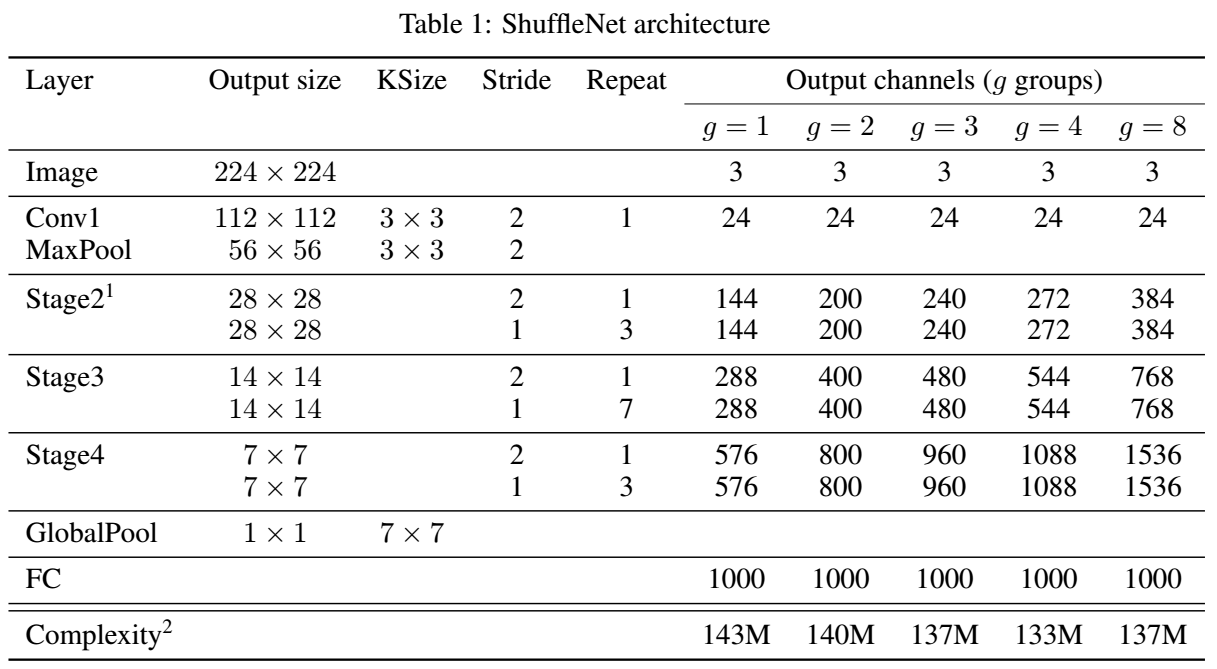

To address this issue, a straightforward solution is to apply group convolutions to the $ 1 \times 1 $ layers as well, just as MobileNet does for the $ 3 \times 3 $ layers. However, stacking multiple group convolutions has a side effect: the outputs of a given channel are derived from only a small fraction of the input channels. This blocks information flow between channel groups and weakens representation. To let a group convolution obtain input data from different groups, the channels in each group of the previous group layer's output can first be divided into several subgroups, and each group in the next layer is then fed with different subgroups. This can be implemented by reshaping the output channel dimension of the previous layer into $ (g, n) $, transposing it, and flattening it back as the input of the next layer. This is called the channel shuffle operation and is illustrated in the following figure.
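The reshape, transpose, flatten recipe translates almost directly into NumPy. This sketch assumes an `(N, C, H, W)` layout and that `C` is divisible by `g`:

```python
import numpy as np

def channel_shuffle(x, g):
    """Channel shuffle on an (N, C, H, W) array with g groups (C divisible by g)."""
    n_batch, c, h, w = x.shape
    n = c // g
    x = x.reshape(n_batch, g, n, h, w)  # split channels into (g, n)
    x = x.transpose(0, 2, 1, 3, 4)      # swap the group axis and the within-group axis
    return x.reshape(n_batch, c, h, w)  # flatten back to C channels
```

For six channels `0..5` and `g = 2`, the groups `[0, 1, 2]` and `[3, 4, 5]` come out interleaved as `[0, 3, 1, 4, 2, 5]`, so each group of the next layer sees channels from both previous groups.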

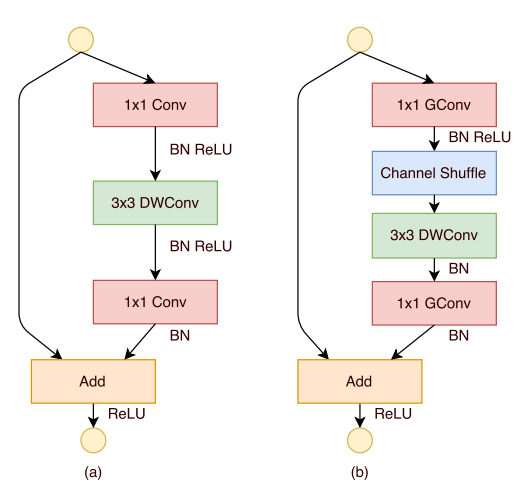

The following figure shows the ShuffleNet Unit.

In the figure, (a) is the building block of ResNeXt and (b) is the building block of ShuffleNet. Given an input of size $ c \times h \times w $ and $ m $ bottleneck channels, ResNeXt needs $ hw(2cm+9m^2/g) $ FLOPs, while ShuffleNet needs only $ hw(2cm/g+9m) $ FLOPs.
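Plugging numbers into the two formulas makes the gap concrete. The values below (`c = 240`, `m = 60`, `g = 3`, a 28×28 map) are illustrative choices, not figures taken from these notes:

```python
def resnext_flops(c, m, g, h, w):
    # two 1x1 convs (c -> m, m -> c) plus a 3x3 group conv with g groups
    return h * w * (2 * c * m + 9 * m * m / g)

def shufflenet_flops(c, m, g, h, w):
    # two 1x1 group convs plus a 3x3 depthwise conv
    return h * w * (2 * c * m / g + 9 * m)

print(resnext_flops(240, 60, 3, 28, 28))     # 31046400.0
print(shufflenet_flops(240, 60, 3, 28, 28))  # 7949760.0
```

Here the ShuffleNet unit needs roughly 3.9 times fewer FLOPs, mostly because the $ 1 \times 1 $ layers are also grouped.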

TITLE: MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications

AUTHOR: Andrew G. Howard, Menglong Zhu, Bo Chen, Dmitry Kalenichenko, Weijun Wang, Tobias Weyand, Marco Andreetto, Hartwig Adam

ASSOCIATION: Google

FROM: arXiv:1704.04861

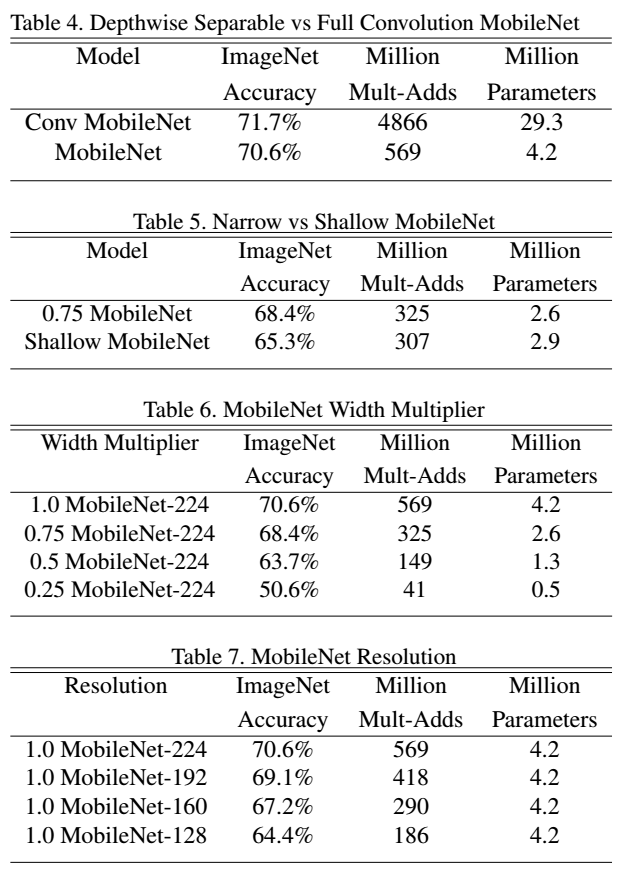

The core layer of MobileNet is the depthwise separable filter, i.e. the depthwise separable convolution. The network structure is another factor that boosts performance. Finally, the width and resolution can be tuned to trade off latency against accuracy.

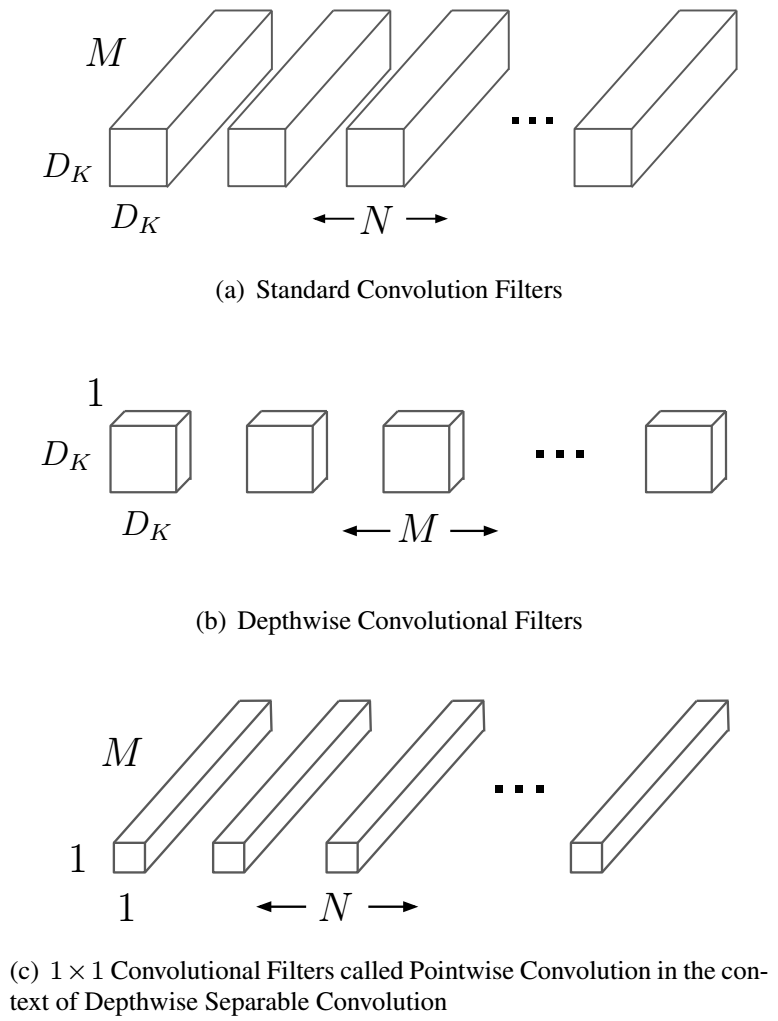

Depthwise separable convolution is a form of factorized convolution that factorizes a standard convolution into a depthwise convolution and a $1 \times 1$ convolution called a pointwise convolution. In MobileNet, the depthwise convolution applies a single filter to each input channel, and the pointwise convolution then applies a $ 1 \times 1 $ convolution to combine the outputs of the depthwise convolution. The following figure illustrates the difference between standard convolution and depthwise separable convolution.
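A direct NumPy sketch of the two steps, assuming stride 1, no padding and a channels-first `(M, H, W)` layout (function names are hypothetical):

```python
import numpy as np

def depthwise_conv(x, dw):
    """x: (M, H, W); dw: (M, K, K). One K x K filter per input channel."""
    m, h, w = x.shape
    k = dw.shape[1]
    out = np.zeros((m, h - k + 1, w - k + 1))
    for i in range(h - k + 1):
        for j in range(w - k + 1):
            # each channel is filtered only by its own kernel
            out[:, i, j] = (x[:, i:i + k, j:j + k] * dw).sum(axis=(1, 2))
    return out

def pointwise_conv(x, pw):
    """x: (M, H, W); pw: (N, M). A 1x1 convolution mixing channels at every location."""
    return np.einsum('nm,mhw->nhw', pw, x)

def depthwise_separable_conv(x, dw, pw):
    return pointwise_conv(depthwise_conv(x, dw), pw)
```

The result equals a standard convolution whose kernel is restricted to `W[n, m] = pw[n, m] * dw[m]`, which is exactly the factorization that saves computation.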

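For a $ D_K \times D_K $ kernel, $ M $ input channels, $ N $ output channels and $ D_F \times D_F $ feature maps, the standard convolution has the computation cost of

$$ D_K \cdot D_K \cdot M \cdot N \cdot D_F \cdot D_F $$

Depthwise separable convolution costs

$$ D_K \cdot D_K \cdot M \cdot D_F \cdot D_F + M \cdot N \cdot D_F \cdot D_F $$

which is the sum of the depthwise and the pointwise convolutions, i.e. a reduction by a factor of $ \frac{1}{N} + \frac{1}{D_K^2} $ compared with the standard convolution.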
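Evaluating MobileNet's cost model (kernel size $ D_K $, $ M $ input channels, $ N $ output channels, $ D_F \times D_F $ feature maps) for one layer shows the size of the savings. The layer sizes below are illustrative, not taken from these notes:

```python
def standard_conv_cost(d_k, m, n, d_f):
    # D_K * D_K * M * N * D_F * D_F multiply-adds
    return d_k * d_k * m * n * d_f * d_f

def separable_conv_cost(d_k, m, n, d_f):
    # depthwise part D_K*D_K*M*D_F*D_F plus pointwise part M*N*D_F*D_F
    return d_k * d_k * m * d_f * d_f + m * n * d_f * d_f

std = standard_conv_cost(3, 512, 512, 14)   # 462422016
sep = separable_conv_cost(3, 512, 512, 14)  # 52283392
print(sep / std)                            # equals 1/512 + 1/9, about 0.113
```

With $ 3 \times 3 $ kernels the ratio is dominated by $ 1/D_K^2 = 1/9 $, so the separable layer is roughly 8 to 9 times cheaper.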

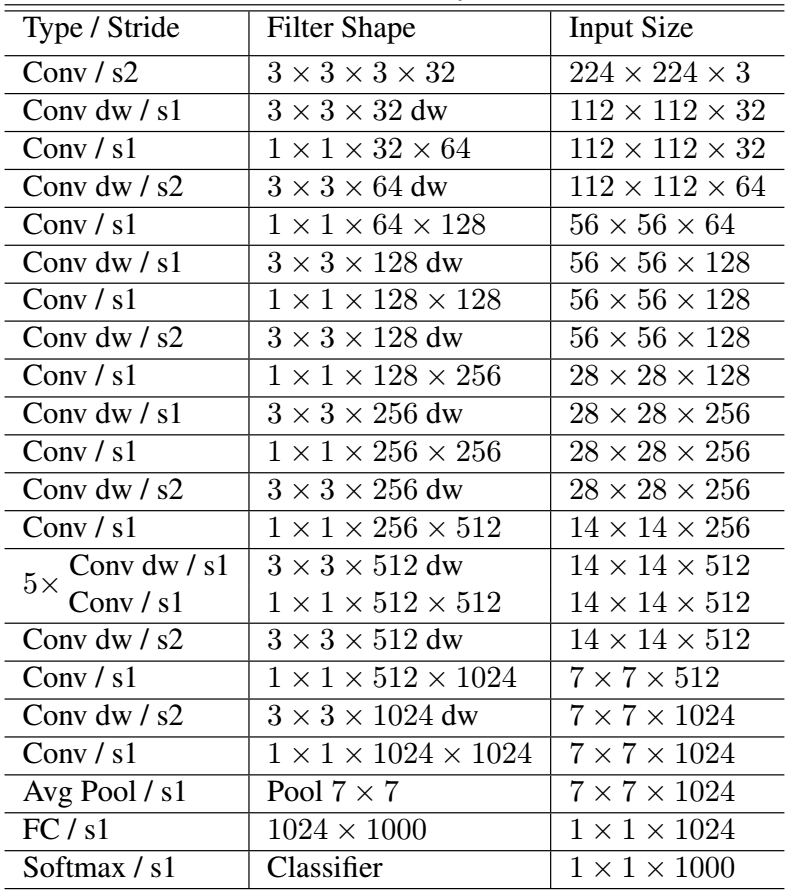

The following table shows the structure of MobileNet.

The width multiplier $ \alpha $ thins the network by reducing the number of channels in every layer. The resolution multiplier $ \rho $ reduces the resolution of the input image, and therefore of every internal feature map.
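In MobileNet's cost model the width multiplier scales $ M $ and $ N $ to $ \alpha M $ and $ \alpha N $, and the resolution multiplier scales $ D_F $ to $ \rho D_F $. A small sketch of one depthwise separable layer's cost under both multipliers (the layer sizes in the usage lines are illustrative):

```python
def mobilenet_layer_cost(d_k, m, n, d_f, alpha=1.0, rho=1.0):
    """Cost of one depthwise separable layer with width multiplier alpha and resolution multiplier rho."""
    m_a, n_a = alpha * m, alpha * n  # thinned input/output channels
    d_f_r = rho * d_f                # reduced feature-map size
    return d_k * d_k * m_a * d_f_r * d_f_r + m_a * n_a * d_f_r * d_f_r

base = mobilenet_layer_cost(3, 512, 512, 14)                   # 52283392.0
thin = mobilenet_layer_cost(3, 512, 512, 14, alpha=0.5)        # 13296640.0
low_res = mobilenet_layer_cost(3, 512, 512, 14, rho=0.5)       # 13070848.0
```

Halving the resolution cuts the cost by exactly 4 ($ \rho^2 $), while halving the width cuts it by slightly less than 4, because the depthwise term only scales with $ \alpha $, not $ \alpha^2 $.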

This weekend was so hot that everyone seemed bad-tempered and had difficulty breathing. However, I helped myself feel the beauty of life by cooking for myself and going out to watch a movie. Recently, I've always been hiding in an air-conditioned room, feeling bored by almost everything. Perhaps when it is hot, it is time to sweat. Sweating relieved a lot of my pressure and made me feel refreshed. The cold udon noodles and bolognese calmed me down and gave me energy.

TITLE: Optimizing Deep CNN-Based Queries over Video Streams at Scale

AUTHOR: Daniel Kang, John Emmons, Firas Abuzaid, Peter Bailis, Matei Zaharia

ASSOCIATION: Stanford InfoLab

FROM: arXiv:1703.02529

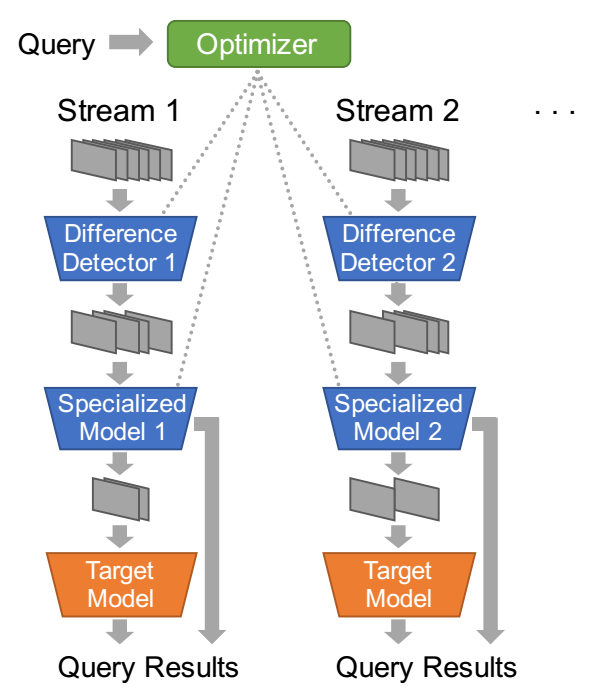

The workflow of NoScope is shown in the following figure. Briefly, NoScope's optimizer selects a different configuration of difference detectors and specialized models for each video stream, so that binary classification is performed as quickly as possible without calling the full target CNN, which is invoked only when necessary.

There are three main components in this system: Difference Detectors, Specialized Models and a Cost-based Optimizer.
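How the three components interact at inference time can be sketched as a cascade. This is a simplified illustration, not NoScope's code: the difference detector here compares each frame only with the previous one, and the thresholds `delta_diff`, `c_low`, `c_high` are placeholders for the values the cost-based optimizer would pick per stream.

```python
def noscope_cascade(frames, diff, specialized, target_cnn,
                    delta_diff=0.1, c_low=0.2, c_high=0.8):
    """Hypothetical sketch of a NoScope-style cascade for binary classification.

    diff(a, b)     -> float, difference between two frames (difference detector)
    specialized(f) -> float in [0, 1], confidence of the cheap specialized model
    target_cnn(f)  -> bool, the expensive reference CNN
    """
    labels, prev_frame, prev_label = [], None, None
    for f in frames:
        if prev_frame is not None and diff(f, prev_frame) < delta_diff:
            label = prev_label          # frame barely changed: reuse the last label
        else:
            c = specialized(f)
            if c < c_low:
                label = False           # confidently negative
            elif c > c_high:
                label = True            # confidently positive
            else:
                label = target_cnn(f)   # uncertain: fall back to the full CNN
        labels.append(label)
        prev_frame, prev_label = f, label
    return labels
```

The expensive `target_cnn` is reached only for frames that both changed noticeably and fell into the specialized model's uncertain band.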

TITLE: Learning Spatial Regularization with Image-level Supervisions for Multi-label Image Classification

AUTHOR: Feng Zhu, Hongsheng Li, Wanli Ouyang, Nenghai Yu, Xiaogang Wang

ASSOCIATION: University of Science and Technology of China, University of Sydney, The Chinese University of Hong Kong

FROM: arXiv:1702.05891

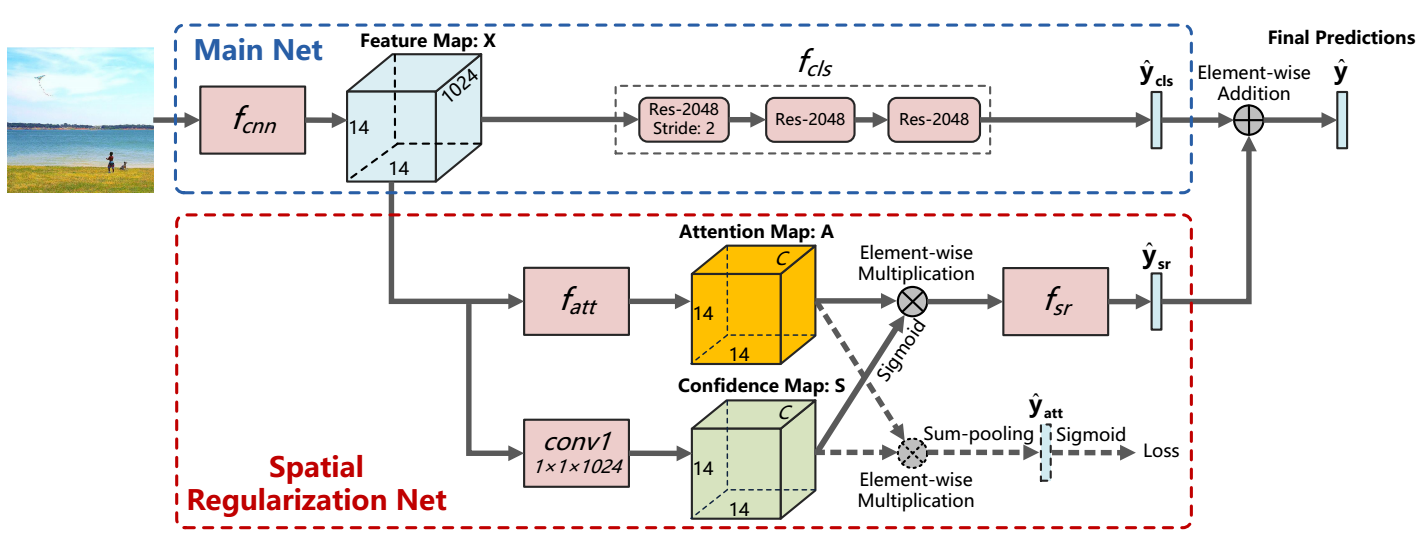

The proposed Spatial Regularization Net (SRN) takes visual features from the main net as inputs and learns to regularize spatial relations between labels. Such relations are exploited based on the learned attention maps for the multiple labels. Label confidences from both main net and SRN are aggregated to generate final classification confidences. The whole network is a unified framework and is trained in an end-to-end manner.

The scheme of SRN is illustrated in the following figure.

The network is trained step by step.

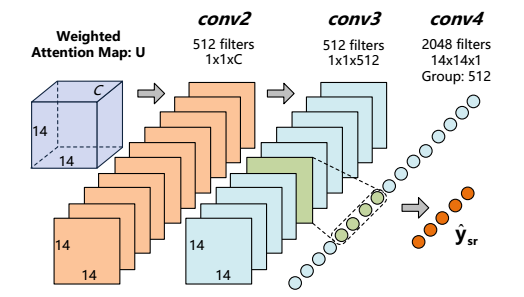

The main network follows the structure of ResNet-101 and is fine-tuned on the target dataset. The Attention Map and the Confidence Map each have $ C $ channels, the same as the number of categories. In step 2, their outputs are merged by element-wise multiplication and average-pooled into a feature vector. In step 3, this average pooling is replaced by $ f_{sr} $, which is implemented as three convolution layers with ReLU nonlinearity followed by one fully connected layer, as shown in the following figure.
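The step-2 merge can be written in a couple of lines. This sketch assumes both maps come as `(C, H, W)` arrays for one image and applies no extra normalization to the attention map; the function name is hypothetical.

```python
import numpy as np

def attention_weighted_confidence(attention, confidence):
    """attention, confidence: (C, H, W) maps, one channel per label.

    Element-wise product followed by spatial average pooling gives one value per label.
    """
    return (attention * confidence).mean(axis=(1, 2))
```

Each label's confidence is thus weighted toward the locations its attention map highlights.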

$ conv4 $ is composed of single-channel filters, which can be implemented in Caffe with “group”. This design reflects the fact that a label may be semantically related to only a small number of other labels, so measuring spatial relations with unrelated attention maps is unnecessary.
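Caffe's `group` setting splits the input and output channels into `group` slices and connects each output slice only to the matching input slice. A NumPy sketch of that behaviour (stride 1, no padding, hypothetical function names) shows that with `group` equal to the number of channels every filter sees a single channel:

```python
import numpy as np

def conv2d(x, w):
    """Valid cross-correlation. x: (Cin, H, W); w: (Cout, Cin, K, K)."""
    _, h, wd = x.shape
    cout, _, k, _ = w.shape
    out = np.zeros((cout, h - k + 1, wd - k + 1))
    for i in range(h - k + 1):
        for j in range(wd - k + 1):
            # contract (Cin, K, K) of the kernel with the input patch
            out[:, i, j] = np.tensordot(w, x[:, i:i + k, j:j + k], axes=3)
    return out

def grouped_conv2d(x, w, group):
    """w: (Cout, Cin // group, K, K). Each output slice sees only its own input slice."""
    xs = np.split(x, group, axis=0)
    ws = np.split(w, group, axis=0)
    return np.concatenate([conv2d(xi, wi) for xi, wi in zip(xs, ws)], axis=0)
```

With `group = C`, changing one input channel changes only the matching output channel, so each label's filter looks at its own map only.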

The last flower in this month.

I've had less input recently, so there has been less output from me. This month has been really busy. It's time to keep up!

I think I am a single-thread processor. It's really hard for me to handle multiple tasks simultaneously. When I'm working on one task, I start worrying about another, which means I might mess up the current one. I don't know whether anyone can do it better. Besides the single-thread thing, sometimes I become too anxious to be in the mood to do anything, like keeping a diary, reading papers or picking up a habit. Maybe I'm too narrow-minded??