Another week.

I’m always looking for the brightest sunshine!

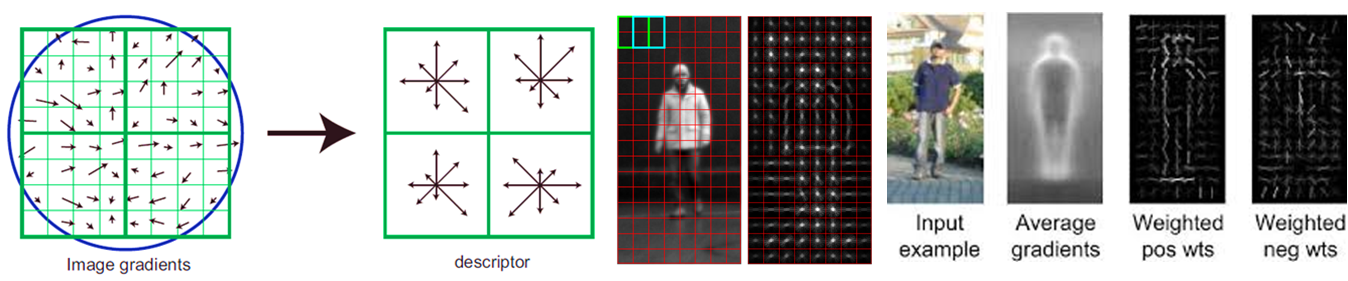

With a 16×16 block, a block stride of 8, 8×8 cells, 9 orientation bins per cell, and a 128×64 (height × width) sliding window, the HOG descriptor is a 3780-d feature: #Block = ((64-16)/8+1) × ((128-16)/8+1) = 7 × 15 = 105, #Cell per block = (16/8) × (16/8) = 4, and 105 × 4 × 9 = 3780.
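The arithmetic above can be checked with a few lines of Python. This is only a sketch of the counting rule, assuming square blocks and cells; the function name `hog_feature_length` is made up for this note and is not an OpenCV API.

```python
def hog_feature_length(window_hw, block, stride, cell, bins):
    """Length of a HOG descriptor for one detection window.

    window_hw is (height, width) in pixels; block, stride and cell are
    side lengths in pixels (square blocks and cells are assumed).
    """
    win_h, win_w = window_hw
    blocks_x = (win_w - block) // stride + 1   # block positions along the width
    blocks_y = (win_h - block) // stride + 1   # block positions along the height
    cells_per_block = (block // cell) ** 2
    return blocks_x * blocks_y * cells_per_block * bins


print(hog_feature_length((128, 64), block=16, stride=8, cell=8, bins=9))  # 3780
```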

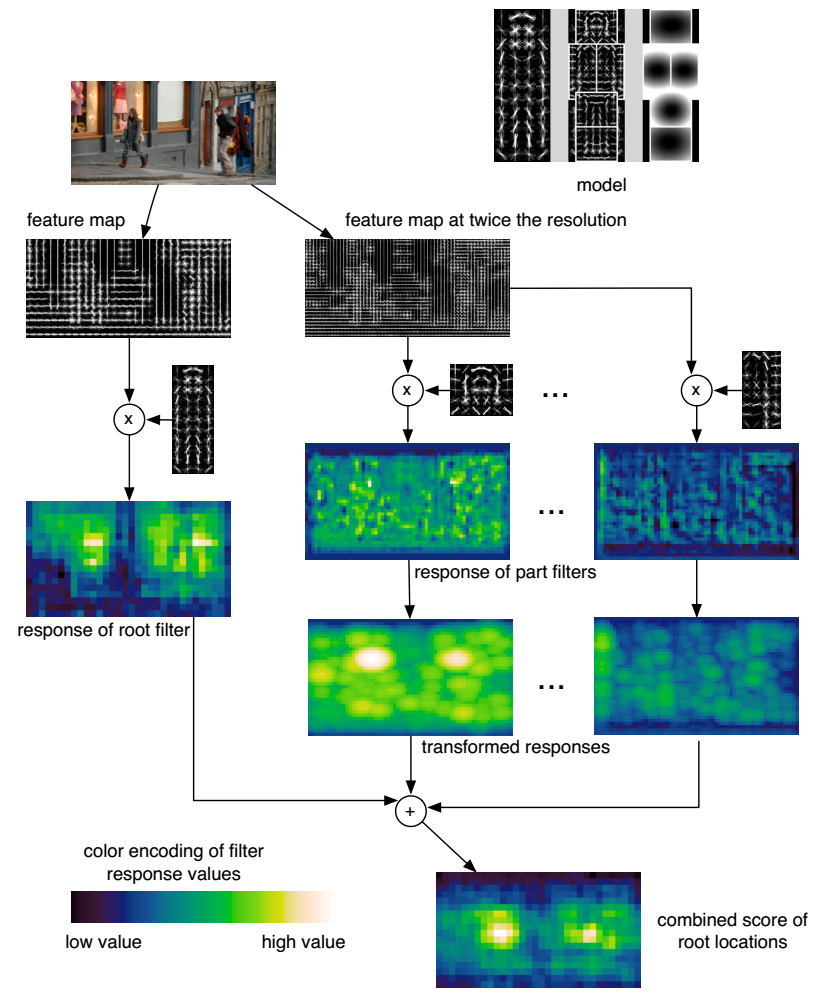

This transformation spreads high filter scores to nearby locations, taking into account the deformation costs.

The overall root scores at each level can be expressed by the sum of the root filter response at that level, plus shifted versions of transformed and sub-sampled part responses.
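To get a feel for how the transformation "spreads" scores, here is a heavily simplified 1-D sketch. It assumes a purely quadratic deformation cost with a single weight `quad_cost` (a name invented here), ignores the sub-sampling between pyramid levels, and uses a brute-force maximum instead of the fast generalized distance transform used in practice.

```python
def transform_part_response(response, quad_cost):
    """For each location x, take the best part score found at any x2,
    minus a quadratic penalty for moving the part from x to x2."""
    n = len(response)
    return [
        max(response[x2] - quad_cost * (x2 - x) ** 2 for x2 in range(n))
        for x in range(n)
    ]


def root_score(root_response, part_responses, quad_cost):
    """Root filter response plus the transformed response of every part
    (part anchors/shifts and sub-sampling are left out of this sketch)."""
    total = list(root_response)
    for part in part_responses:
        spread = transform_part_response(part, quad_cost)
        total = [t + s for t, s in zip(total, spread)]
    return total


part = [0.0, 0.0, 5.0, 0.0, 0.0]
print(transform_part_response(part, quad_cost=1.0))  # [1.0, 4.0, 5.0, 4.0, 1.0]
```

The single peak at index 2 leaks into its neighbours, with the leak shrinking as the displacement (and therefore the deformation cost) grows.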

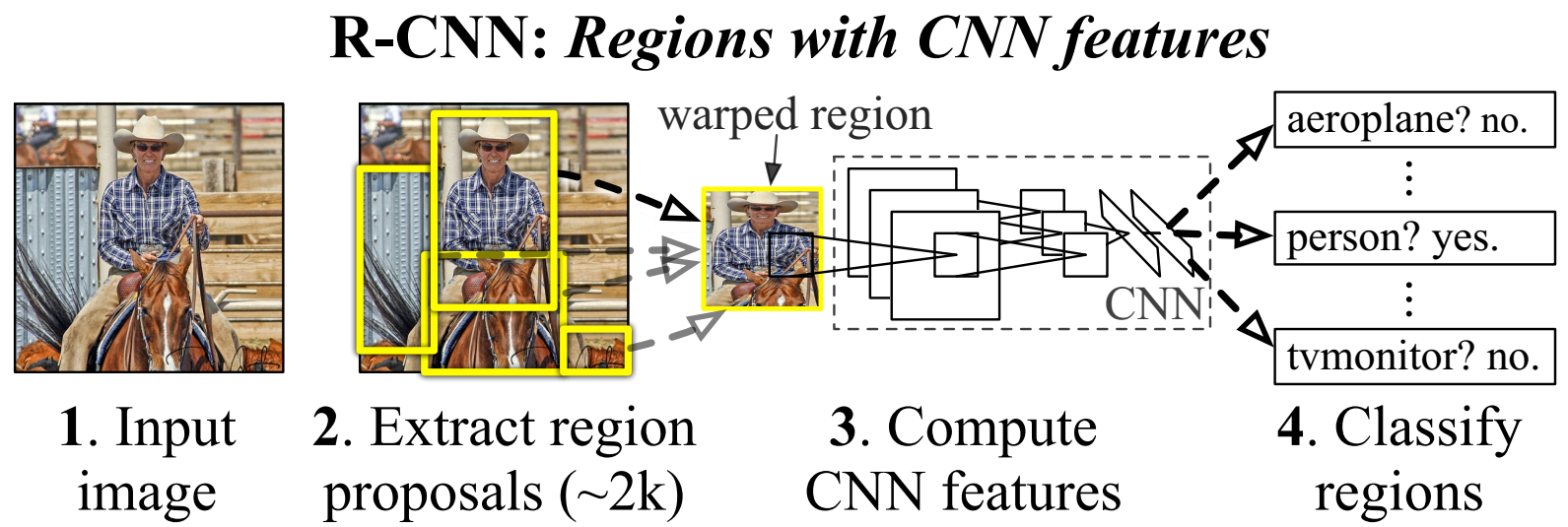

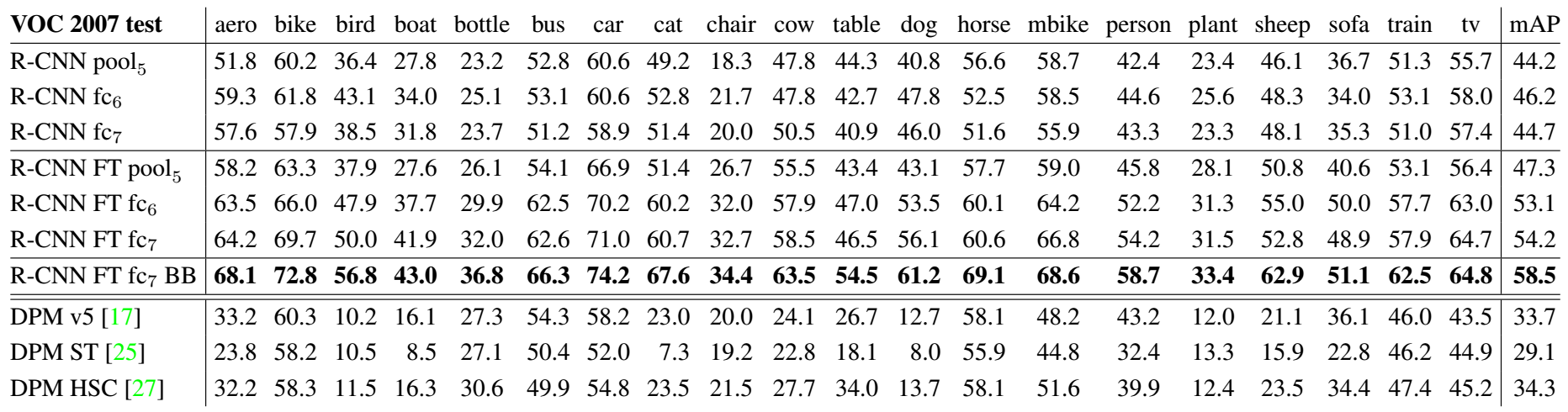

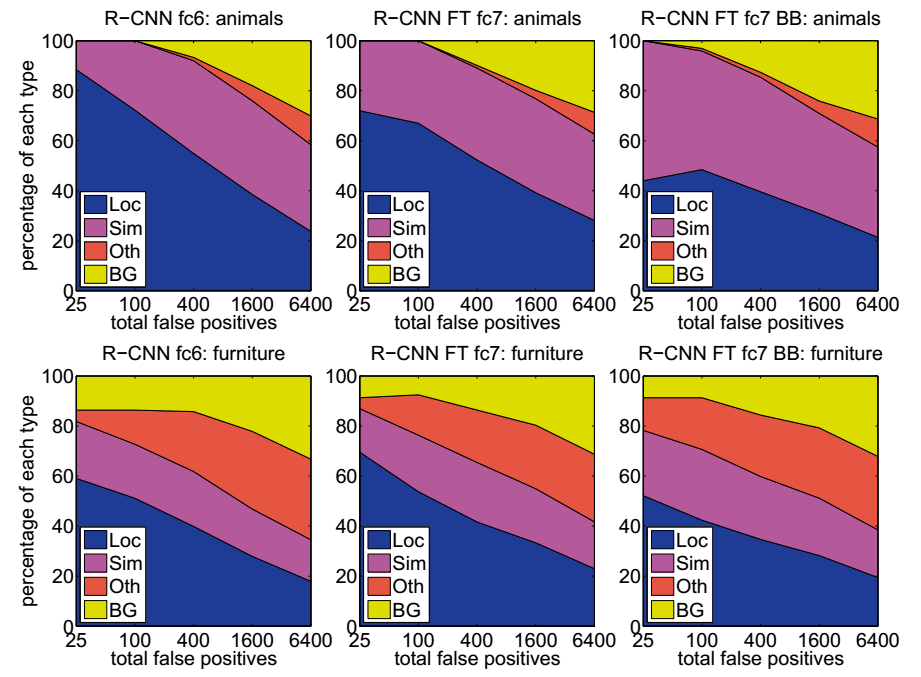

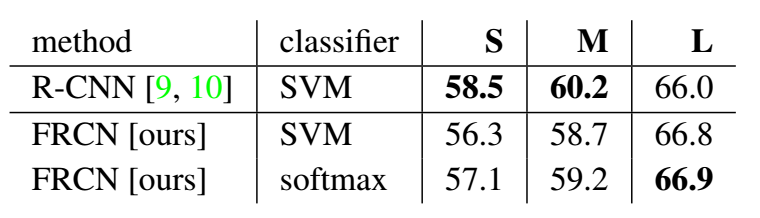

Fine-tuned on proposals with N+1 classes without any modification to the network

SVM for each category

Bounding-box regression

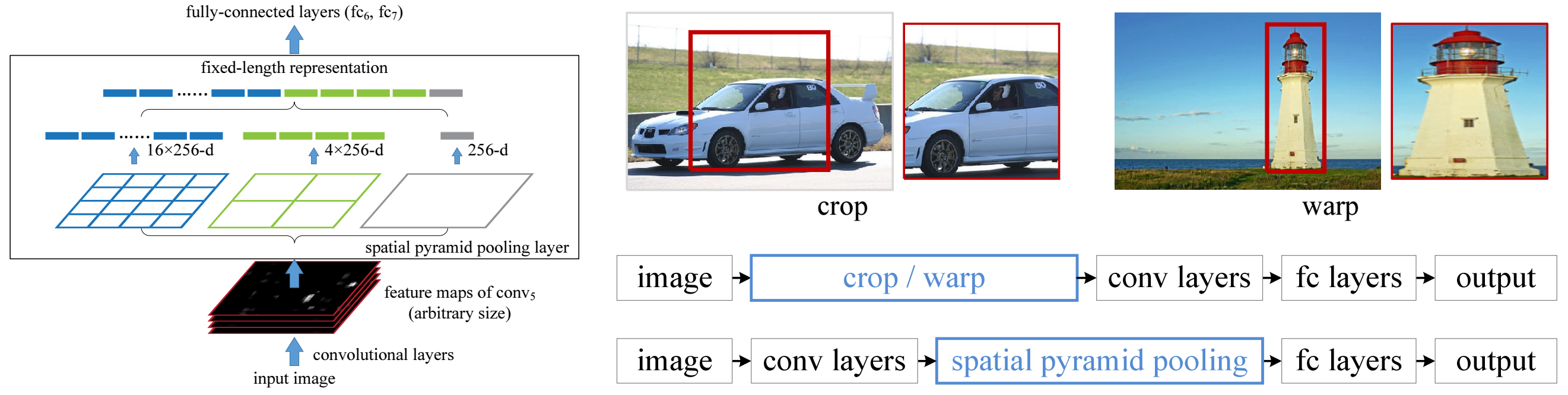

Convert arbitrary input size to fixed length
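A minimal pure-Python sketch of the idea, for a single-channel feature map stored as a list of rows. The pyramid levels `(1, 2, 4)` are an assumption picked for illustration, and the bin boundaries (floor for the start, ceiling for the end) are one reasonable choice rather than the exact rule of any particular implementation.

```python
def spp_vector(fmap, levels=(1, 2, 4)):
    """Max-pool a 2-D map into sum(n * n for n in levels) values,
    whatever the height and width of the map are."""
    h, w = len(fmap), len(fmap[0])
    out = []
    for n in levels:
        for i in range(n):
            r0 = (i * h) // n                    # first row of bin i (floor)
            r1 = ((i + 1) * h + n - 1) // n      # one past the last row (ceil)
            for j in range(n):
                c0 = (j * w) // n
                c1 = ((j + 1) * w + n - 1) // n
                out.append(max(fmap[r][c]
                               for r in range(r0, r1)
                               for c in range(c0, c1)))
    return out


small = [[r * 7 + c for c in range(7)] for r in range(5)]    # 5 x 7 map
large = [[r * 9 + c for c in range(9)] for r in range(13)]   # 13 x 9 map
print(len(spp_vector(small)), len(spp_vector(large)))        # 21 21
```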

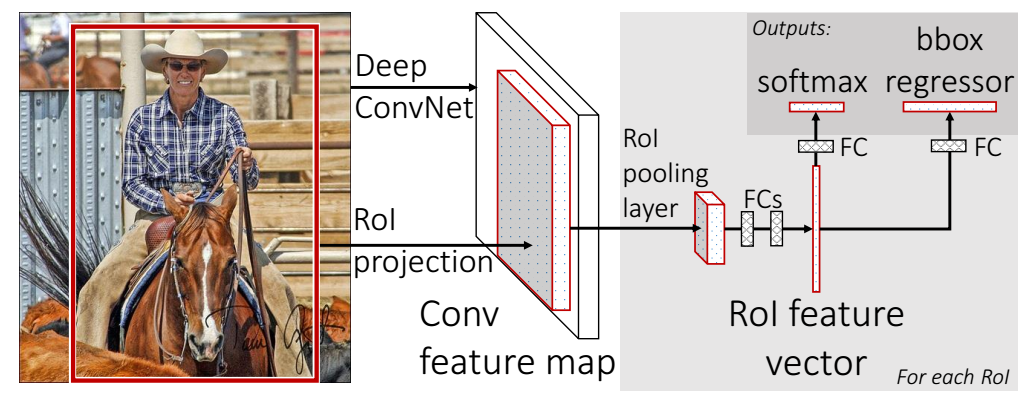

Fine-tuned with N+1 classes and two sibling layers

The remaining RoIs, whose IoU with the ground truth falls in [0.1, 0.5), are used as background samples
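The sampling rule can be sketched as follows. Boxes are assumed to be `(x1, y1, x2, y2)` tuples; the foreground threshold of 0.5 is the complement of the background range above, and the helper names `iou` and `label_roi` are made up for this note.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def label_roi(roi, gt_boxes, fg_thresh=0.5, bg_low=0.1):
    """Label an RoI by its best IoU with any ground-truth box."""
    best = max((iou(roi, g) for g in gt_boxes), default=0.0)
    if best >= fg_thresh:
        return "foreground"
    if best >= bg_low:
        return "background"
    return "ignored"          # below bg_low: not sampled in this sketch


gt = [(0, 0, 10, 10)]
print(label_roi((0, 0, 10, 10), gt))    # foreground
print(label_roi((5, 0, 15, 10), gt))    # background (IoU = 1/3)
print(label_roi((20, 20, 30, 30), gt))  # ignored
```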

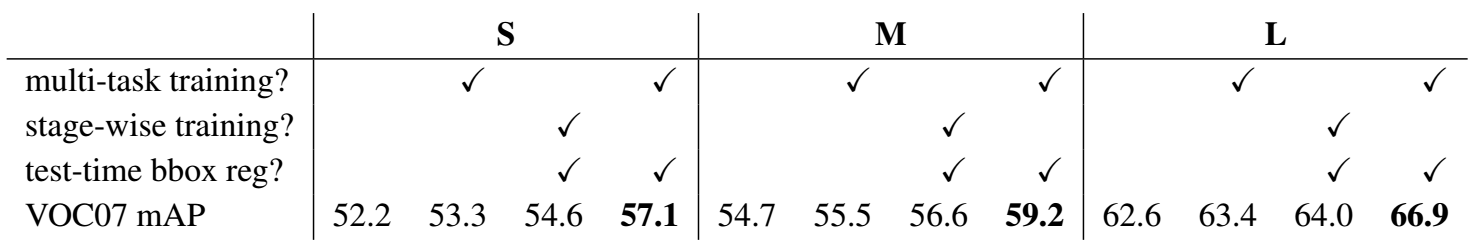

Multi-task loss, one loss for classification and one for bounding box regression
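As a rough sketch for a single RoI: the classification term is the negative log-probability of the true class, and the regression term is a smooth L1 penalty on the four box offsets, switched off for background (class 0). The weight `lam` and the argument names are assumptions made for this note.

```python
import math


def smooth_l1(x):
    """Quadratic near zero, linear for |x| >= 1."""
    ax = abs(x)
    return 0.5 * x * x if ax < 1.0 else ax - 0.5


def multi_task_loss(class_probs, true_class, pred_offsets, target_offsets, lam=1.0):
    """Classification loss plus (for non-background RoIs) box regression loss."""
    cls_loss = -math.log(class_probs[true_class])
    if true_class == 0:               # background: no box to regress
        return cls_loss
    loc_loss = sum(smooth_l1(p - t) for p, t in zip(pred_offsets, target_offsets))
    return cls_loss + lam * loc_loss


# One foreground RoI: class 1 predicted with probability 0.8.
print(multi_task_loss([0.2, 0.8], 1, [0.5, 0.0, 0.0, 2.0], [0.0, 0.0, 0.0, 0.0]))
```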

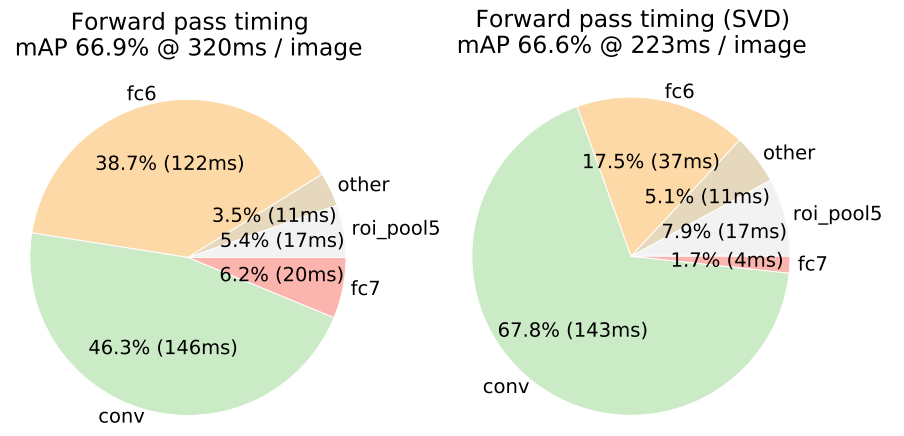

Accelerate using truncated SVD

Implemented by using two FCs without non-linear activation
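Why this speeds things up is easy to see from the parameter count. A u × v weight matrix W ≈ U Σ_t Vᵀ becomes one layer with weights Σ_t Vᵀ (v inputs, t outputs) followed by one with weights U (t inputs, u outputs), so u·v weights shrink to t·(u + v). The layer sizes below (25088 inputs, 4096 outputs, t = 1024) are only example numbers chosen for this note.

```python
def fc_params(u, v):
    """Weights in a single fully connected layer with v inputs and u outputs."""
    return u * v


def truncated_svd_params(u, v, t):
    """Weights after splitting the layer in two with rank t:
    first layer v -> t (Sigma_t V^T), second layer t -> u (U)."""
    return t * v + u * t


u, v, t = 4096, 25088, 1024      # assumed example sizes
print(fc_params(u, v))                   # 102760448
print(truncated_svd_params(u, v, t))     # 29884416
```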

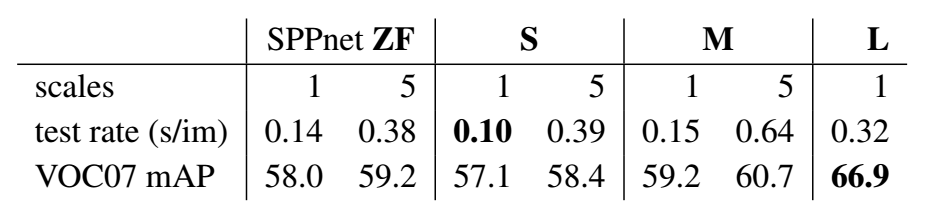

Training time

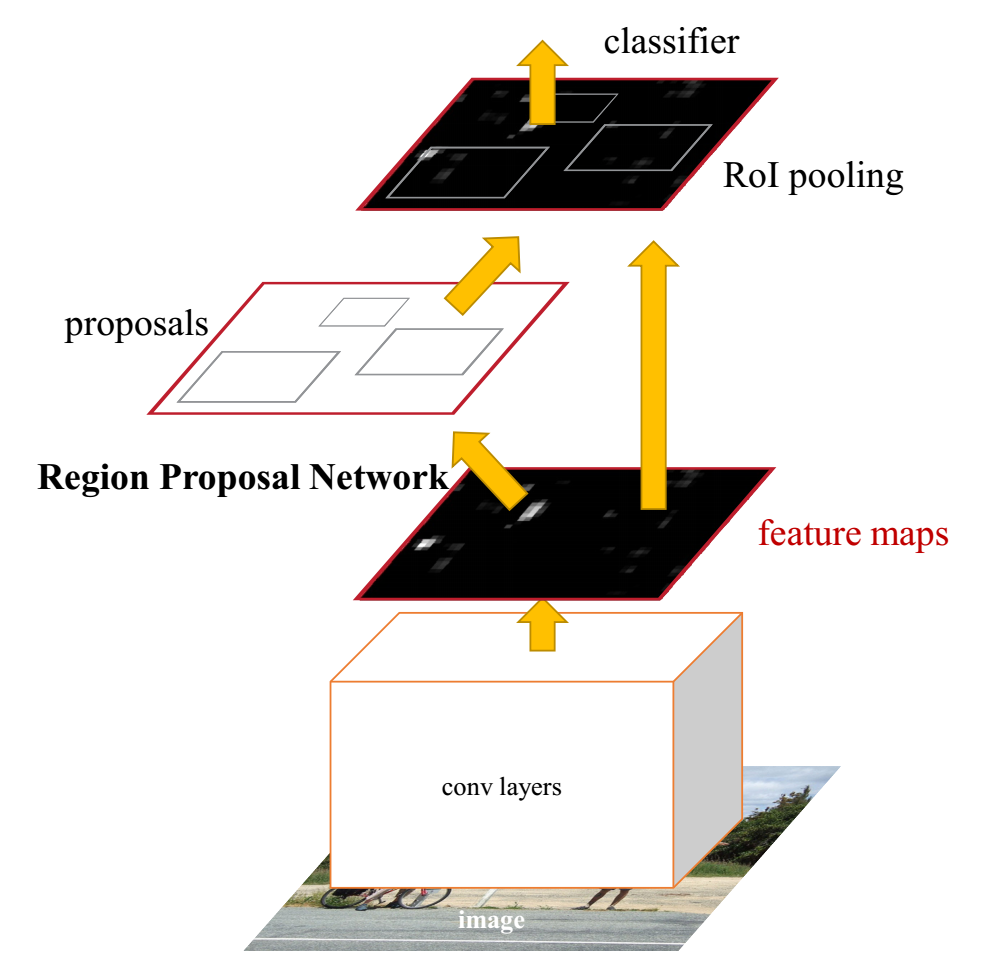

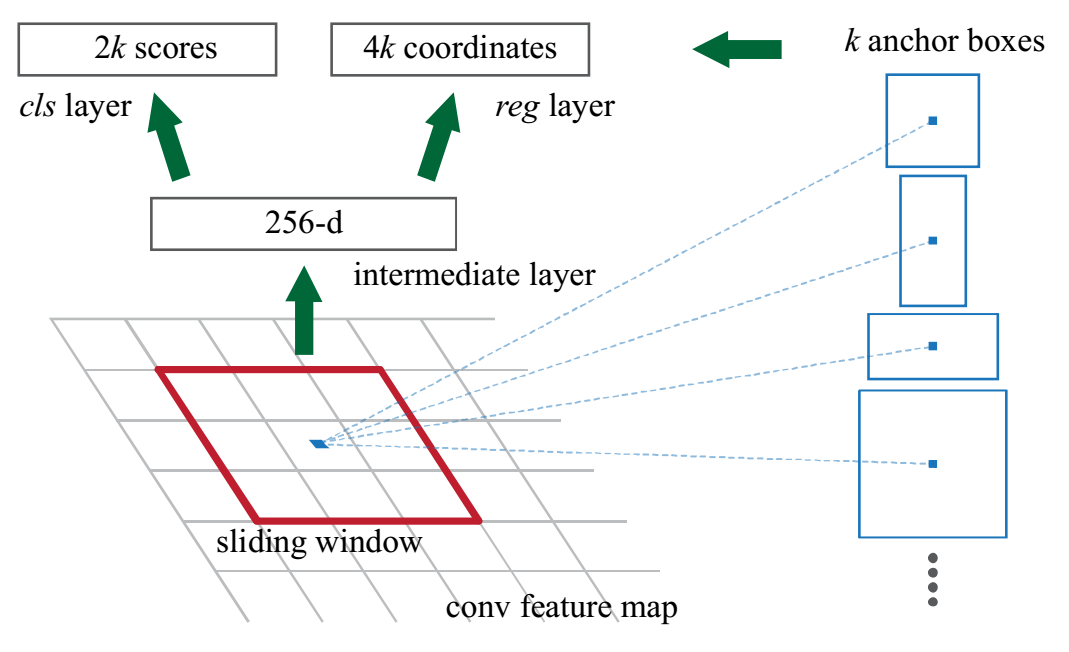

Anchors

Translation-Invariant

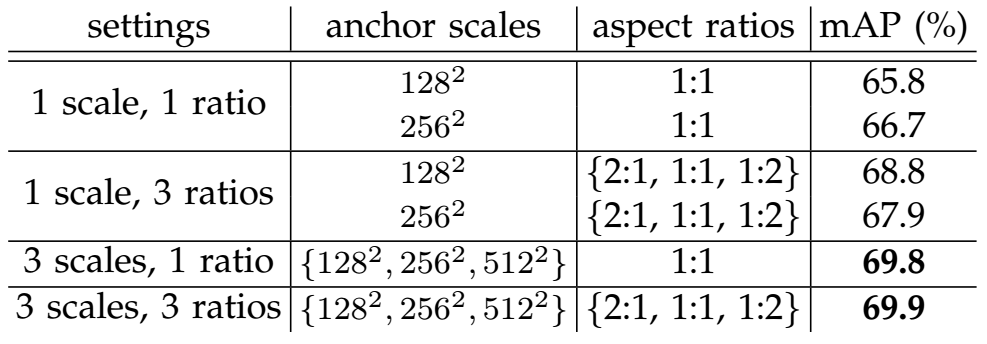

Multi-Scale and Multi-Ratio
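A small sketch of how such anchors could be generated at one sliding-window position. It assumes 3 scales × 3 aspect ratios (9 anchors), with `ratio` meaning height / width and each anchor keeping an area of `scale ** 2`; the concrete numbers are illustrative defaults, not values read off the slides.

```python
import math


def make_anchors(cx, cy, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return (x1, y1, x2, y2) anchors centred at (cx, cy)."""
    anchors = []
    for s in scales:
        for r in ratios:
            w = s / math.sqrt(r)      # width shrinks as the box gets taller
            h = s * math.sqrt(r)      # so that w * h stays equal to s * s
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


here = make_anchors(0, 0)
there = make_anchors(16, 0)          # next position with a stride of 16 (assumed)
print(len(here))                     # 9
```

Because the same set of shapes is reused at every position, moving the centre just shifts all the boxes, which is what makes the scheme translation-invariant.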

Objectness and Localization

Sharing Features for RPN and Fast-RCNN

Alternating Training

Training RPN

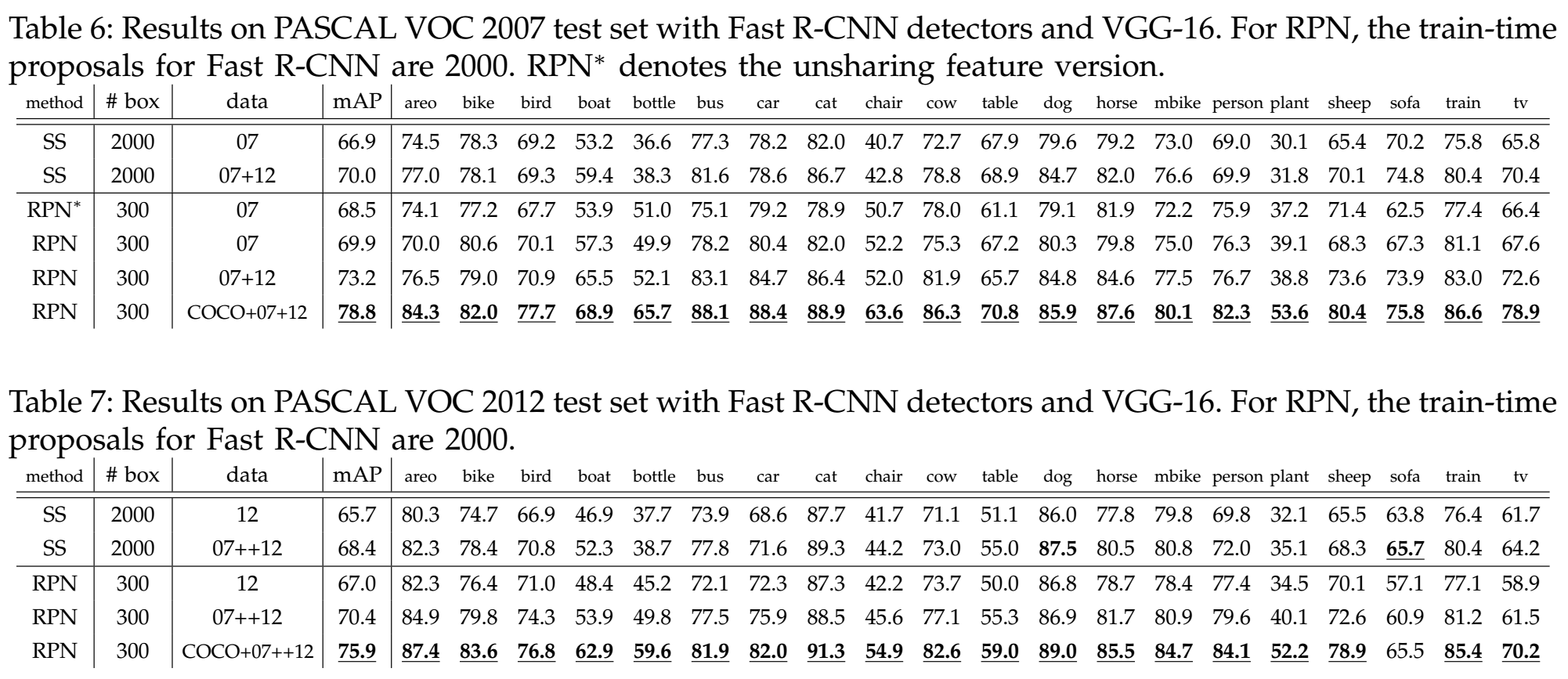

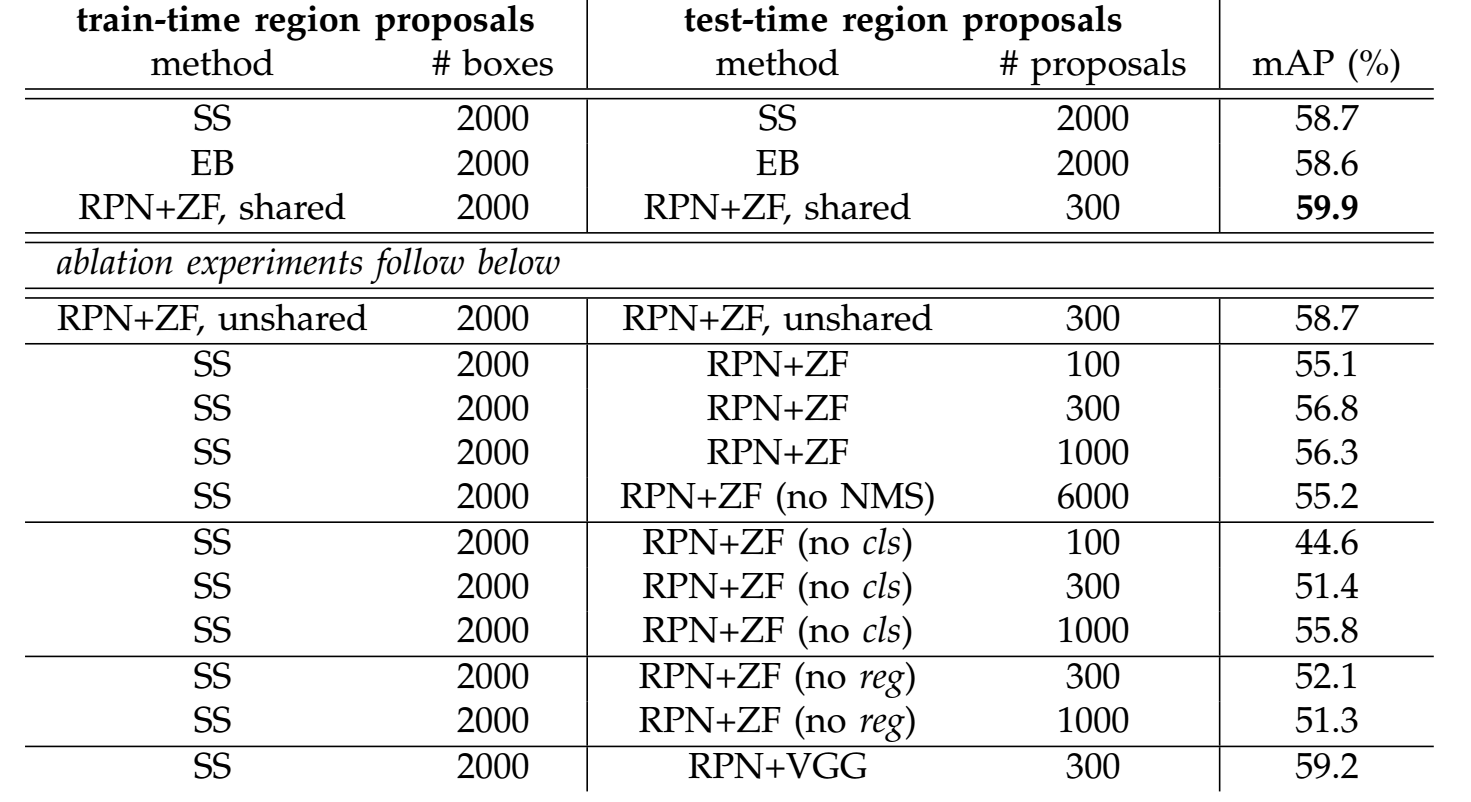

Ablation on RPN

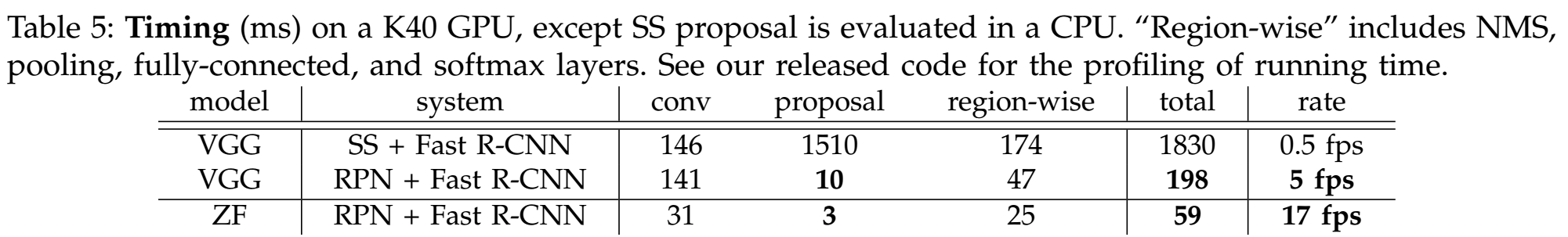

Timing

Anchors

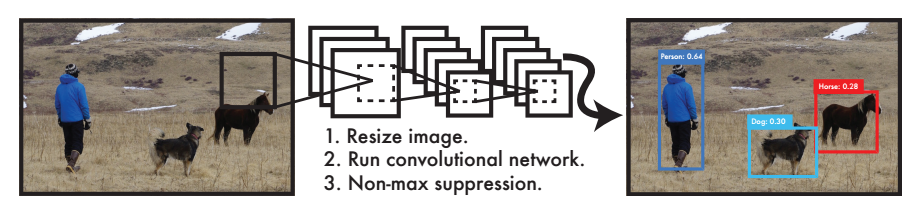

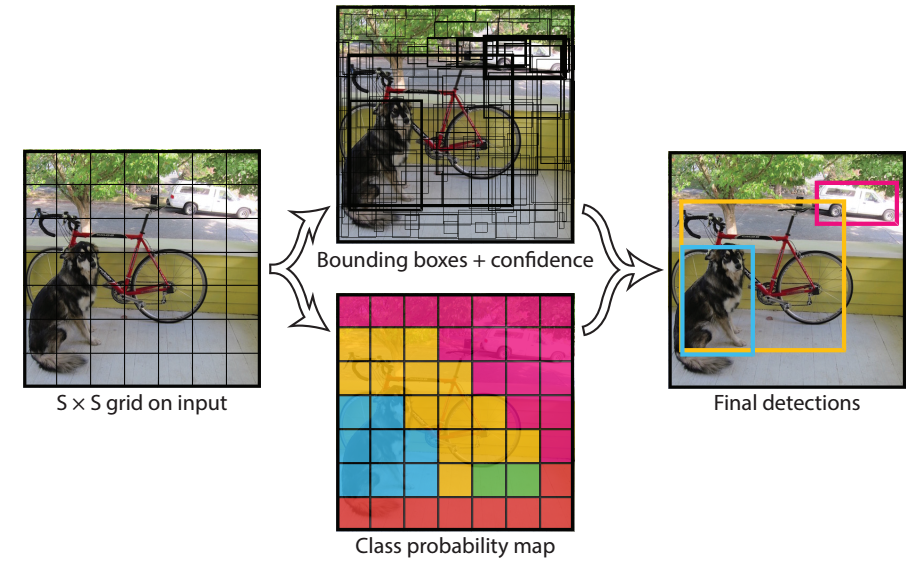

5 predictions for one bounding box: x, y, w, h, score

Each grid cell predicts C conditional class probabilities
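Putting the two statements together gives the size of the output tensor. The sketch below assumes a 7 × 7 grid, 2 boxes per cell and 20 classes; these numbers are assumptions for illustration and the function names are invented for this note.

```python
def yolo_output_shape(grid=7, boxes_per_cell=2, num_classes=20):
    """Each cell predicts boxes_per_cell * (x, y, w, h, score) plus
    one set of conditional class probabilities."""
    return (grid, grid, boxes_per_cell * 5 + num_classes)


def total_boxes(grid=7, boxes_per_cell=2):
    """Number of boxes predicted for the whole image."""
    return grid * grid * boxes_per_cell


print(yolo_output_shape())   # (7, 7, 30)
print(total_boxes())         # 98
```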

Too few bounding boxes

Data driven

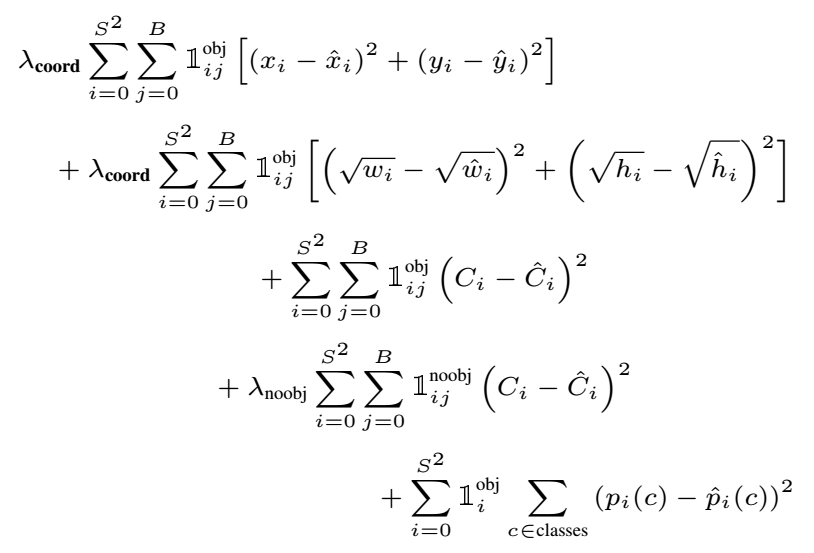

Tricks to balance loss

Square root: large object vs. small object
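The effect of the square root is easiest to see with numbers. In this sketch the same 10-unit width error is applied to a small box and a large box; the units and the function name `size_error` are arbitrary choices for illustration.

```python
import math


def size_error(true, pred, use_sqrt=True):
    """Squared error on a box side, optionally computed on square roots."""
    if use_sqrt:
        return (math.sqrt(true) - math.sqrt(pred)) ** 2
    return (true - pred) ** 2


# Same absolute error of 10 on a small (20) and a large (200) box side.
print(size_error(20, 30, use_sqrt=False), size_error(200, 210, use_sqrt=False))
print(size_error(20, 30), size_error(200, 210))
```

Without the square root both errors cost the same; with it, the error on the small box is penalised far more than the error on the large one.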

“Warm up” to start training

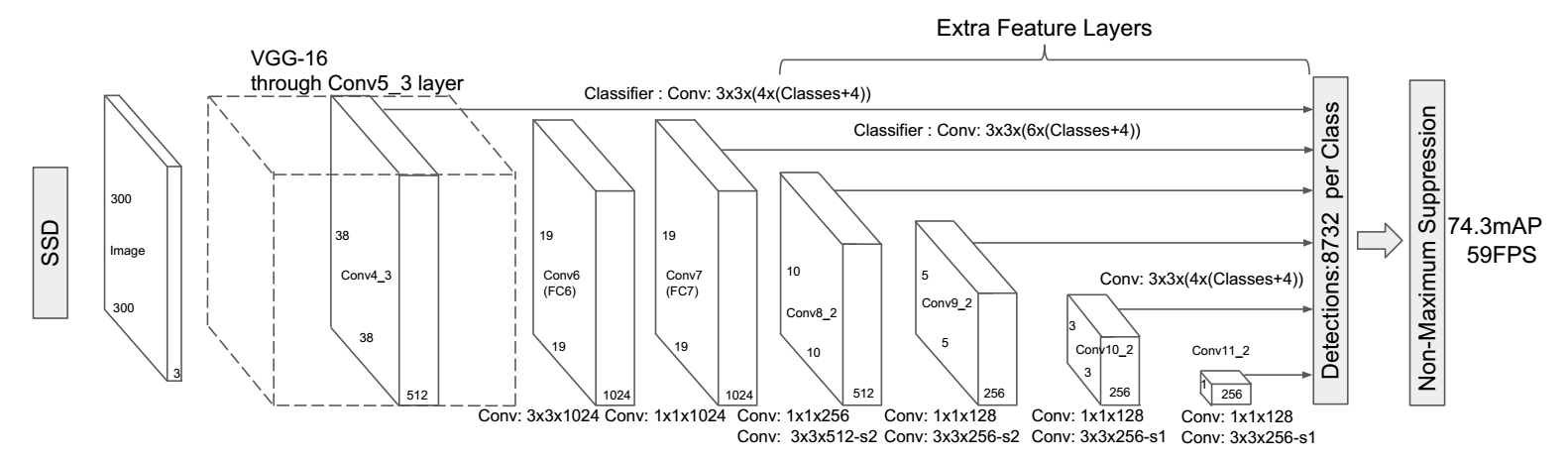

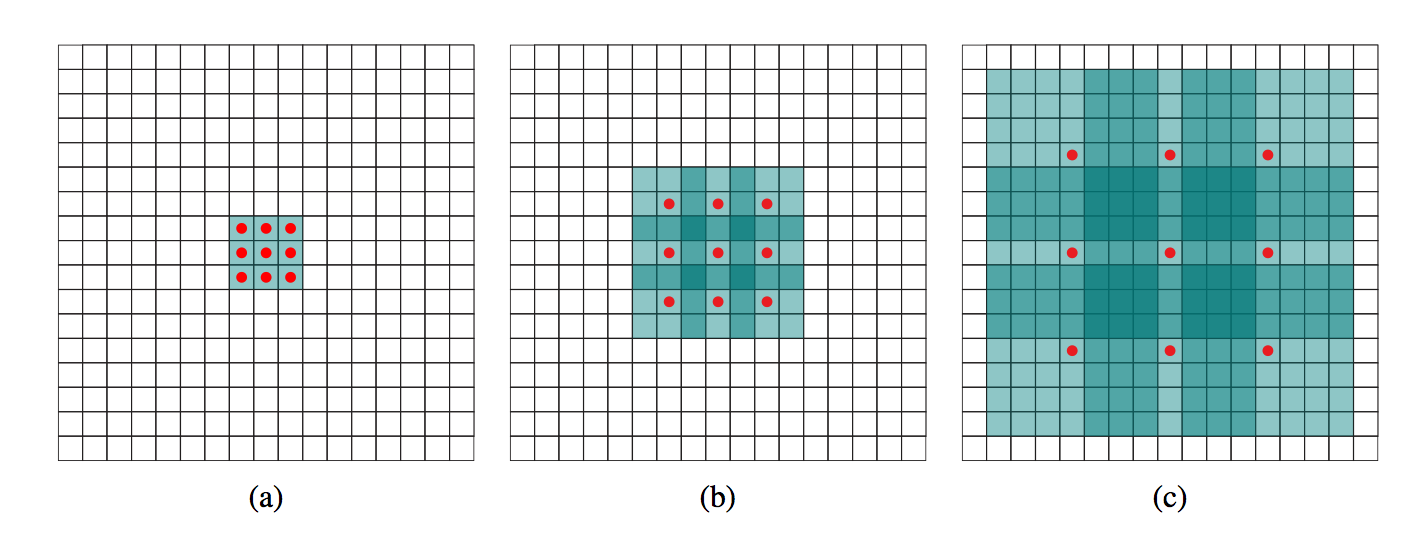

Multi-scale and Multi-ratio anchors

Multi-scale feature maps for detection

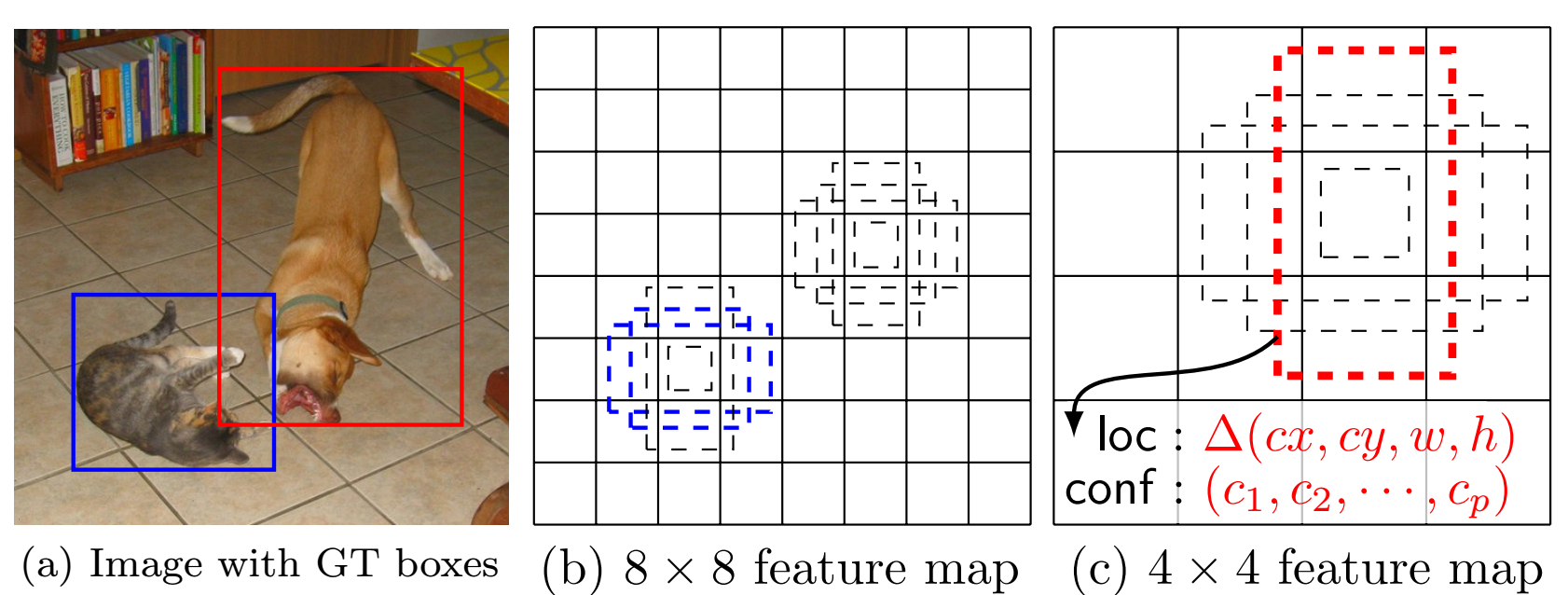

Matching anchors with ground-truth

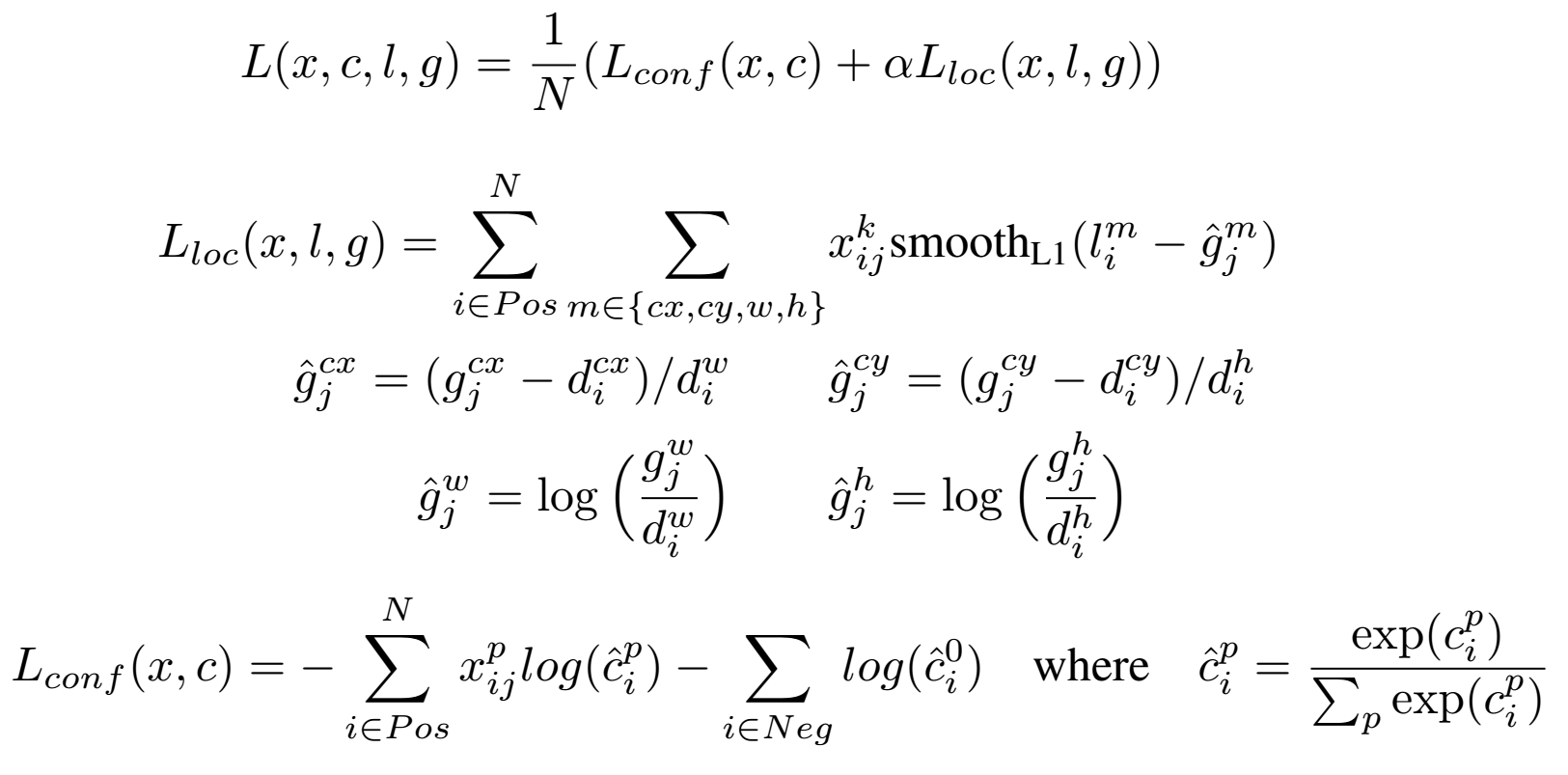

Training objective

Scales and aspect ratios for anchors
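One common way to choose the scales is to space them linearly between a smallest and a largest value across the m feature maps, and then derive each box shape from a scale and an aspect ratio. The sketch below assumes m = 6, s_min = 0.2 and s_max = 0.9 (fractions of the image size); treat these as illustrative defaults.

```python
import math


def ssd_scales(m=6, s_min=0.2, s_max=0.9):
    """Linearly spaced anchor scales, one per feature map (m >= 2)."""
    return [s_min + (s_max - s_min) * k / (m - 1) for k in range(m)]


def default_box_wh(scale, aspect_ratio):
    """Width and height of a default box; aspect_ratio = width / height."""
    return scale * math.sqrt(aspect_ratio), scale / math.sqrt(aspect_ratio)


print([round(s, 2) for s in ssd_scales()])   # [0.2, 0.34, 0.48, 0.62, 0.76, 0.9]
print(default_box_wh(0.2, 1.0))              # (0.2, 0.2)
```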

Hard negative mining
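A sketch of the selection step: since most anchors are negatives, only the negatives with the highest loss are kept, capped at a fixed multiple of the number of positives. The 3:1 ratio and the function name are assumptions made for this note.

```python
def hard_negative_mining(neg_losses, num_pos, neg_pos_ratio=3):
    """Indices of the negatives to keep: highest loss first,
    at most neg_pos_ratio * num_pos of them."""
    keep = neg_pos_ratio * num_pos
    order = sorted(range(len(neg_losses)),
                   key=lambda i: neg_losses[i], reverse=True)
    return order[:keep]


losses = [0.1, 2.0, 0.5, 1.5, 0.05]
print(hard_negative_mining(losses, num_pos=1))   # [1, 3, 2]
```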

Data augmentation

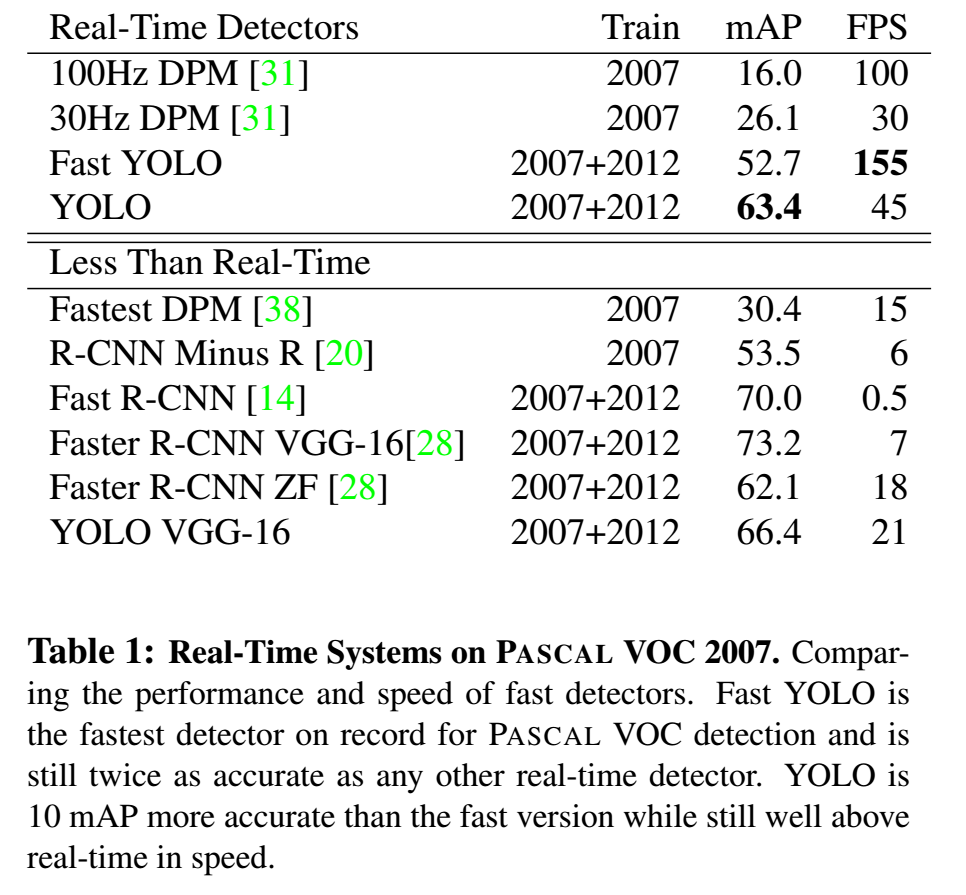

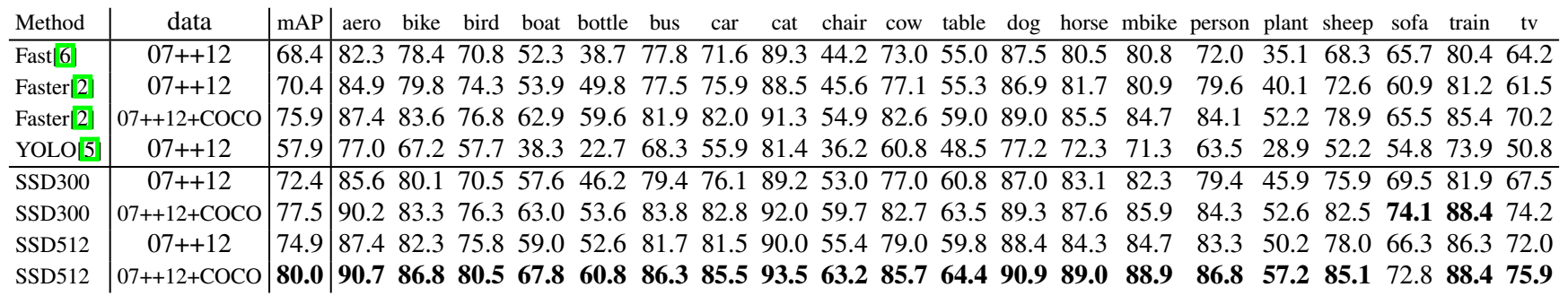

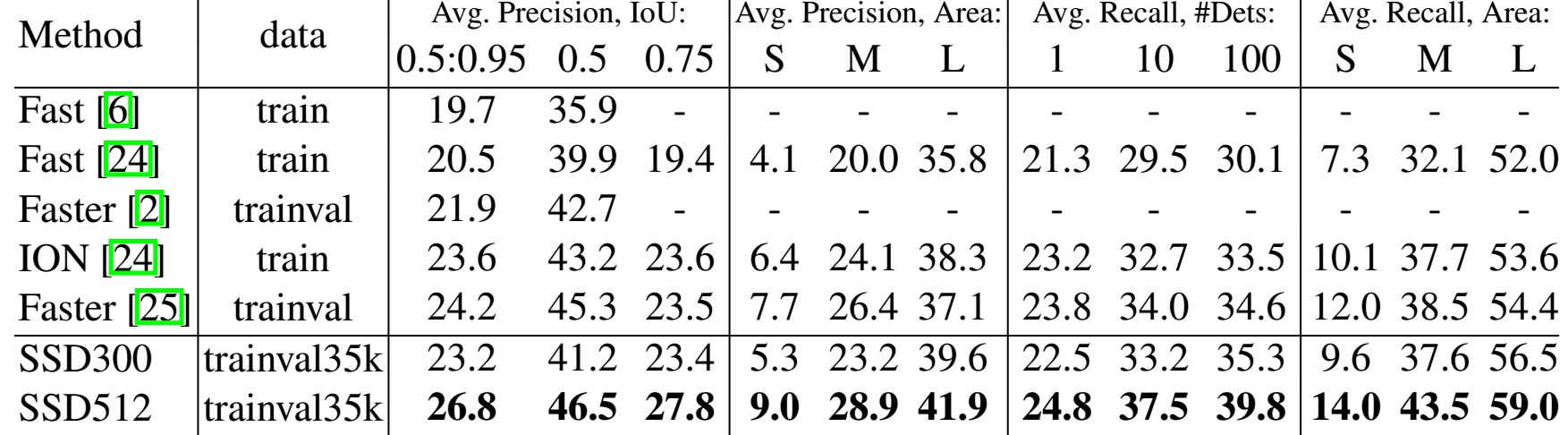

Comparison

PVANET: Deep but Lightweight Neural Networks for Real-time Object Detection (Reading Note)

Inside-Outside Net: Detecting Objects in Context with Skip Pooling and Recurrent Neural Networks (Reading Note)

R-FCN: Object Detection via Region-based Fully Convolutional Networks (Reading Note)

Feature Pyramid Networks for Object Detection (Reading Note)

Beyond Skip Connections: Top-Down Modulation for Object Detection (Reading Note)

YOLO9000: Better, Faster, Stronger (Reading Note)

DSSD: Deconvolutional Single Shot Detector (Reading Note)

Maybe when we are alone we have a chance to look into the deepest corners of our hearts. There we would find many nasty and evil thoughts: hatred, jealousy, prejudice and so on. When and how can we find true peace and settlement? And how can we be less anxious in this world of fierce competition?

Well, today I think I made an important decision for my future and let’s see what will happen!

I ordered flowers through an app that sends me a randomly selected bouquet every Monday morning. The flowers bring liveliness to my life and cheer me up.

It’s been really great to see the world.

Yesterday I installed Qt Creator for Qt 5.8 on my Linux laptop. Everything was all right, but I couldn’t figure out how to start the IDE conveniently: I had to go to the bin directory and click the executable file. I tried starting it from the command line, but then the IDE crashed whenever it opened a dialog window. So I decided to create a desktop shortcut as a workaround.

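To manually create a desktop shortcut, we can create a .desktop file and place it in either /usr/share/applications (for all users) or ~/.local/share/applications (for the current user only). A typical .desktop file looks like the following. The trailing # notes are annotations for the reader only: the format allows comments only on lines of their own, so leave them out of a real file.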

[Desktop Entry]

Encoding=UTF-8

Version=1.0 # version of the Desktop Entry Specification the file follows, not of the app.

Name[en_US]=yEd # name of an app.

GenericName=Graph Editor # generic name describing the kind of app.

Exec=java -jar /opt/yed-3.11.1/yed.jar # command used to launch an app.

Terminal=false # whether the app needs to run in a terminal.

Icon[en_US]=/opt/yed-3.11.1/icons/yicon32.png # location of icon file.

Type=Application # type.

Categories=Application;Network;Security; # categories in which this app should be listed.

Comment[en_US]=yEd Graph Editor # comment which appears as a tooltip.
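For the Qt Creator case, the same kind of file can also be generated with a short script. This is a sketch only: the install path and icon path in the example are hypothetical placeholders (adjust them to wherever Qt Creator actually lives), and the helper names are made up for this note. Only a minimal set of keys is written, with no trailing comments.

```python
from pathlib import Path


def desktop_entry(name, exec_cmd, icon, comment="", terminal=False):
    """Build the text of a minimal .desktop file."""
    lines = [
        "[Desktop Entry]",
        "Type=Application",
        f"Name={name}",
        f"Exec={exec_cmd}",
        f"Icon={icon}",
        f"Terminal={'true' if terminal else 'false'}",
    ]
    if comment:
        lines.append(f"Comment={comment}")
    return "\n".join(lines) + "\n"


def write_desktop_entry(directory, file_name, text):
    """Write the entry into directory (created if missing); return its path."""
    directory = Path(directory)
    directory.mkdir(parents=True, exist_ok=True)
    path = directory / file_name
    path.write_text(text, encoding="utf-8")
    return path


# Hypothetical paths, for illustration only.
entry = desktop_entry(
    name="Qt Creator",
    exec_cmd="/path/to/QtCreator/bin/qtcreator",
    icon="/path/to/QtCreator/icon.png",
    comment="Qt IDE",
)
print(entry)
```

To install it for the current user, something like `write_desktop_entry(Path.home() / ".local/share/applications", "qtcreator.desktop", entry)` should do.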

Using VIM is always a geek thing. However, it is exhausting to remember a large number of commands and shortcuts. Perhaps some really cool plugins can help alleviate the pain.