Sunday was really sunny! Beautiful breakfast, beautiful day!

I said ten days ago that maybe I could make cakes in the future, and today I made that dream come true. This is the first time I have made a cake. It turned out to be not that hard, and it brought me a great sense of pleasure this weekend.

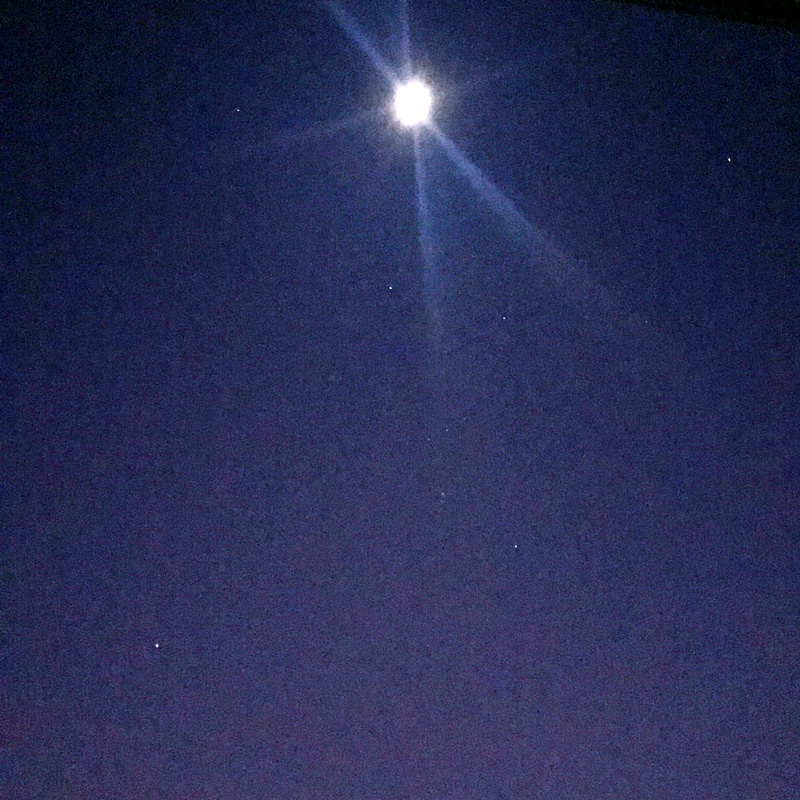

We can see the vague outline of Orion right below the Moon, with Alhena at the top left, Aldebaran at the top right, and Sirius at the bottom left. I haven’t looked at the stars for a long time! I can’t even remember the last time I did.

Orion was the first constellation I learnt, from a children’s book of stories and myths about the stars. I was drawn to Orion by the myth of how he became one of the constellations.

One myth recounts Gaia’s rage at Orion, who dared to say that he would kill every animal on the planet. The angry goddess tried to dispatch Orion with a scorpion. This is given as the reason that the constellations of Scorpius and Orion are never in the sky at the same time. However, Ophiuchus, the Serpent Bearer, revived Orion with an antidote. This is said to be the reason that the constellation of Ophiuchus stands midway between the Scorpion and the Hunter in the sky.

Another interesting thing is that the pyramids in Giza reflect the belt of Orion. I shared these stories with my classmates in senior school when I was giving a speech to the whole class. I think another reason that I like Orion is that I was born in winter and Orion has the brightest stars in winter.

TITLE: Understanding Convolution for Semantic Segmentation

AUTHOR: Panqu Wang, Pengfei Chen, Ye Yuan, Ding Liu, Zehua Huang, Xiaodi Hou, Garrison Cottrell

ASSOCIATION: UC San Diego, CMU, UIUC, TuSimple

FROM: arXiv:1702.08502

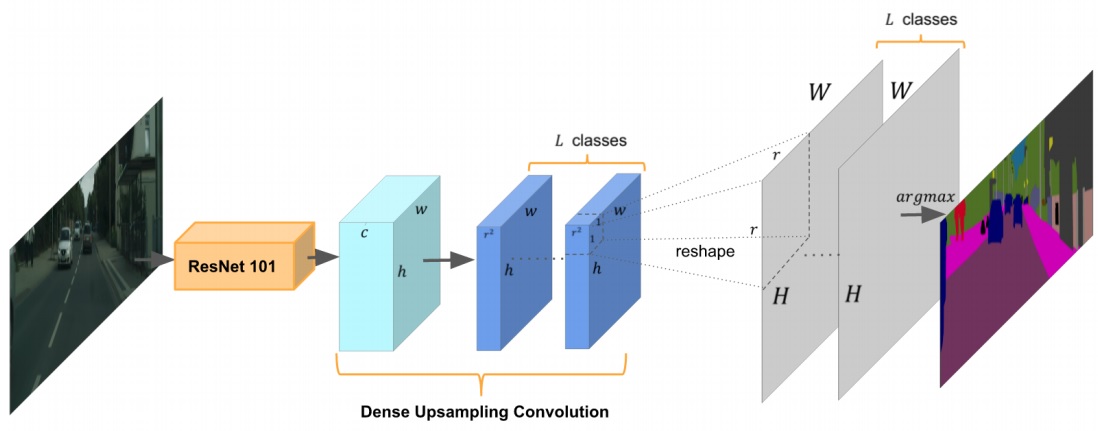

DUC is illustrated in the following figure.

The key idea of DUC is to divide the whole label map into equal subparts which have the same height and width as the incoming feature map. Every feature map in the dark blue part is a corner or a part of the whole output.
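To make the rearrangement concrete, here is a minimal sketch in plain Python. It assumes a downsampling factor `d`, so the network predicts `d * d * num_classes` maps of size `h × w`, and it assumes an interleaved (pixel-shuffle style) channel layout. The function name `duc_rearrange` and the channel ordering are my own assumptions for illustration, not taken from the paper.

```python
def duc_rearrange(channels, d, num_classes):
    """Rearrange d*d*num_classes maps of size h x w into
    num_classes maps of size (h*d) x (w*d).

    Assumed layout: channel l*d*d + dy*d + dx holds the output pixels
    of class l at positions (y*d + dy, x*d + dx).
    """
    assert len(channels) == d * d * num_classes
    h, w = len(channels[0]), len(channels[0][0])
    out = [[[0] * (w * d) for _ in range(h * d)] for _ in range(num_classes)]
    for l in range(num_classes):
        for dy in range(d):
            for dx in range(d):
                sub = channels[l * d * d + dy * d + dx]  # one h x w subpart
                for y in range(h):
                    for x in range(w):
                        out[l][y * d + dy][x * d + dx] = sub[y][x]
    return out


# Toy input: 4 channels of size 2 x 2, i.e. d = 2 and a single class.
channels = [[[c * 10 + y * 2 + x for x in range(2)] for y in range(2)]
            for c in range(4)]
label_map = duc_rearrange(channels, d=2, num_classes=1)
for row in label_map[0]:
    print(row)
```

Each of the four input channels ends up supplying exactly one quarter of the 4 × 4 output, which is the "equal subparts" idea in miniature.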

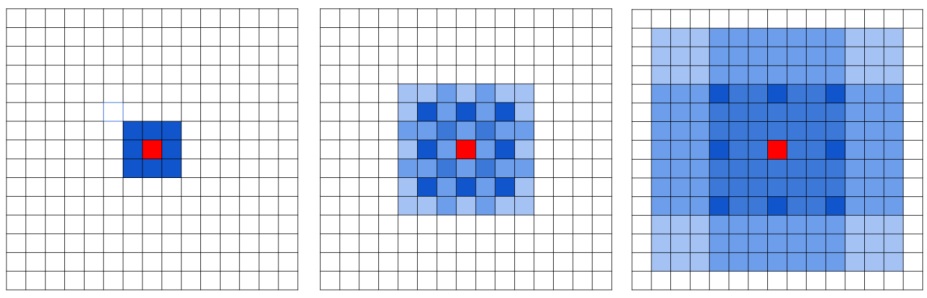

HDC is illustrated in the following figure.

Instead of using the same dilation rate for all layers after the downsampling occurs, a different dilation rate is used for each layer. The pixels (marked in blue) contribute to the calculation of the center pixel (marked in red) through three convolution layers with kernel size 3 × 3. Subsequent convolutional layers have dilation rates of r = 1, 2, 3, respectively.
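A small sketch can show which input pixels reach the center pixel. It works along a single axis for simplicity (for square kernels the 2D pattern is the product of two such axes), and the helper name `contributing_offsets` is hypothetical. The rates `[2, 2, 2]` are just my example of "the same dilation rate for all layers".

```python
def contributing_offsets(dilation_rates, kernel_size=3):
    """Return the sorted 1D offsets (relative to the center pixel) that
    contribute to it after stacking dilated convolutions."""
    half = kernel_size // 2
    offsets = {0}
    for r in dilation_rates:
        taps = [k * r for k in range(-half, half + 1)]  # e.g. [-r, 0, r]
        offsets = {o + t for o in offsets for t in taps}
    return sorted(offsets)


hdc = contributing_offsets([1, 2, 3])   # different rate per layer
same = contributing_offsets([2, 2, 2])  # same rate for all layers

print(len(hdc), hdc)
print(len(same), same)
```

With rates 1, 2, 3 every offset from -6 to 6 contributes, while with a constant rate of 2 only the even offsets do, so half of the neighbouring pixels are never seen.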

Wow~~~ It’s the first time that I baked something. Hmm… perhaps I should call them caterpillar cookies :) Maybe in the future I can make cakes.

TITLE: Learning to Detect Human-Object Interactions

AUTHOR: Yu-Wei Chao, Yunfan Liu, Xieyang Liu, Huayi Zeng, Jia Deng

ASSOCIATION: University of Michigan Ann Arbor, Washington University in St. Louis

FROM: arXiv:1702.05448

HO-RCNN detects HOIs in two steps.

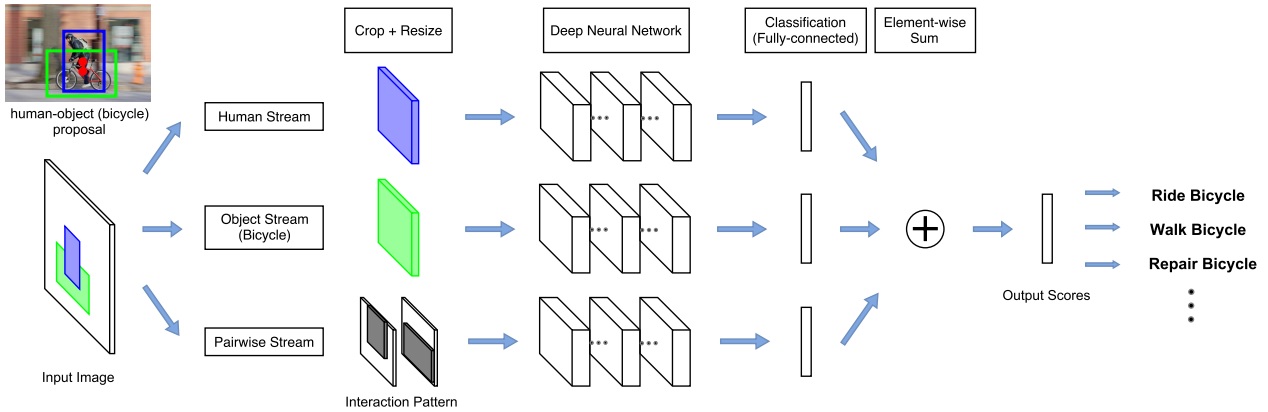

The network adopts a multi-stream architecture to extract features on the detected humans, objects, and human-object spatial relations, as illustrated in the following figure.

Assuming a list of HOI categories of interest (e.g. “riding a horse”, “eating an apple”) is given beforehand, bounding boxes for humans and the object categories of interest (e.g. “horse”, “apple”) are generated by detectors. The human-object proposals are generated by pairing the detected humans and the detected objects of interest.

The multi-stream architecture is composed of three streams: the human stream, the object stream, and the pairwise stream.

The last layer of each stream is a binary classifier that outputs a confidence score for the HOI. The final confidence score is obtained by summing the scores over all streams.

Human and Object Stream

An image patch is cropped according to the bounding box (human/object) and is resized to a fixed size. Then the image patch is sent to a CNN to be classified and given a confidence score for an HOI.

Pairwise Stream

Given a pair of bounding boxes, its Interaction Pattern is a binary image with two channels: The first channel has value 1 at pixels enclosed by the first bounding box, and value 0 elsewhere; the second channel has value 1 at pixels enclosed by the second bounding box, and value 0 elsewhere. In this work, the first bounding box is for humans, and the second bounding box is for objects.

The Interaction Patterns should be invariant to any joint translation of the bounding box pair, so the pixels outside the “attention window”, i.e. the tightest window enclosing the two bounding boxes, are removed from the Interaction Pattern. The aspect ratio of Interaction Patterns should also be fixed. Two methods are used: one warps the patch, and the other extends the shorter side of the patch to meet the required ratio.
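The construction above can be sketched in a few lines of plain Python. This is only an illustration under my own assumptions: boxes are integer `(x1, y1, x2, y2)` tuples with the right and bottom edges exclusive, the function name `interaction_pattern` is hypothetical, and the final resizing to a fixed aspect ratio is left out.

```python
def interaction_pattern(human_box, object_box):
    """Build a two-channel binary pattern cropped to the attention window.

    Boxes are (x1, y1, x2, y2) in integer pixels, x2/y2 exclusive (assumed).
    Channel 0 marks the human box, channel 1 marks the object box.
    """
    boxes = [human_box, object_box]
    # Attention window: the tightest window enclosing both boxes.
    ax1 = min(b[0] for b in boxes)
    ay1 = min(b[1] for b in boxes)
    ax2 = max(b[2] for b in boxes)
    ay2 = max(b[3] for b in boxes)
    width, height = ax2 - ax1, ay2 - ay1

    pattern = []
    for x1, y1, x2, y2 in boxes:
        channel = [[1 if (x1 <= ax1 + col < x2 and y1 <= ay1 + row < y2) else 0
                    for col in range(width)]
                   for row in range(height)]
        pattern.append(channel)
    return pattern


pattern = interaction_pattern((10, 10, 12, 13), (11, 12, 14, 14))
# Shifting both boxes by the same amount gives the same pattern.
shifted = interaction_pattern((110, 60, 112, 63), (111, 62, 114, 64))
print(pattern == shifted)
```

Because everything is expressed relative to the attention window, a joint translation of the two boxes leaves the pattern unchanged.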

To extend to multiple HOI classes, one binary classifier is trained for each HOI class at the last layer of each stream. The final score is summed over all streams separately for each HOI class.
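The score fusion is simple enough to write down directly. The sketch below assumes each stream reports one confidence score per HOI class. The function name `fuse_scores` and the numbers are made up for illustration.

```python
def fuse_scores(stream_scores):
    """Sum per-class confidence scores over all streams.

    stream_scores maps a stream name to a dict of {HOI class: score}.
    """
    classes = list(next(iter(stream_scores.values())).keys())
    return {c: sum(scores[c] for scores in stream_scores.values())
            for c in classes}


# Hypothetical scores for one human-object proposal.
stream_scores = {
    "human":    {"riding a horse": 0.5,   "eating an apple": 0.125},
    "object":   {"riding a horse": 0.25,  "eating an apple": 0.25},
    "pairwise": {"riding a horse": 0.125, "eating an apple": 0.0},
}
final = fuse_scores(stream_scores)
print(final)
```

Each HOI class keeps its own sum, so a proposal can score high for one interaction and low for another.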

Moved to a new apartment.

TITLE: Adversarial Discriminative Domain Adaptation

AUTHOR: Eric Tzeng, Judy Hoffman, Kate Saenko, Trevor Darrell

ASSOCIATION: UC Berkeley, Stanford University, Boston University

FROM: arXiv:1702.05464

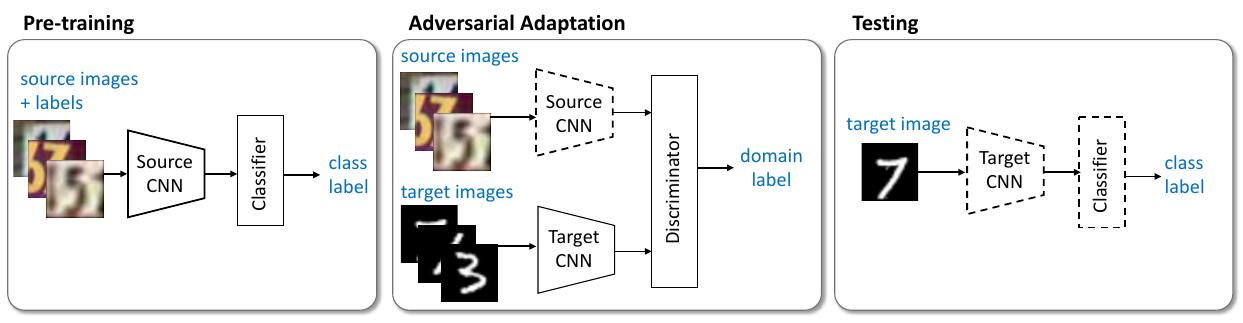

The main idea of this work is to find a mapping function that projects the target data (the data used for testing) into the domain of the source data (the data used for training). The training procedure is illustrated in the following figure.

As the figure shows, first pre-train a source encoder CNN using labeled source image examples. Next, perform adversarial adaptation by learning a target encoder CNN such that a discriminator that sees encoded source and target examples cannot reliably predict their domain label. During testing, target images are mapped with the target encoder to the shared feature space and classified by the source classifier. Dashed lines indicate fixed network parameters.

The ADDA method can be formalized as:

The first formula is typical supervised learning. The second formula is just like the one proposed for GANs: it is used to learn a discriminator that tells target data from source data. The first term can be ignored because $M_s$ is fixed. The third formula is used to learn $M_t$, which maps data from the target domain to the source domain.
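For reference, here are the three objectives as I understand them from the paper. This is my own transcription, so the notation is an assumption: $C$ is the classifier, $D$ the discriminator, $M_s$ and $M_t$ the source and target encoders, $K$ the number of classes, and $M_s$ is kept fixed in the last two lines.

$$
\begin{aligned}
\min_{M_s, C} \ \mathcal{L}_{\mathrm{cls}}(X_s, Y_s) &= -\mathbb{E}_{(x_s, y_s) \sim (X_s, Y_s)} \sum_{k=1}^{K} \mathbb{1}_{[k = y_s]} \log C(M_s(x_s)) \\
\min_{D} \ \mathcal{L}_{\mathrm{adv}_D}(X_s, X_t, M_s, M_t) &= -\mathbb{E}_{x_s \sim X_s} \left[ \log D(M_s(x_s)) \right] - \mathbb{E}_{x_t \sim X_t} \left[ \log \left( 1 - D(M_t(x_t)) \right) \right] \\
\min_{M_t} \ \mathcal{L}_{\mathrm{adv}_M}(X_s, X_t, D) &= -\mathbb{E}_{x_t \sim X_t} \left[ \log D(M_t(x_t)) \right]
\end{aligned}
$$

The third line uses inverted labels, as in the standard GAN generator loss: $M_t$ is trained so that the discriminator labels encoded target examples as source.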

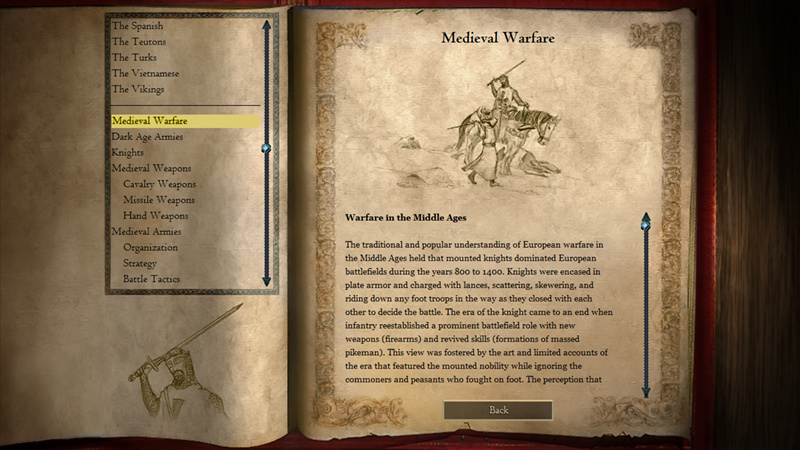

I’ve been a great fan of ancient history and warfare since my childhood. Maybe the first spark came from the Age of Empires series of computer games. I didn’t know about Joan of Arc until I played her campaign in Age of Empires II when I was 11 or 12 years old. The story in the game was so engaging that I searched the Internet to find out who Joan of Arc was and what she did. I was touched by her patriotic acts and sacrifices. I even became interested in French history and wanted to study French at university, though I finally chose EE as my major and became an engineer in AI.

I played Age of Empires II HD for a while this weekend because I found it on sale on Steam. It brought me back to my childhood and to the memories of playing this game with my friends. We had fun playing, read stories of heroes, and quarrelled about who was the greatest one in history. This is a classic computer game.